Thread

Image generation models are causing a sensation worldwide, particularly the powerful Stable Diffusion technique 🔥.

With Stable Diffusion, you can generate images on your own laptop, something that was previously out of reach 🤩.

Here's how diffusion models work, in plain English:

--A Thread--

🧵

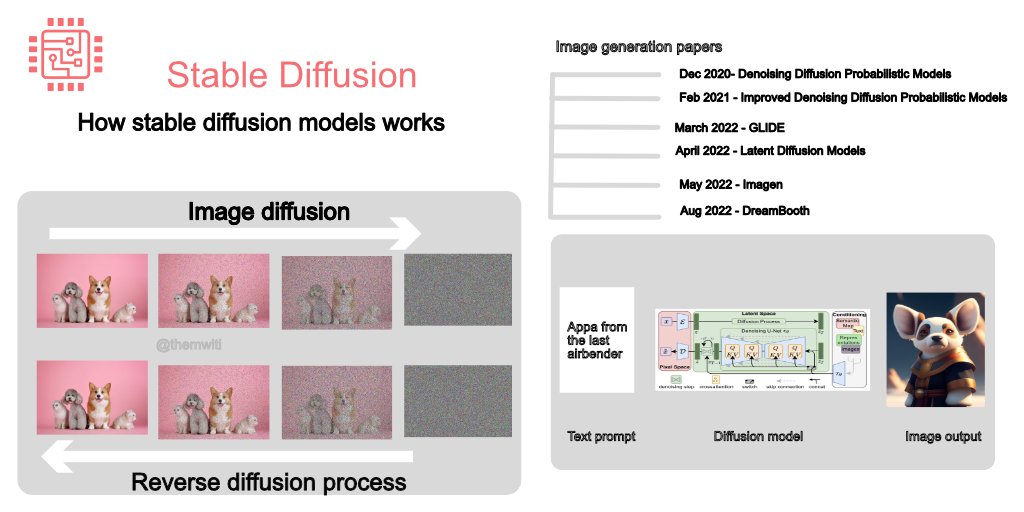

1/ Generating images involves two processes.

Forward diffusion gradually adds noise to an image until it's unrecognizable, and a reverse diffusion process removes that noise.

Once trained, the model generates new images by starting from a pure-noise image and denoising it.
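
To make the forward (noising) half concrete, here is a minimal NumPy sketch. The linear noise schedule, the 1000 steps, and the names `make_alpha_bars` and `add_noise` are assumptions for illustration, not something this thread specifies.

```python
import numpy as np

def make_alpha_bars(num_steps=1000, beta_start=1e-4, beta_end=0.02):
    """Fraction of the original signal that survives up to each step (assumed linear schedule)."""
    betas = np.linspace(beta_start, beta_end, num_steps)
    return np.cumprod(1.0 - betas)

def add_noise(image, t, alpha_bars, rng):
    """Jump straight to step t of the forward process by mixing image and Gaussian noise."""
    noise = rng.standard_normal(image.shape)
    noisy = np.sqrt(alpha_bars[t]) * image + np.sqrt(1.0 - alpha_bars[t]) * noise
    return noisy, noise

rng = np.random.default_rng(0)
image = rng.uniform(-1.0, 1.0, size=(8, 8))  # toy stand-in for a real image
alpha_bars = make_alpha_bars()

slightly_noisy, _ = add_noise(image, 10, alpha_bars, rng)   # still looks like the image
mostly_noise, _ = add_noise(image, 999, alpha_bars, rng)    # almost nothing of the image left
```

Early steps keep nearly all of the image, while the last step keeps almost none of it, which is the "until it's unrecognizable" part.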

2/ Denoising is done with a convolutional neural network such as a U-Net.

A U-Net is made up of an encoder that compresses the image into a latent representation and a decoder that rebuilds an image from that low-dimensional representation.
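
A rough way to picture the encoder/decoder shape is to trace array sizes through one down/up level. This is only a sketch: the learned convolutions are replaced by fixed pooling and pixel repetition, and the skip connection comes from the general U-Net design, not from this thread. All function names are hypothetical.

```python
import numpy as np

def downsample(x):
    """Halve height and width with 2x2 average pooling (stand-in for an encoder block)."""
    c, h, w = x.shape
    return x.reshape(c, h // 2, 2, w // 2, 2).mean(axis=(2, 4))

def upsample(x):
    """Double height and width by repeating pixels (stand-in for a decoder block)."""
    return x.repeat(2, axis=1).repeat(2, axis=2)

def toy_unet_pass(x):
    """Trace shapes through one encoder/decoder level with a skip connection."""
    skip = x                      # encoder features saved for the decoder
    latent = downsample(x)        # compact representation
    restored = upsample(latent)   # back to the input resolution
    # A real U-Net would now apply learned convolutions to the merged features.
    merged = np.concatenate([restored, skip], axis=0)
    return latent, merged

x = np.random.default_rng(0).standard_normal((3, 16, 16))  # (channels, height, width)
latent, merged = toy_unet_pass(x)
print(latent.shape, merged.shape)  # (3, 8, 8) (6, 16, 16)
```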

3/ Noise is not removed all at once. It is removed gradually over a fixed number of steps.

Removing noise step by step makes generating an image from pure noise easier.

At each step, the goal is simply to improve on the result of the previous step.
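
The step-by-step loop can be sketched like this. Big assumptions: the update is a deterministic DDIM-style step (the thread doesn't name a sampler), the schedule is linear, and `oracle_predict_noise` is a hypothetical stand-in that cheats by knowing the clean image. In a real system a trained U-Net estimates the noise instead.

```python
import numpy as np

def make_alpha_bars(num_steps=1000, beta_start=1e-4, beta_end=0.02):
    """Fraction of signal kept up to each step (assumed linear schedule)."""
    betas = np.linspace(beta_start, beta_end, num_steps)
    return np.cumprod(1.0 - betas)

def oracle_predict_noise(x_t, clean, alpha_bar_t):
    """Hypothetical stand-in for a trained U-Net: uses the clean image to find the noise."""
    return (x_t - np.sqrt(alpha_bar_t) * clean) / np.sqrt(1.0 - alpha_bar_t)

def denoise(clean, alpha_bars, rng):
    """Walk from pure noise back toward an image, one small step at a time."""
    x = rng.standard_normal(clean.shape)  # start from pure noise
    errors = []
    for t in range(len(alpha_bars) - 1, -1, -1):
        eps = oracle_predict_noise(x, clean, alpha_bars[t])
        # Current best guess of the clean image given the predicted noise.
        x0_guess = (x - np.sqrt(1.0 - alpha_bars[t]) * eps) / np.sqrt(alpha_bars[t])
        # Move to the slightly less noisy level of the previous time step.
        alpha_bar_prev = alpha_bars[t - 1] if t > 0 else 1.0
        x = np.sqrt(alpha_bar_prev) * x0_guess + np.sqrt(1.0 - alpha_bar_prev) * eps
        errors.append(float(np.abs(x - clean).mean()))
    return x, errors

rng = np.random.default_rng(0)
clean = rng.uniform(-1.0, 1.0, size=(8, 8))  # toy stand-in for a real image
result, errors = denoise(clean, make_alpha_bars(), rng)
```

`errors` records how far the sample is from the clean image after each step, so you can see it shrink as the loop runs instead of disappearing in one jump.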

4/ Trying to generate the image in a single step leaves it noisy.

So at each time step, only a fraction of the noise is removed, not all of it.

The same idea is used in text-to-image generation, where the text information is injected gradually, at every step, instead of all at once.

5/ The text information is added by concatenating the text representation from a language model to the image input, and also through cross-attention.

Cross-attention lets the attention layers in the CNN attend to the text tokens.
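
Here is a minimal single-head cross-attention sketch in NumPy. The sizes (16 image positions, 5 text tokens) and the random projection matrices are made up for illustration. In a trained model the projections are learned.

```python
import numpy as np

def softmax(x, axis=-1):
    """Numerically stable softmax."""
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def cross_attention(image_feats, text_feats, w_q, w_k, w_v):
    """Image positions supply the queries; text tokens supply the keys and values."""
    q = image_feats @ w_q                      # (positions, d)
    k = text_feats @ w_k                       # (tokens, d)
    v = text_feats @ w_v                       # (tokens, d)
    scores = q @ k.T / np.sqrt(q.shape[-1])    # (positions, tokens)
    weights = softmax(scores, axis=-1)         # per position: how much to read from each token
    return weights @ v, weights

rng = np.random.default_rng(0)
image_feats = rng.standard_normal((16, 8))   # 16 image positions, 8 channels (toy sizes)
text_feats = rng.standard_normal((5, 12))    # 5 text tokens, 12-dim embeddings (toy sizes)
w_q = rng.standard_normal((8, 4))
w_k = rng.standard_normal((12, 4))
w_v = rng.standard_normal((12, 4))

out, weights = cross_attention(image_feats, text_feats, w_q, w_k, w_v)
print(out.shape, weights.shape)  # (16, 4) (16, 5)
```

Each row of `weights` sums to 1, so every image position gets its own blend of the text tokens.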

6/ Diffusion models are compute-intensive because the denoising process runs for many steps.

One way to reduce the cost is to train the network on small images and add a second network that upsamples the result to a larger size.

7/ Latent diffusion models (LDMs) tackle this problem by generating the image in latent space instead of image space.

An LDM creates a low-dimensional representation of the image by passing it through an encoder network. Noise is then applied to that representation instead of to the image itself.

8/ The reverse diffusion process also works on the low-dimensional representation instead of the image itself. A decoder then turns the denoised representation back into an image.

This is less compute-intensive because the model is not working with full-resolution pixels. As a result, you can run image generation on your laptop.
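
A quick back-of-the-envelope check shows how much smaller the latent is. The numbers are assumptions taken from the commonly cited Stable Diffusion v1 setup (512x512 RGB image, 8x downsampling, 4 latent channels). The thread itself doesn't state them.

```python
def num_values(height, width, channels):
    """Count the numbers the denoiser has to process for one image."""
    return height * width * channels

# Assumed Stable Diffusion v1 setup: 512x512 RGB, 8x downsampling, 4 latent channels.
pixel_values = num_values(512, 512, 3)
latent_values = num_values(512 // 8, 512 // 8, 4)
reduction = pixel_values / latent_values

print(pixel_values, latent_values, reduction)  # 786432 16384 48.0
```

Under those assumptions, every denoising step touches 48 times fewer values than it would in pixel space.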

9/9 You can try out Stable Diffusion models on:

• @huggingface: huggingface.co/spaces/stabilityai/stable-diffusion (running Stable Diffusion 2.1)

• lexica.art

• dreamstudio.ai

That's it for today. Follow @themwiti for more posts on machine learning and deep learning.

Mentions

Akshay 🚀 @akshay_pachaar · Mar 14, 2023

Well written thread!!

Jaydeep Karale @_jaydeepkarale · Mar 14, 2023

Superb explanation