Thread

Are you still deploying uncompressed ML models in 2023?

STOP.

Apply Gradual Magnitude Pruning (GMP), the current KING in ML model pruning, to reduce the size of large models by 20X without loss of accuracy.

Here's how to apply GMP in 5 steps.

--A Thread--

🧵

STOP.

Apply Gradual Magnitude Pruning (GMP), the current KING in ML model pruning, to reduce the size of large models by 20X without loss of accuracy.

Here's how to apply GMP in 5 steps.

--A Thread--

🧵

Step 1: Train a network

Start by training a network in the normal way.

Start by training a network in the normal way.

Step 2: Stabilize the model

Stabilization is done by retraining the network at a high learning rate, enabling the process to converge to a stable point before pruning starts.

A learning rate that's too high can cause the model to diverge or fail to train during pruning.

Stabilization is done by retraining the network at a high learning rate, enabling the process to converge to a stable point before pruning starts.

A learning rate that's too high can cause the model to diverge or fail to train during pruning.

Step 3: Start pruning

At the start of epoch 1, set the sparsity for all layers to be pruned to 5%.

Epoch values and sparsity will vary based on the model's training process.

Pruning should be done for a third to half of the training time.

At the start of epoch 1, set the sparsity for all layers to be pruned to 5%.

Epoch values and sparsity will vary based on the model's training process.

Pruning should be done for a third to half of the training time.

Step 4: Remove weights iteratively

Iteratively set the weights closest to zero to 0 once per epoch until 90% sparsity is reached at epoch 35.

Why not prune all the weights at once?

Pruning in steps enables the network to regularize and adjust weights to reconverge.

Iteratively set the weights closest to zero to 0 once per epoch until 90% sparsity is reached at epoch 35.

Why not prune all the weights at once?

Pruning in steps enables the network to regularize and adjust weights to reconverge.

Step 5: Hold sparsity constant

Hold the sparsity constant at 90%, continue training, and reduce the learning rate until epoch 60 to ensure the model recovers from any loss suffered during the pruning process.

Fine-tuning is done for less than one-fourth of the training time.

Hold the sparsity constant at 90%, continue training, and reduce the learning rate until epoch 60 to ensure the model recovers from any loss suffered during the pruning process.

Fine-tuning is done for less than one-fourth of the training time.

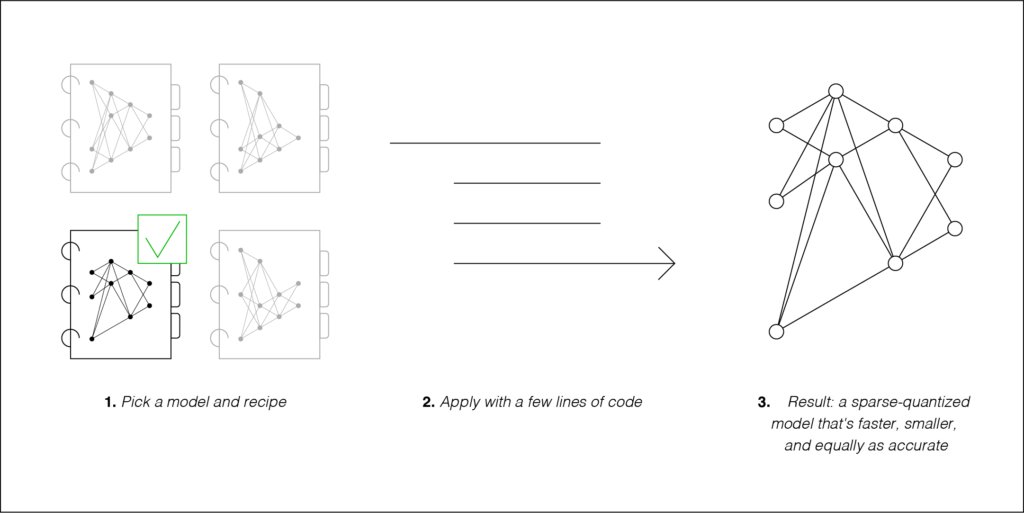

You can implement GMP from scratch, or you can try pruning your model with SparseML.

SparseML uses GMP and a host of other state-of-the-art pruning and quantization techniques to compress the model without loss of accuracy.

Check this out 👇🏻

github.com/neuralmagic/sparseml

SparseML uses GMP and a host of other state-of-the-art pruning and quantization techniques to compress the model without loss of accuracy.

Check this out 👇🏻

github.com/neuralmagic/sparseml

Follow @themwiti for more content on machine learning and deep learning.

Follow @themwiti for more ML and deep learning content.

Mentions

See All

Akshay 🚀 @akshay_pachaar

·

Mar 9, 2023

This is one fine thread!