Training NLP and CV models from scratch is often a waste of resources.

Instead, apply transfer learning using pre-trained models.

Here's how transfer learning works in 6 steps.

--A Thread--

🧵

Step 1: Obtain the pre-trained model

You can obtain pre-trained models from various places, such as:

• TensorFlow Hub

• Kaggle models

• @neuralmagic's SparseZoo

• Hugging Face

• Keras applications

• PyTorch Hub

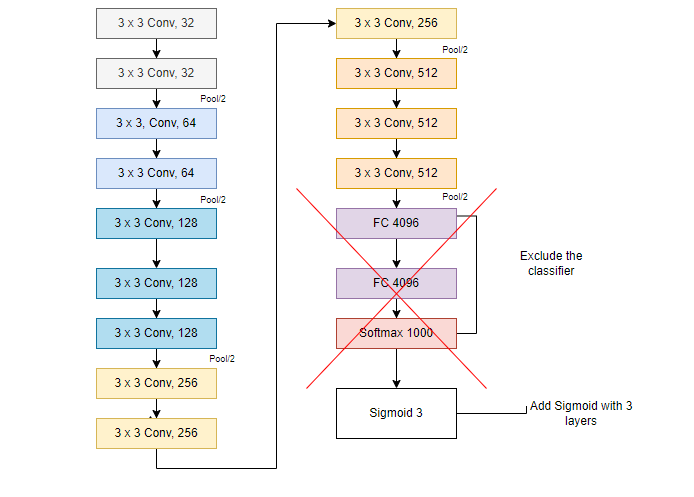

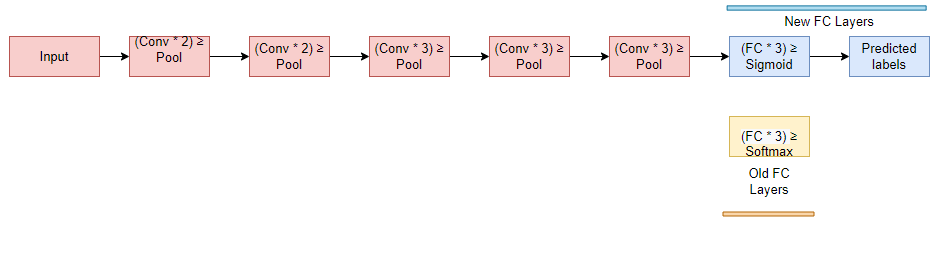
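
Here's a minimal sketch of pulling a model from Keras applications, assuming TensorFlow 2.x is installed. MobileNetV2 and the helper name `load_pretrained_classifier` are illustrative choices, not something the thread prescribes.

```python
from tensorflow import keras


def load_pretrained_classifier(weights="imagenet"):
    """Load MobileNetV2 with its original 1000-class ImageNet head.

    weights="imagenet" downloads the pre-trained weights on first use.
    weights=None builds the same architecture with random weights,
    which is handy for offline checks but means training from scratch.
    """
    return keras.applications.MobileNetV2(
        input_shape=(224, 224, 3),  # resolution the ImageNet head was trained on
        include_top=True,           # keep the original classification layer
        weights=weights,
    )
```

Calling `load_pretrained_classifier()` gives you a model that already predicts the 1000 ImageNet classes. The remaining steps adapt it to your own task.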

Step 2: Create a base model

Define a base model using the pre-trained weights. If you don't load the pre-trained weights, you'll be training the model from scratch.

Drop the model's output layer so that you can define a new one that's specific to your problem.
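
In Keras, dropping the output layer is done with `include_top=False`. A minimal sketch, assuming MobileNetV2 as the pre-trained model and 160x160 RGB inputs; `create_base_model` is a hypothetical helper name.

```python
from tensorflow import keras


def create_base_model(input_shape=(160, 160, 3), weights="imagenet"):
    # include_top=False drops the ImageNet classification head
    # (global pooling + the 1000-way Dense layer), leaving only the
    # convolutional feature extractor with its pre-trained weights.
    return keras.applications.MobileNetV2(
        input_shape=input_shape,
        include_top=False,
        weights=weights,
    )
```

For 160x160 inputs, this base model outputs a 5x5x1280 feature map per image instead of class probabilities.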

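Step 3: Freeze the layers so they don’t change during training

Set base_model.trainable = False so the pre-trained weights are not updated during training. Freezing doesn't re-initialize anything; it stops the optimizer from changing the learned weights, so you don't lose the information the model has already learned.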
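
In Keras, freezing is a single attribute on the model. A minimal sketch, assuming MobileNetV2 as an example base model; `create_frozen_base` is a hypothetical helper name.

```python
from tensorflow import keras


def create_frozen_base(weights="imagenet"):
    base_model = keras.applications.MobileNetV2(
        input_shape=(160, 160, 3), include_top=False, weights=weights
    )
    # Freeze every layer in the base model: the optimizer will skip these
    # weights during training. Their current values are left untouched.
    base_model.trainable = False
    return base_model
```

After this, `base_model.trainable_weights` is empty and all of the base's weights are listed in `base_model.non_trainable_weights` instead.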

Step 4: Add new trainable layers

Add new trainable layers on top of the frozen ones.

In particular, add a new final dense layer to replace the output layer you removed in Step 2.
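
Here's a minimal Keras sketch of a new head on a frozen base, assuming MobileNetV2 as the base, images with pixel values in [0, 255], and a hypothetical `num_classes` for your problem. The pooling and dropout layers are common choices, not requirements.

```python
from tensorflow import keras

IMG_SHAPE = (160, 160, 3)


def build_transfer_model(num_classes, weights="imagenet"):
    base_model = keras.applications.MobileNetV2(
        input_shape=IMG_SHAPE, include_top=False, weights=weights
    )
    base_model.trainable = False  # keep the pre-trained layers frozen

    inputs = keras.Input(shape=IMG_SHAPE)
    # MobileNetV2 expects pixels in [-1, 1]; this maps [0, 255] to that range.
    x = keras.layers.Rescaling(scale=1 / 127.5, offset=-1)(inputs)
    # training=False runs the base (including its BatchNormalization layers)
    # in inference mode, which also matters if you unfreeze it later.
    x = base_model(x, training=False)
    # New trainable head: pool the feature map, then a fresh output layer.
    x = keras.layers.GlobalAveragePooling2D()(x)
    x = keras.layers.Dropout(0.2)(x)
    outputs = keras.layers.Dense(num_classes, activation="softmax")(x)
    return keras.Model(inputs, outputs)
```

Only the final `Dense` layer's kernel and bias are trainable here; everything inside the base model stays frozen.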

Step 5: Train the new layers on your dataset

Train the model on your new dataset. Because the pre-trained weights are frozen, only the new layers are updated.

This step alone often gives good results.
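
A minimal Keras sketch of this step, assuming MobileNetV2 as the frozen base, `x_train` as an array of images with pixel values in [0, 255], and `y_train` as integer class labels. The helper names, the Adam learning rate of 1e-3, and the epoch count are placeholder choices.

```python
import numpy as np
from tensorflow import keras

IMG_SHAPE = (160, 160, 3)


def build_transfer_model(num_classes, weights="imagenet"):
    """Frozen MobileNetV2 base plus a new softmax head."""
    base_model = keras.applications.MobileNetV2(
        input_shape=IMG_SHAPE, include_top=False, weights=weights
    )
    base_model.trainable = False  # pre-trained weights stay fixed
    inputs = keras.Input(shape=IMG_SHAPE)
    x = keras.layers.Rescaling(scale=1 / 127.5, offset=-1)(inputs)  # [0, 255] -> [-1, 1]
    x = base_model(x, training=False)
    x = keras.layers.GlobalAveragePooling2D()(x)
    outputs = keras.layers.Dense(num_classes, activation="softmax")(x)
    return keras.Model(inputs, outputs), base_model


def train_new_layers(model, x_train, y_train, epochs=5):
    """Train the model; only the unfrozen head gets updated."""
    model.compile(
        optimizer=keras.optimizers.Adam(learning_rate=1e-3),
        loss="sparse_categorical_crossentropy",  # integer class labels
        metrics=["accuracy"],
    )
    x_train = np.asarray(x_train, dtype="float32")
    return model.fit(x_train, y_train, epochs=epochs, batch_size=32, verbose=0)
```

Since the base is frozen, its weights are identical before and after `train_new_layers`; only the new `Dense` layer changes.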

Step 6: Improve the model via fine-tuning

You can improve the model's performance further by unfreezing the base model and retraining the entire model with a low learning rate.

The low learning rate keeps the weight updates small, which reduces the risk of overfitting and of destroying the pre-trained features.
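
A minimal Keras sketch of fine-tuning, assuming MobileNetV2 as the base and that the new head has already been trained with the base frozen. The helper names are hypothetical, and the learning rate of 1e-5 is a common starting point, not a tuned value.

```python
import numpy as np
from tensorflow import keras

IMG_SHAPE = (160, 160, 3)


def build_transfer_model(num_classes, weights="imagenet"):
    """Frozen MobileNetV2 base plus a new softmax head."""
    base_model = keras.applications.MobileNetV2(
        input_shape=IMG_SHAPE, include_top=False, weights=weights
    )
    base_model.trainable = False
    inputs = keras.Input(shape=IMG_SHAPE)
    x = keras.layers.Rescaling(scale=1 / 127.5, offset=-1)(inputs)  # [0, 255] -> [-1, 1]
    x = base_model(x, training=False)  # BatchNorm stays in inference mode
    x = keras.layers.GlobalAveragePooling2D()(x)
    outputs = keras.layers.Dense(num_classes, activation="softmax")(x)
    return keras.Model(inputs, outputs), base_model


def fine_tune(model, base_model, x_train, y_train, epochs=5, learning_rate=1e-5):
    """Unfreeze the base and retrain the whole model with a small learning rate."""
    base_model.trainable = True
    # Recompile so Keras picks up the new `trainable` setting.
    model.compile(
        optimizer=keras.optimizers.Adam(learning_rate=learning_rate),
        loss="sparse_categorical_crossentropy",
        metrics=["accuracy"],
    )
    x_train = np.asarray(x_train, dtype="float32")
    return model.fit(x_train, y_train, epochs=epochs, batch_size=32, verbose=0)
```

The base is still called with `training=False`, so its BatchNormalization layers keep their learned statistics during fine-tuning, which is what the Keras transfer learning guide recommends.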

If you enjoyed this guide on transfer learning, you might also like the full guide on my blog, which has complete NLP and CV examples.

www.machinelearningnuggets.com/transfer-learning-guide

Follow @themwiti for more threads on machine learning and deep learning.
