Thread

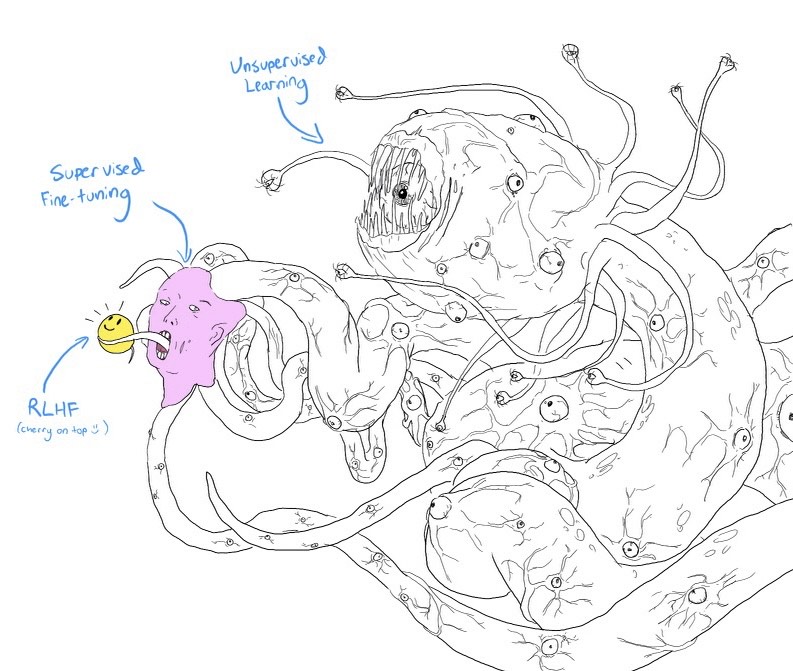

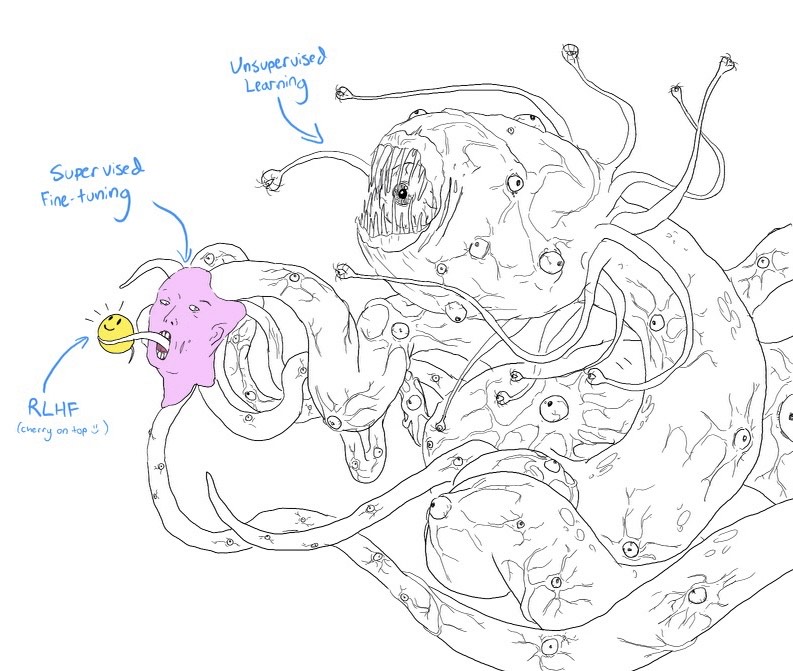

If you spend much time on AI twitter, you might have seen this tentacle monster hanging around. But what is it, and what does it have to do with ChatGPT?

It's kind of a long story. But it's worth it! It even ends with cake 🍰

THREAD:

It's kind of a long story. But it's worth it! It even ends with cake 🍰

THREAD:

First, some basics of how language models like ChatGPT work:

Basically, the way you train a language model is by giving it insane quantities of text data and asking it over and over to predict what word[1] comes next after a given passage.

Eventually, it gets very good at this.

Basically, the way you train a language model is by giving it insane quantities of text data and asking it over and over to predict what word[1] comes next after a given passage.

Eventually, it gets very good at this.

This training is a type of ✨unsupervised learning✨[2]

It's called that because the data (mountains of text scraped from the internet/books/etc) is just raw information—it hasn't been structured and labeled into nice input-output pairs (like, say, a database of images+labels).

It's called that because the data (mountains of text scraped from the internet/books/etc) is just raw information—it hasn't been structured and labeled into nice input-output pairs (like, say, a database of images+labels).

But it turns out models trained that way, by themselves, aren't all that useful.

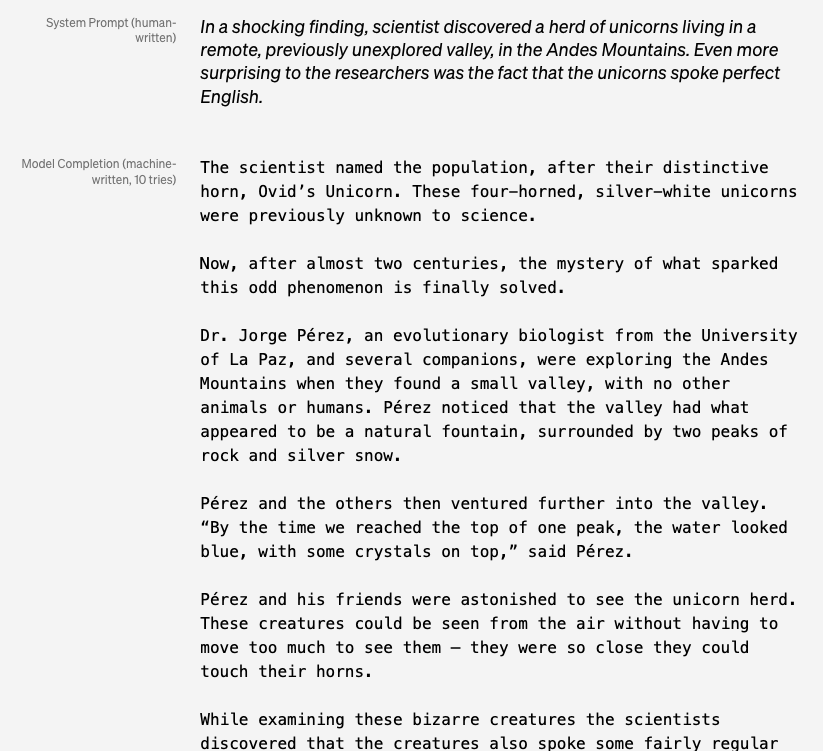

They can do some cool stuff, like generating a news article to match a lede. But they often find ways to generate plausible-seeming text completions that really weren't what you were going for.[3]

They can do some cool stuff, like generating a news article to match a lede. But they often find ways to generate plausible-seeming text completions that really weren't what you were going for.[3]

So researchers figured out some ways to make them work better.

One basic trick is "fine-tuning": You partially retrain the model using data specifically for the task you care about.

One basic trick is "fine-tuning": You partially retrain the model using data specifically for the task you care about.

If you're training a customer service bot, for instance, then maybe you pay some human customer service agents to look at real customer questions and write examples of good responses.

Then you use that nice clean dataset of question-response pairs to tweak the model.

Then you use that nice clean dataset of question-response pairs to tweak the model.

Unlike the original training, this approach is "supervised" because the data you're using is structured as well-labeled input-output pairs.

So you could also call it ✨supervised fine-tuning✨

So you could also call it ✨supervised fine-tuning✨

Another trick is called "reinforcement learning from human feedback," or RLHF.

The way reinforcement learning *usually* works is that you tell an AI model to maximize some kind of score—like points in a video game—then let it figure out how to do that.

RLHF is a bit trickier:

The way reinforcement learning *usually* works is that you tell an AI model to maximize some kind of score—like points in a video game—then let it figure out how to do that.

RLHF is a bit trickier:

How it works, very roughly, is that you give the model some prompts, let it generate a few possible completions, then ask a human to rank how good the different completions are.

Then, you get your language model to try to learn how to predict the human's rankings...

Then, you get your language model to try to learn how to predict the human's rankings...

And then you do reinforcement learning on *that*, so the AI is trying to maximize how much the humans will like text it generates, based on what it learned about what humans like.

So that's ✨RLHF✨

And now we can go back to the tentacle monster!

So that's ✨RLHF✨

And now we can go back to the tentacle monster!

Now we know what all the words mean, the picture should make more sense. The idea is that even if we can build tools (like ChatGPT) that look helpful and friendly on the surface, that doesn't mean the system as a whole is like that. Instead...

...Maybe the bulk of what's going on is an inhuman Lovecraftian process that's totally alien to how we think about the world, even if it can present a nice face.

(Note that it's not about the tentacle monster being evil or conscious—just that it could be very, very weird.)

(Note that it's not about the tentacle monster being evil or conscious—just that it could be very, very weird.)

"But wait," I hear you say, "You promised cake!"

You're right, I did. And here's why—because the tentacle monster is *also* a play on a very famous slide by a very famous researcher.

You're right, I did. And here's why—because the tentacle monster is *also* a play on a very famous slide by a very famous researcher.

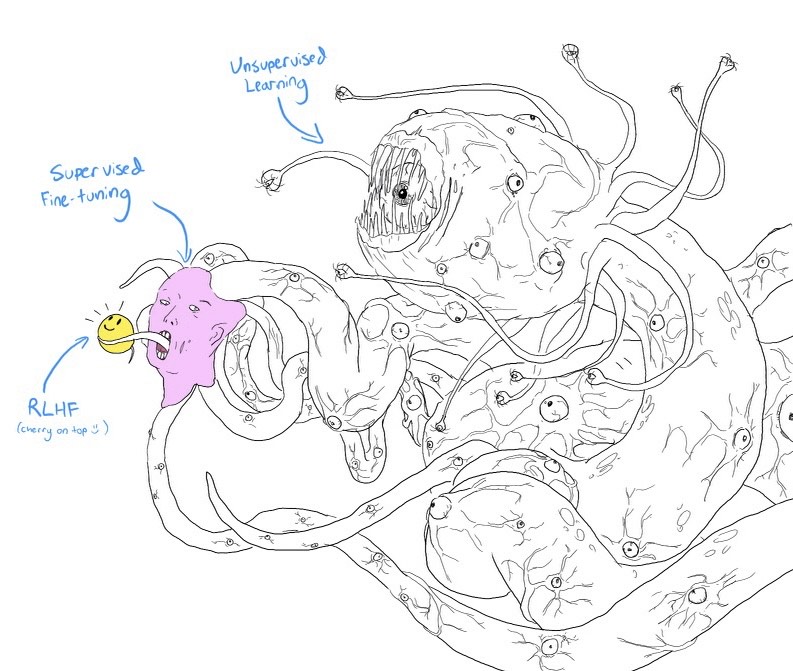

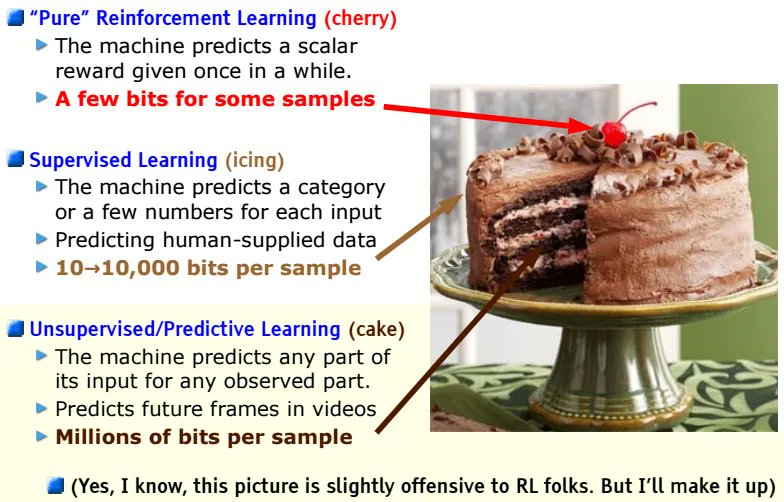

Back in 2016, Yann LeCun (Chief AI Scientist at FB) presented this slide at NeurIPS, one of the biggest AI research conferences.

Back then, there was a lot of excitement about RL as the key to intelligence, so LeCun was making a totally different point...

Back then, there was a lot of excitement about RL as the key to intelligence, so LeCun was making a totally different point...

...Namely, that RL was only the "cherry on top," whereas unsupervised learning was the bulk of how intelligence works.

To an AI researcher, the labels on the tentacle monster immediately recall this cake, driven home by the cheery "cherry on top :)"

END THREAD

To an AI researcher, the labels on the tentacle monster immediately recall this cake, driven home by the cheery "cherry on top :)"

END THREAD

A few post-thread "well, technically"s and sources:

[1] Technically LMs predict what "token" comes next, but a token is usually roughly a word, so, close enough

[2] Technically people often say "self-supervised learning" rather than "unsupervised learning" for LM pre-training..

[1] Technically LMs predict what "token" comes next, but a token is usually roughly a word, so, close enough

[2] Technically people often say "self-supervised learning" rather than "unsupervised learning" for LM pre-training..

...because you could think of "chunk of text"+"next word" as an input-output pair that is automatically present in the dataset—but I guess the tentacle monster artist went with "unsupervised" to match the cake.

[3] Unicorn story from openai.com/research/better-language-models ...

[3] Unicorn story from openai.com/research/better-language-models ...

...and tree poem example from astralcodexten.substack.com/p/janus-simulators

[4] The tentacle monster I used here is from

; I think the original idea is from

Thanks for reading!

[4] The tentacle monster I used here is from

; I think the original idea is from

Thanks for reading!

Mentions

See All

Yann LeCun @ylecun

·

Mar 4, 2023

Nice explanation.