Thread

The optimism in robotics research is absolutely incredible these days! I believe all the pieces we need for a “modern attempt at embodied intelligence” are ready. At recent talks, I pitched a potential recipe, and I’d like to share it with you.

Let’s break down the key points 🔑

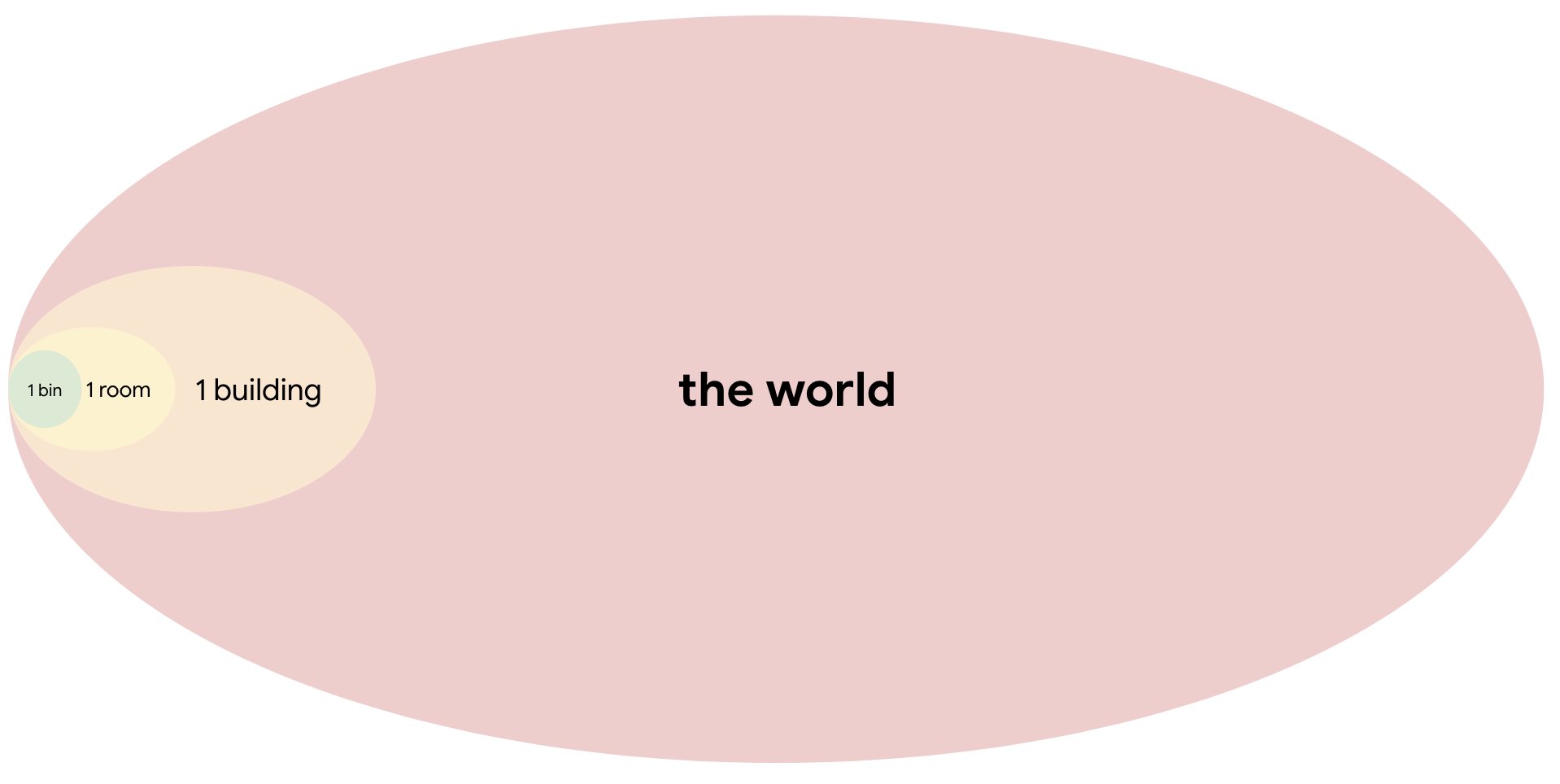

2) The first place to start might be to ask: why isn't robotics solved yet? The challenge is that even the most difficult robotics research settings are still many orders of magnitude less complex than the noise and chaos of the real world. How can we bridge this gap?

3) I propose that we’ll *have* to leverage the emergent capabilities of internet-scale models to make this huge leap from the lab to the wild world. Emergence as a phenomenon is so powerful; more is not just more, more is different.

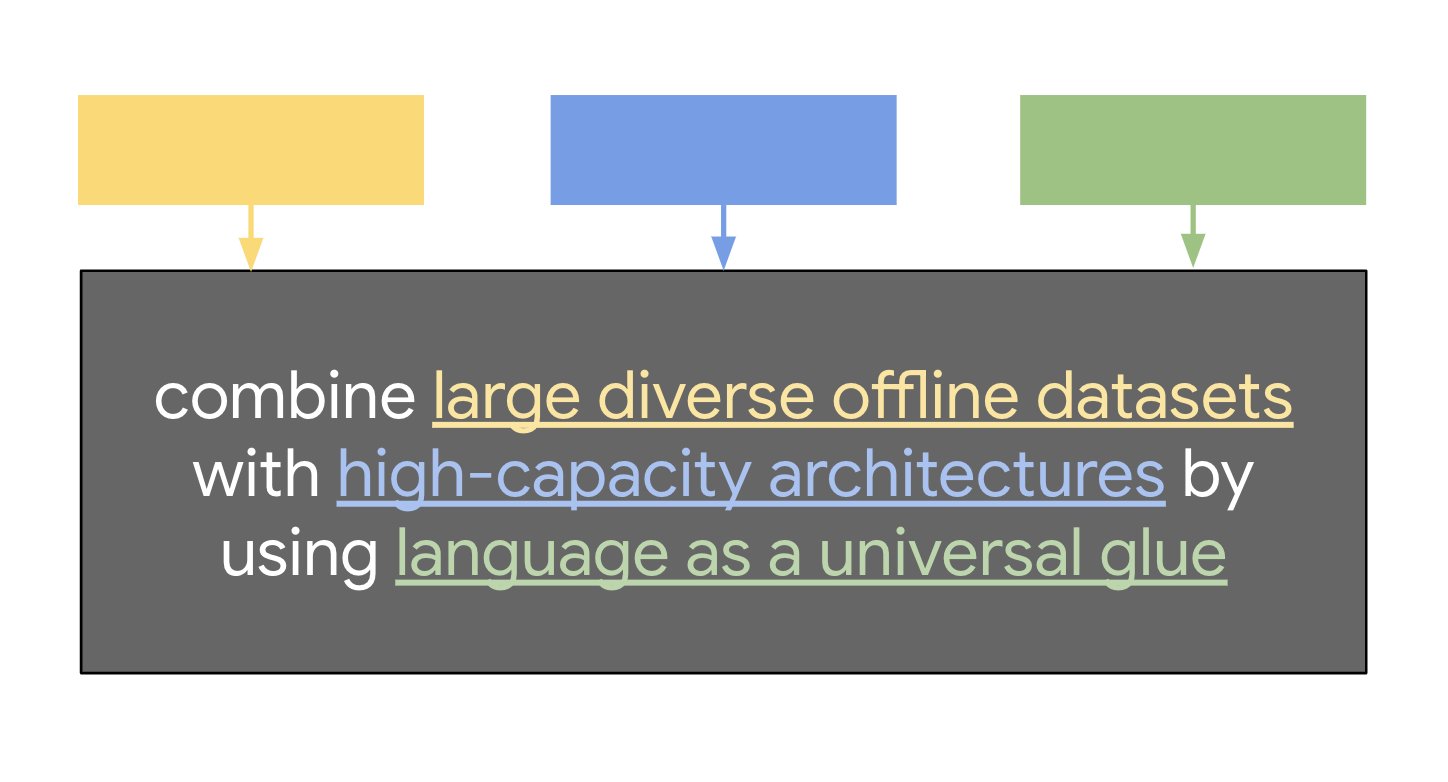

4) So, how do we build a robotics foundation model that will give us these emergent capabilities? Let’s leverage three important trends to come up with ingredients that will help us prepare a potential recipe for building a robotics foundation model.

5) Trend #1: Robotics has been moving from online methods to offline methods. This is quite a dramatic paradigm shift – robot learning was once synonymous with online reinforcement learning, but offline methods like imitation learning have been picking up steam.

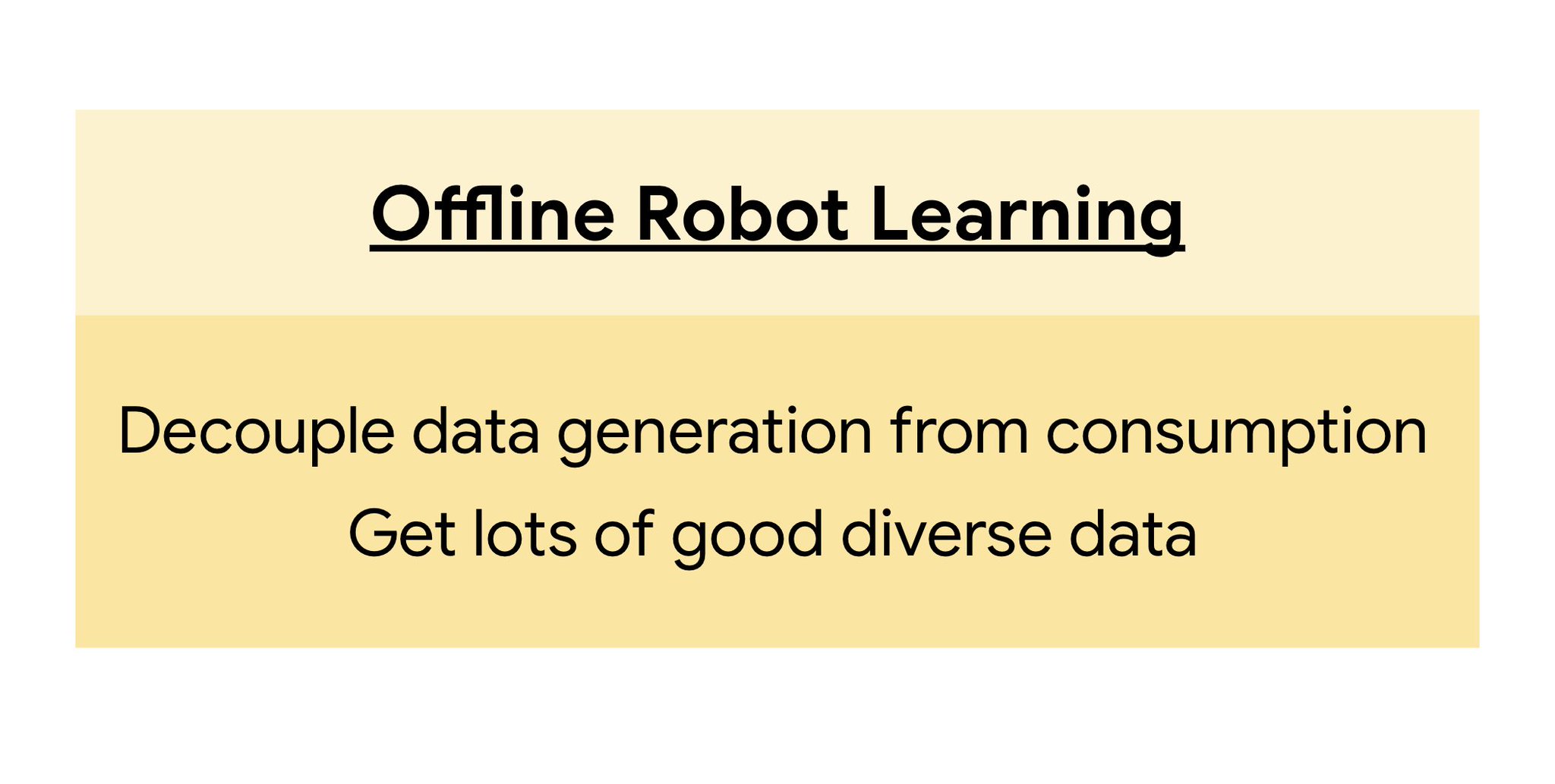

6) Ingredient #1: Let’s focus on separating the challenges of how to collect diverse robotic data and how to learn from that data. Traditionally, there has been a tight coupling between data generation and data consumption, but it seems that we can split this problem up!

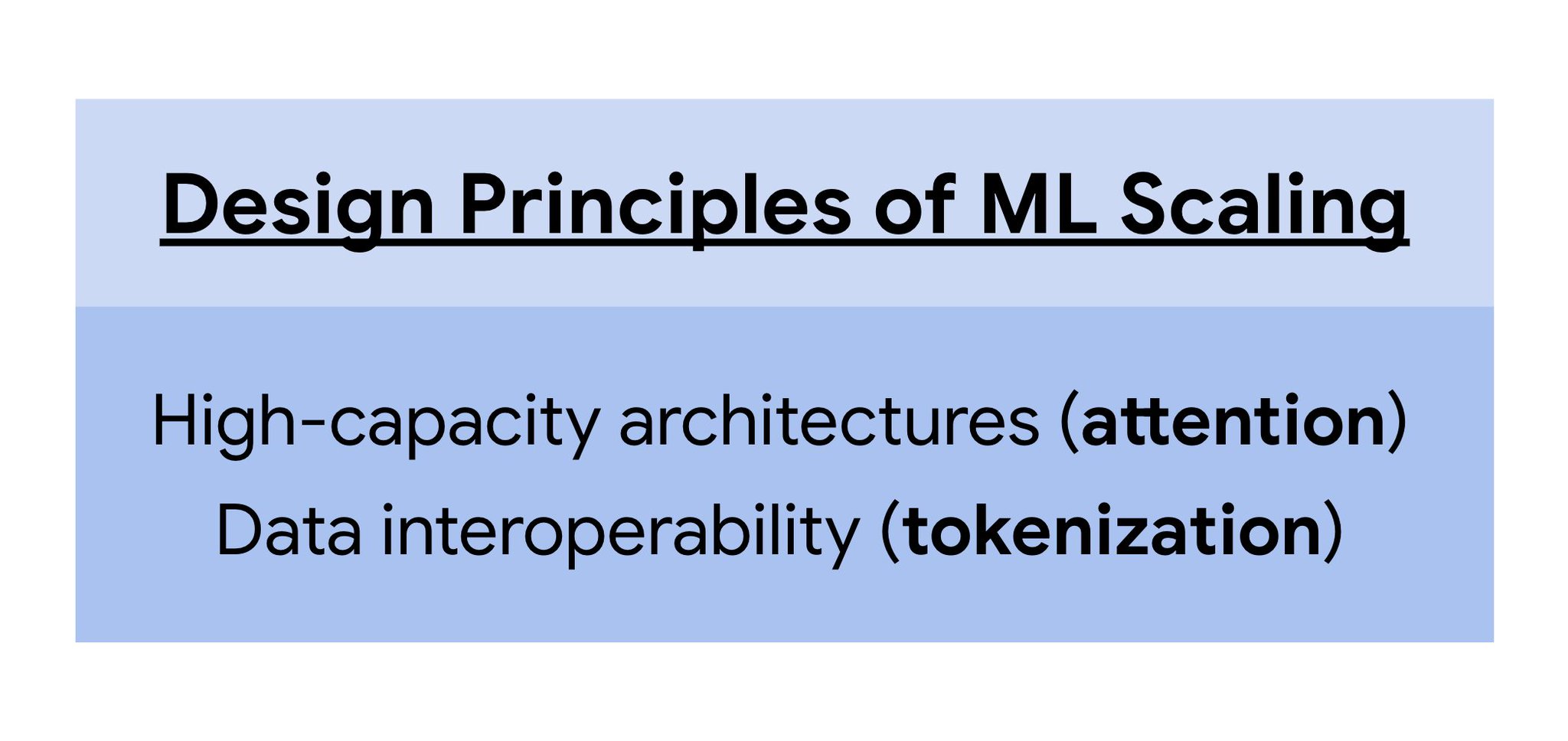
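To make the split concrete, here is a minimal sketch in which data generation and data consumption only share a fixed list of `(observation, action)` pairs. Everything in it is an assumption made for illustration: the toy observations, the noisy scripted demonstrator, and the tabular "most frequent action" learner standing in for a real imitation-learning method.

```python
import random
from collections import Counter, defaultdict


def collect_episodes(num_steps, seed=0):
    """Data generation: any source (teleop, scripts, old policies) can append transitions."""
    rng = random.Random(seed)
    actions = ["move_left", "move_right"]
    dataset = []
    for _ in range(num_steps):
        obs = rng.choice(["cup_left", "cup_right"])
        # Hypothetical demonstrator: moves toward the cup, acting randomly 10% of the time.
        expert = "move_left" if obs == "cup_left" else "move_right"
        action = expert if rng.random() > 0.1 else rng.choice(actions)
        dataset.append((obs, action))
    return dataset


def fit_behavior_cloning(dataset):
    """Data consumption: learn from the fixed dataset without touching the robot."""
    counts = defaultdict(Counter)
    for obs, action in dataset:
        counts[obs][action] += 1
    # Tabular policy: the most frequently demonstrated action per observation.
    return {obs: c.most_common(1)[0][0] for obs, c in counts.items()}


dataset = collect_episodes(num_steps=500)
policy = fit_behavior_cloning(dataset)
print(policy)
```

The learner never calls the collector, so either side can be swapped out (more robots, a bigger model) without changing the other.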

7) Trend #2: ML scaling has driven tremendous growth in AI.

Ingredient #2: Let’s stand on the shoulders of giants and use their best design principles: Transformers are general-purpose differentiable computers (@karpathy) and tokenization makes everything sequence modeling.
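As a rough illustration of "tokenization makes everything sequence modeling", continuous robot actions can be mapped to integer tokens by uniform binning. This is only a sketch: the `[-1, 1]` range, the 256 bins, and the function names are assumptions chosen for the example, not a description of any specific model's tokenizer.

```python
def tokenize_action(action, low=-1.0, high=1.0, num_bins=256):
    """Map each continuous action dimension to an integer token in [0, num_bins - 1]."""
    tokens = []
    for value in action:
        clipped = min(max(value, low), high)
        fraction = (clipped - low) / (high - low)
        # The upper bound itself falls into the last bin.
        tokens.append(min(int(fraction * num_bins), num_bins - 1))
    return tokens


def detokenize_action(tokens, low=-1.0, high=1.0, num_bins=256):
    """Map tokens back to the center of their bins."""
    width = (high - low) / num_bins
    return [low + (token + 0.5) * width for token in tokens]


action = [0.3, -0.7, 0.05]
tokens = tokenize_action(action)
recovered = detokenize_action(tokens)
print(tokens, recovered)
```

Once actions are tokens, predicting the next action looks like predicting the next word, at the cost of a quantization error of at most half a bin width per dimension.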

8) Trend #3: Foundation models themselves have gotten better, and they’ve gotten better faster.

Ingredient #3: Leverage “Bitter Lesson 2.0” and work on methods that scale with foundation models. Use language as the universal API.

9) Combining the ingredients together suggests one approach for a modern attempt at embodied intelligence. These ideas have shaped a lot of my own research, and I’m excited to see how these trends evolve in the future!

10) I’ll note a few brief examples of recent work from my team and how they tie in with this recipe.

RT-1 (robotics-transformer.github.io) uses Ingredient #1 and Ingredient #2 by training a Transformer behavior cloning (BC) policy on a large offline demonstration dataset with discretized actions.
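With discretized actions, behavior cloning reduces to classification: the policy outputs one set of logits per action dimension, and training minimizes cross-entropy against the demonstrated bins. The sketch below shows that loss for a single timestep in plain Python. It is an illustrative assumption about the general shape of such an objective, not RT-1's actual training code, and `action_token_loss` is a hypothetical name.

```python
import math


def action_token_loss(logits_per_dim, target_tokens):
    """Mean cross-entropy over action dimensions for one timestep."""
    total = 0.0
    for logits, target in zip(logits_per_dim, target_tokens):
        # Numerically stable log-sum-exp for the softmax normalizer.
        max_logit = max(logits)
        log_norm = max_logit + math.log(sum(math.exp(x - max_logit) for x in logits))
        total += log_norm - logits[target]
    return total / len(target_tokens)


# Two action dimensions with four bins each (tiny numbers, for readability only).
uniform = [[0.0, 0.0, 0.0, 0.0], [0.0, 0.0, 0.0, 0.0]]
confident = [[5.0, 0.0, 0.0, 0.0], [0.0, 0.0, 5.0, 0.0]]
targets = [0, 2]
print(action_token_loss(uniform, targets), action_token_loss(confident, targets))
```

A policy that puts most of its probability on the demonstrated bins gets a lower loss than one that guesses uniformly.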

11) SayCan (say-can.github.io) and Inner Monologue (innermonologue.github.io) leverage Ingredient #3 to utilize LLMs for robotic planning. By expressing plans and reasoning in language we import common sense zero-shot from increasingly better LLMs.
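One way to picture language-based planning is to score each candidate skill twice: how useful the LLM thinks it is for the instruction, and how likely the robot is to succeed at it right now. The sketch below multiplies the two and picks the best skill. All numbers and the `choose_skill` helper are made up for illustration; a real system would get these scores from an LLM and from learned value functions.

```python
def choose_skill(llm_scores, affordances):
    """Pick the skill with the highest (LLM usefulness x feasibility) product."""
    combined = {
        skill: llm_scores[skill] * affordances.get(skill, 0.0)
        for skill in llm_scores
    }
    best = max(combined, key=combined.get)
    return best, combined


# Hypothetical scores for the instruction "I spilled my drink, can you help?"
llm_scores = {"find a sponge": 0.5, "pick up the sponge": 0.4, "go to the table": 0.1}
# Hypothetical feasibility estimates: no sponge is in view yet, so picking one up is unlikely.
affordances = {"find a sponge": 0.9, "pick up the sponge": 0.1, "go to the table": 0.8}

best, combined = choose_skill(llm_scores, affordances)
print(best)
```

Here the language model alone slightly prefers the first two skills, and the feasibility term rules out picking up a sponge that is not yet visible.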

12) DIAL (instructionaugmentation.github.io) uses Ingredient #1 and Ingredient #3 by using VLMs to perform data augmentation on language labels of offline datasets. VLMs use language to convey internet-scale semantics and concepts to existing datasets.
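A minimal sketch of this kind of label augmentation: score candidate instructions against each episode and keep the ones that match well as extra labels. The scorer here is a toy word-overlap function standing in for a real VLM, and the dataset fields, threshold, and function names are all assumptions for illustration rather than DIAL's actual pipeline.

```python
def toy_vlm_score(episode, instruction):
    """Stand-in for a VLM: fraction of instruction words found in the episode's tags."""
    words = instruction.lower().split()
    return sum(word in episode["tags"] for word in words) / len(words)


def augment_labels(dataset, candidates, score_fn, threshold=0.5):
    """Attach every sufficiently well-matching candidate instruction to each episode."""
    augmented = []
    for episode in dataset:
        labels = [episode["instruction"]]
        for text in candidates:
            if text not in labels and score_fn(episode, text) >= threshold:
                labels.append(text)
        augmented.append({**episode, "instructions": labels})
    return augmented


# Hypothetical offline episode with one original label and some visual tags.
dataset = [{"instruction": "pick up the can", "tags": {"pick", "coke", "can", "red"}}]
candidates = ["pick coke can", "pick red can", "open the drawer"]

augmented = augment_labels(dataset, candidates, toy_vlm_score)
print(augmented[0]["instructions"])
```

The robot data itself is untouched; only the language side of the dataset grows.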

13) More work related to these ingredients is coming out very soon! But, I hope this initial recipe for a robotics foundation model excites you (or at the very least, intrigues you) 🙂

If this thread was informative, please Like/Retweet the first Tweet.

14) Each of these works was the culmination of huge collaborations; I feel so privileged to work w/ brilliant colleagues (too many to list) but some are:

@hausman_k @svlevine @Kanishka_Rao @JonathanTompson @Yao__Lu @brian_ichter @xf1280 @keerthanpg @YevgenChebotar @SirrahChan!
