Thread by Tim Kietzmann

- Feb 9, 2023

- #Cognitivescience #Neuroscience

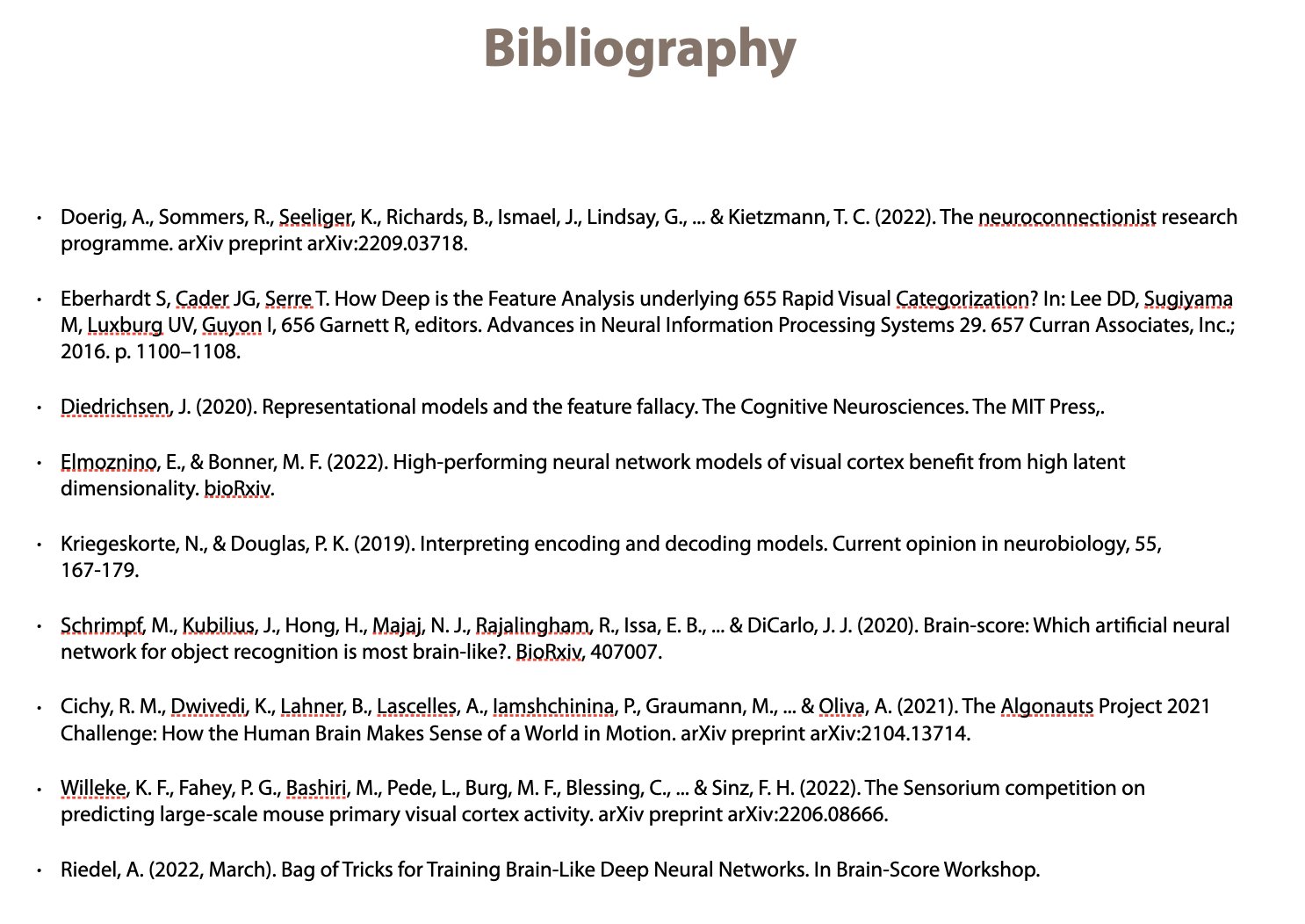

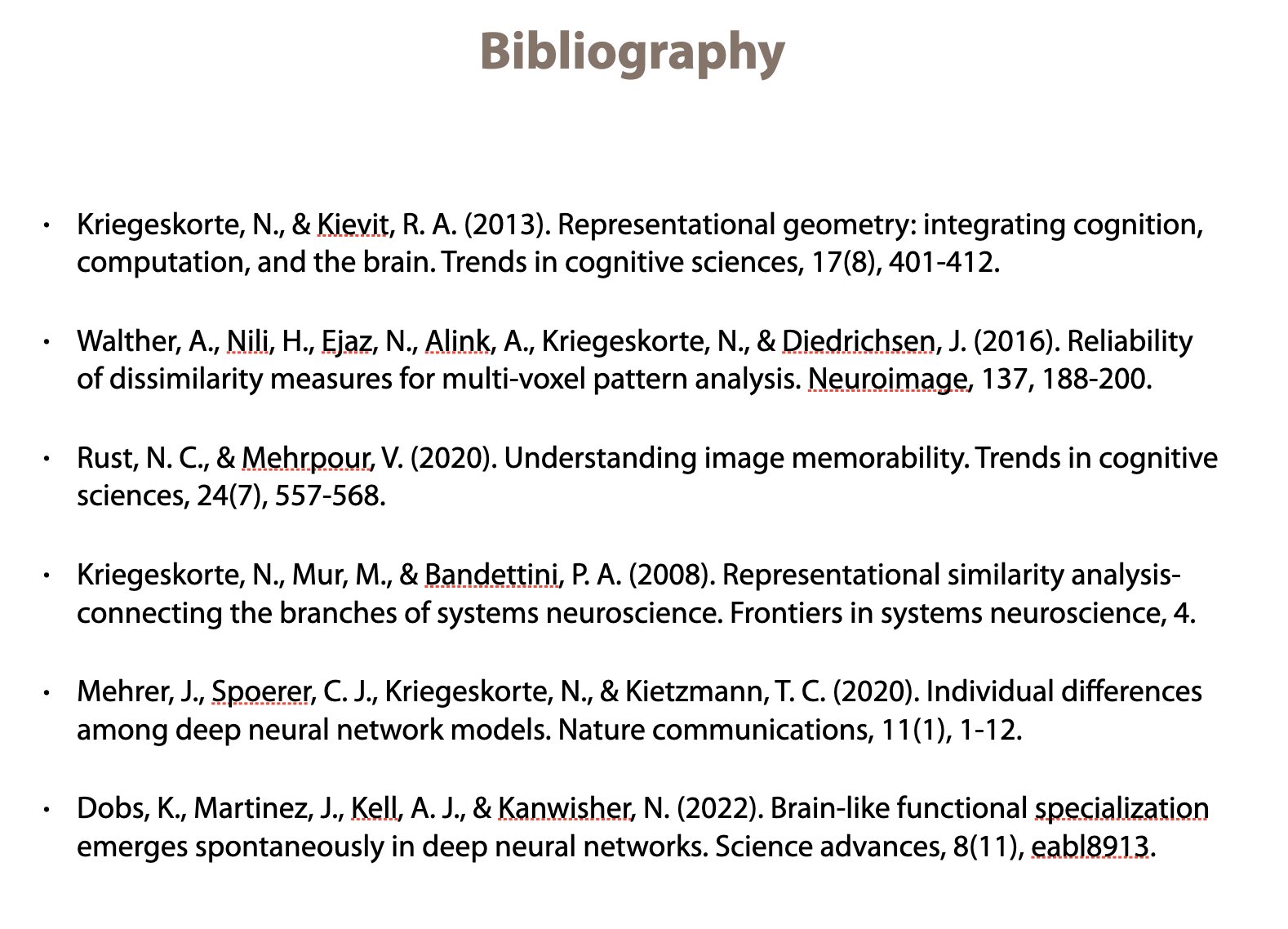

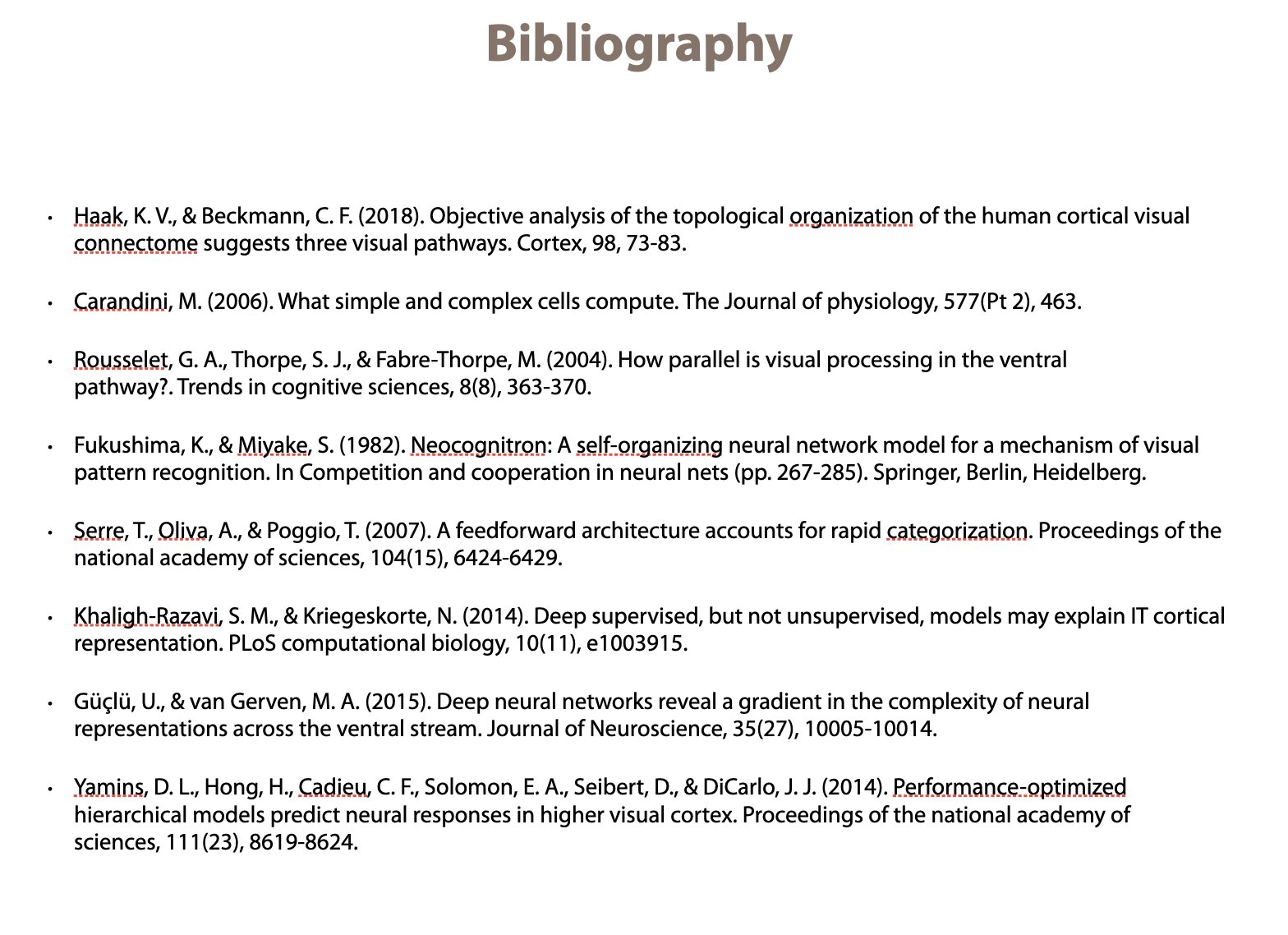

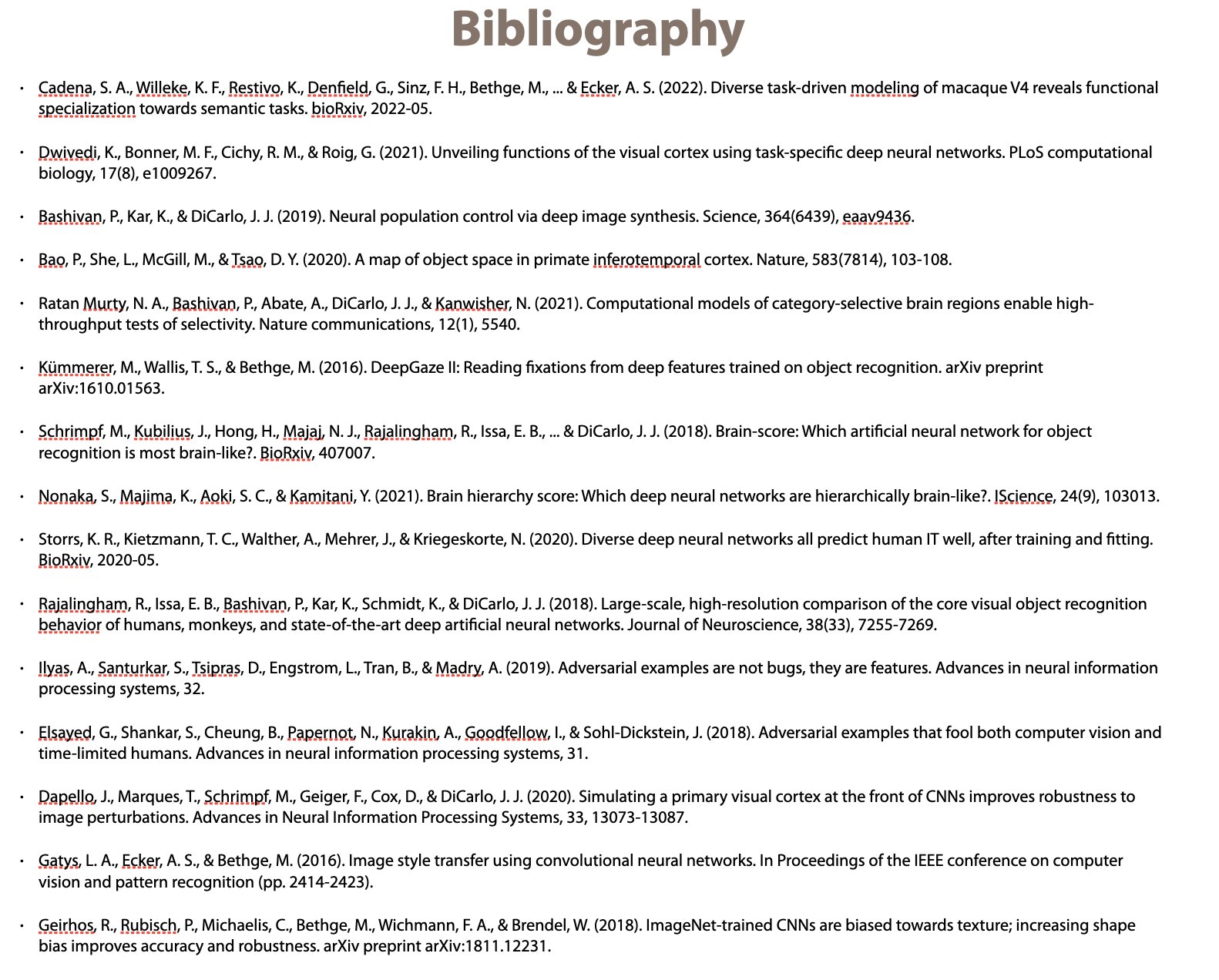

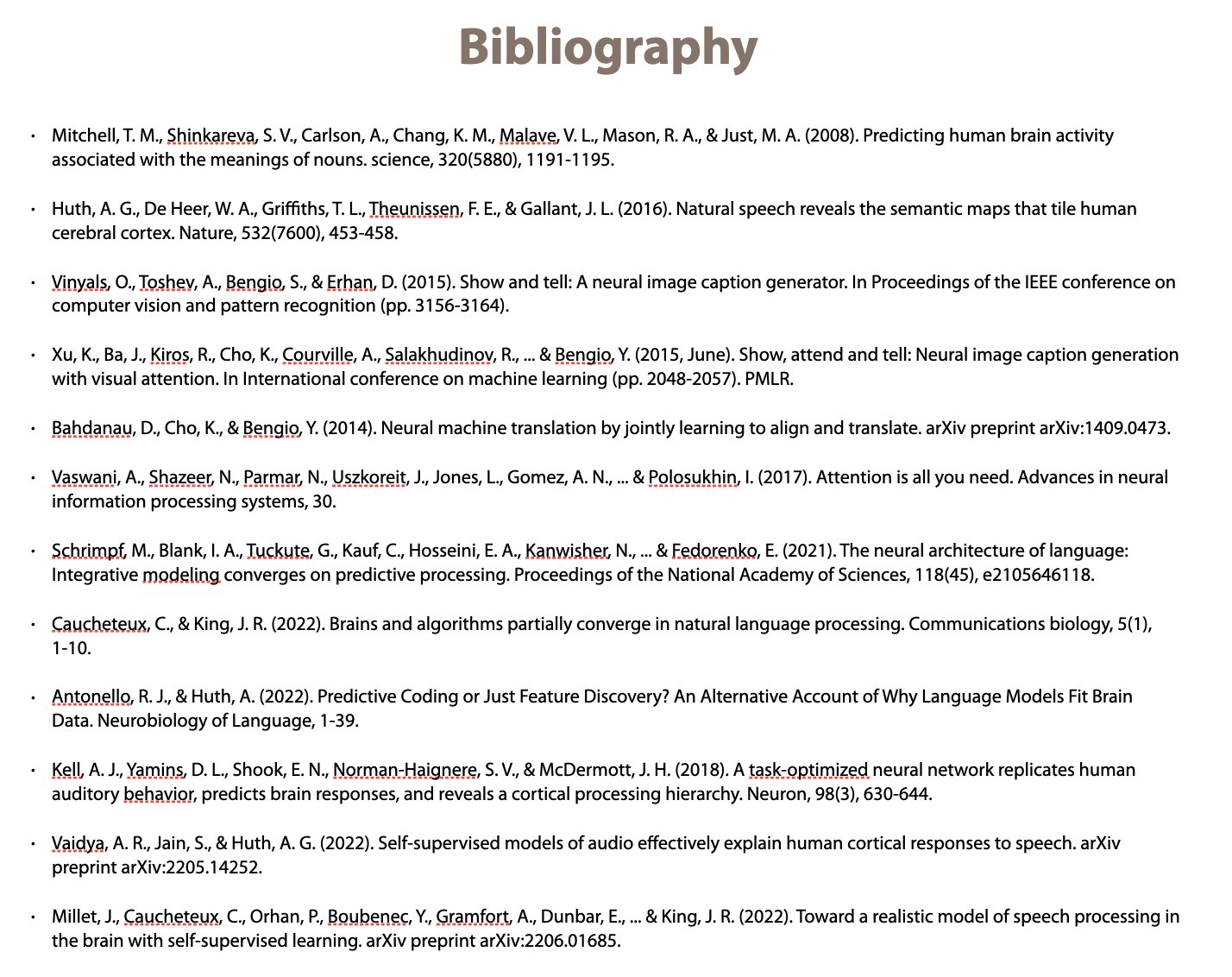

Just finished my course “Machine Learning for Cognitive Computational Neuroscience”. Across 12 lectures (90 minutes each) and 10 workgroup sessions, we covered >100 papers (46% published in the past two years). The students (and I) learned a lot.

Here is what we covered: 1/

Lecture 1: we talked about modelling in general. Why do we need models? Why are biological and behavioural replication by themselves insufficient? We also had a first look at features of ANNs and why they are suitable models. bg reading by @KriegeskorteLab, @tyrell_turing 2/

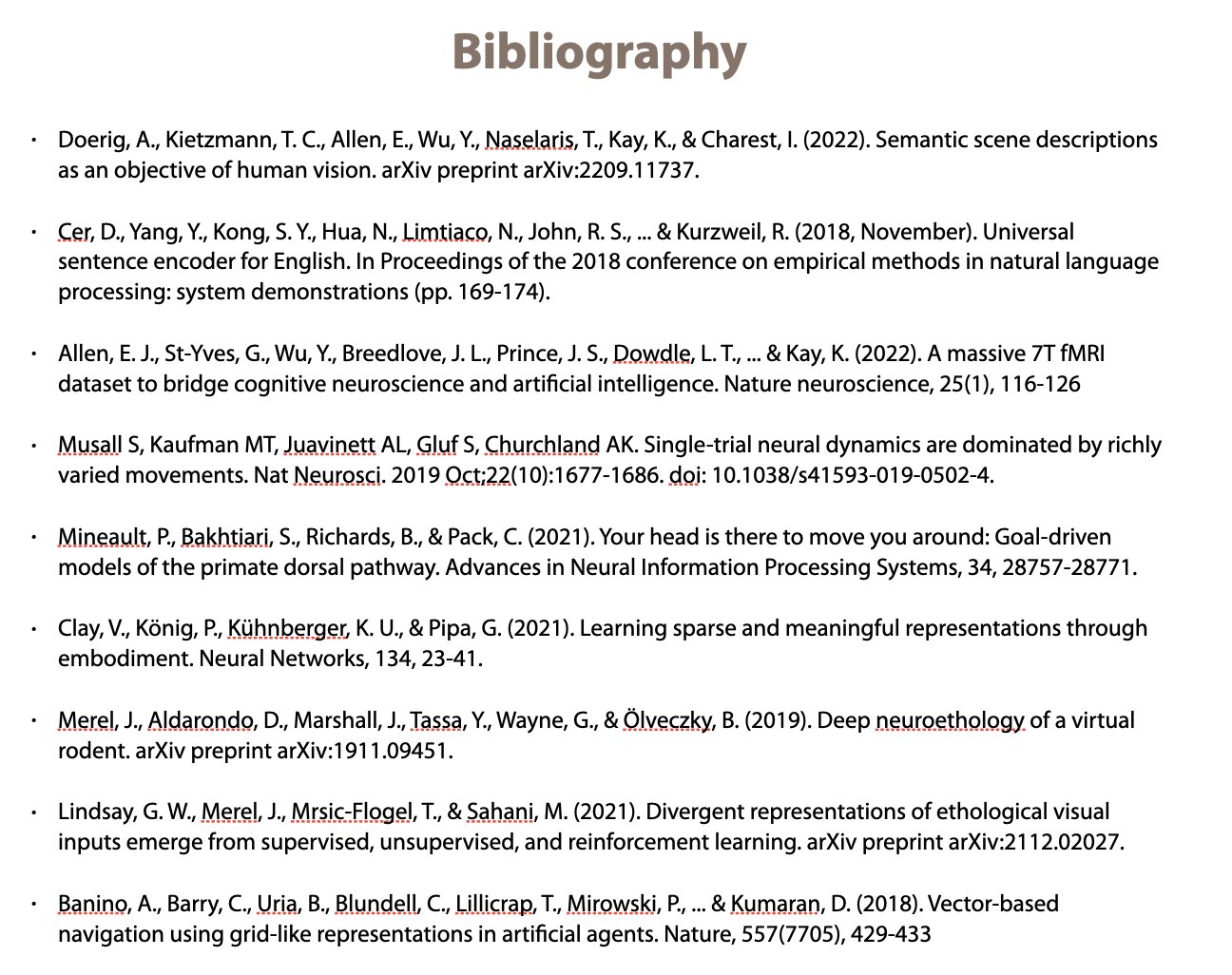

Lecture 2: the neuroconnectionist research programme (#NCRP) and the underlying research cycle of modelling, prediction, and verification. We also started looking at tools: diagnostic readouts and encoding models. bg reading by @AdrienDoerig and our ORE paper 3/

In lecture 3, we took an in-depth look at RSA: The general idea, properties of various distance measures, noise ceilings, applications for brain-model comparisons and in-silico comparisons of ANN representations. Background reading by @SaxeLab and @KriegeskorteLab+@rogierK 4/
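To make the general idea of RSA concrete, here is a minimal sketch, not the course material itself. It assumes correlation distance for the representational dissimilarity matrices (RDMs) and a Spearman correlation to compare them; both are just one common choice among the distance measures mentioned above. The data are random toy arrays, and all names (`rdm`, `upper_triangle`, `spearman`, `rsa_score`, `model`, `brain`) are hypothetical.

```python
import numpy as np

def rdm(patterns):
    """Correlation-distance RDM: 1 - Pearson r between condition patterns (rows)."""
    return 1.0 - np.corrcoef(patterns)

def upper_triangle(matrix):
    """Vectorise the off-diagonal upper triangle of a square RDM."""
    rows, cols = np.triu_indices(matrix.shape[0], k=1)
    return matrix[rows, cols]

def spearman(a, b):
    """Spearman correlation as Pearson on ranks (assumes no tied values)."""
    rank_a = np.argsort(np.argsort(a))
    rank_b = np.argsort(np.argsort(b))
    return np.corrcoef(rank_a, rank_b)[0, 1]

def rsa_score(patterns_x, patterns_y):
    """Compare two systems by rank-correlating their RDMs."""
    return spearman(upper_triangle(rdm(patterns_x)),
                    upper_triangle(rdm(patterns_y)))

# Toy data (assumption): 20 conditions, 50 model units, 80 "voxels".
rng = np.random.default_rng(0)
model = rng.normal(size=(20, 50))
# Simulated brain data: a random linear readout of the model plus noise.
brain = model @ rng.normal(size=(50, 80)) + rng.normal(size=(20, 80))

print("model vs. brain RDM correlation:", rsa_score(model, brain))
```

Note that the two systems need the same conditions (rows) but not the same number of units, which is what makes RDMs convenient for brain-model and model-model comparisons. Noise ceilings are not covered by this sketch.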

Lecture 4: normative modelling. We discussed that systems optimise their feature selectivity for their evolutionary niche. We looked at primate ventral stream selectivity, and did a history deep-dive: neocognitron, LeNet, hmax, AlexNet. bg reading by @dyamins and @anilananth 5/

Lecture 5: “glass half empty/full”, i.e. we talked about successes and issues of supervised (feedforward) models. Progress in e.g. explaining variance in neural data, but also data req., shape/texture, BH-score, error patterns and adversarial attacks. bg reading by @tserre /6

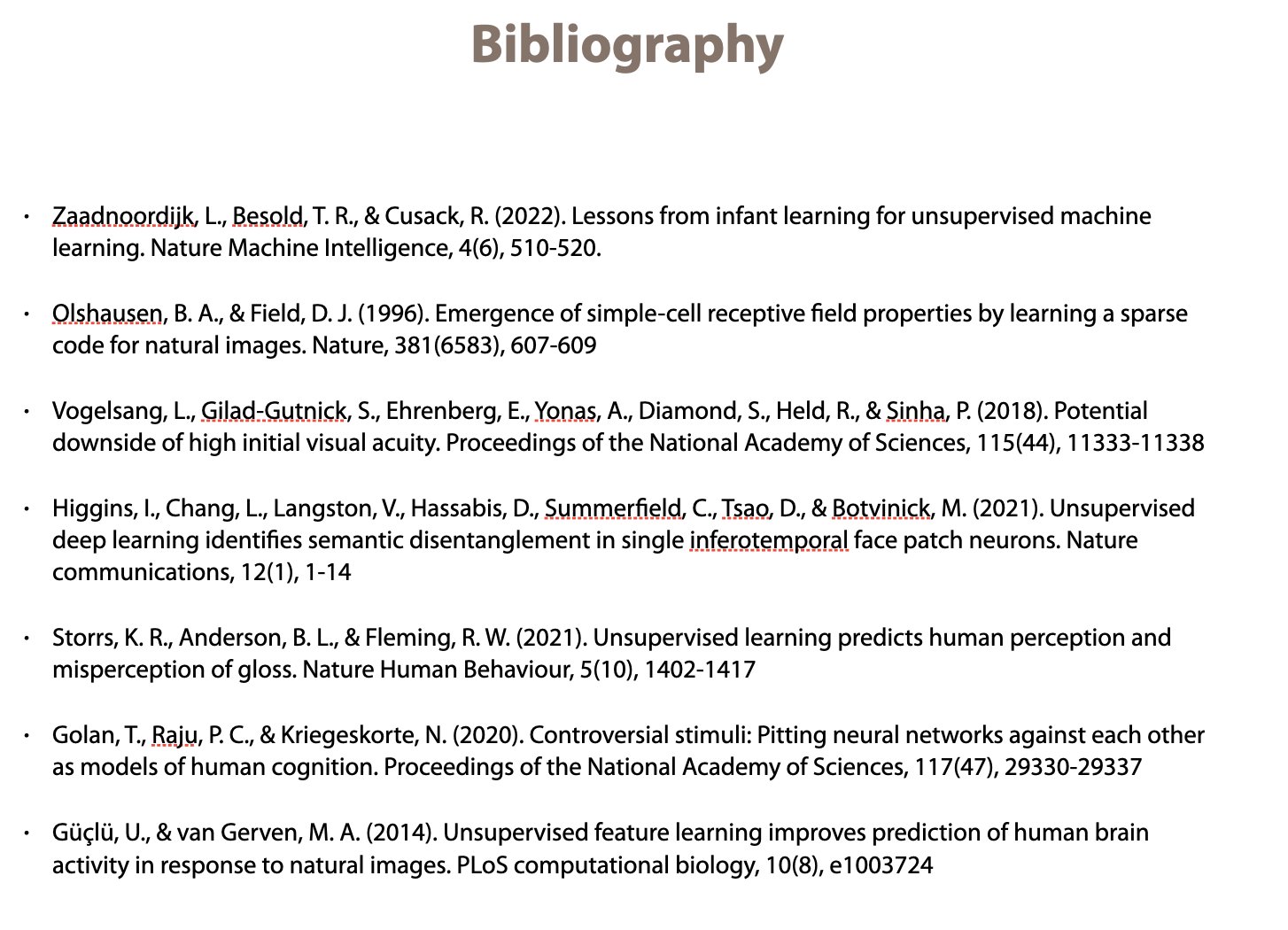

Lecture 6: lessons from cognitive development and unsupervised learning (generative models, including VAEs). VAE applications in predicting macaque face patch data, human perception of glossiness, and their role in controversial stimuli. bg reading: @LorijnSZ, Higgins /7

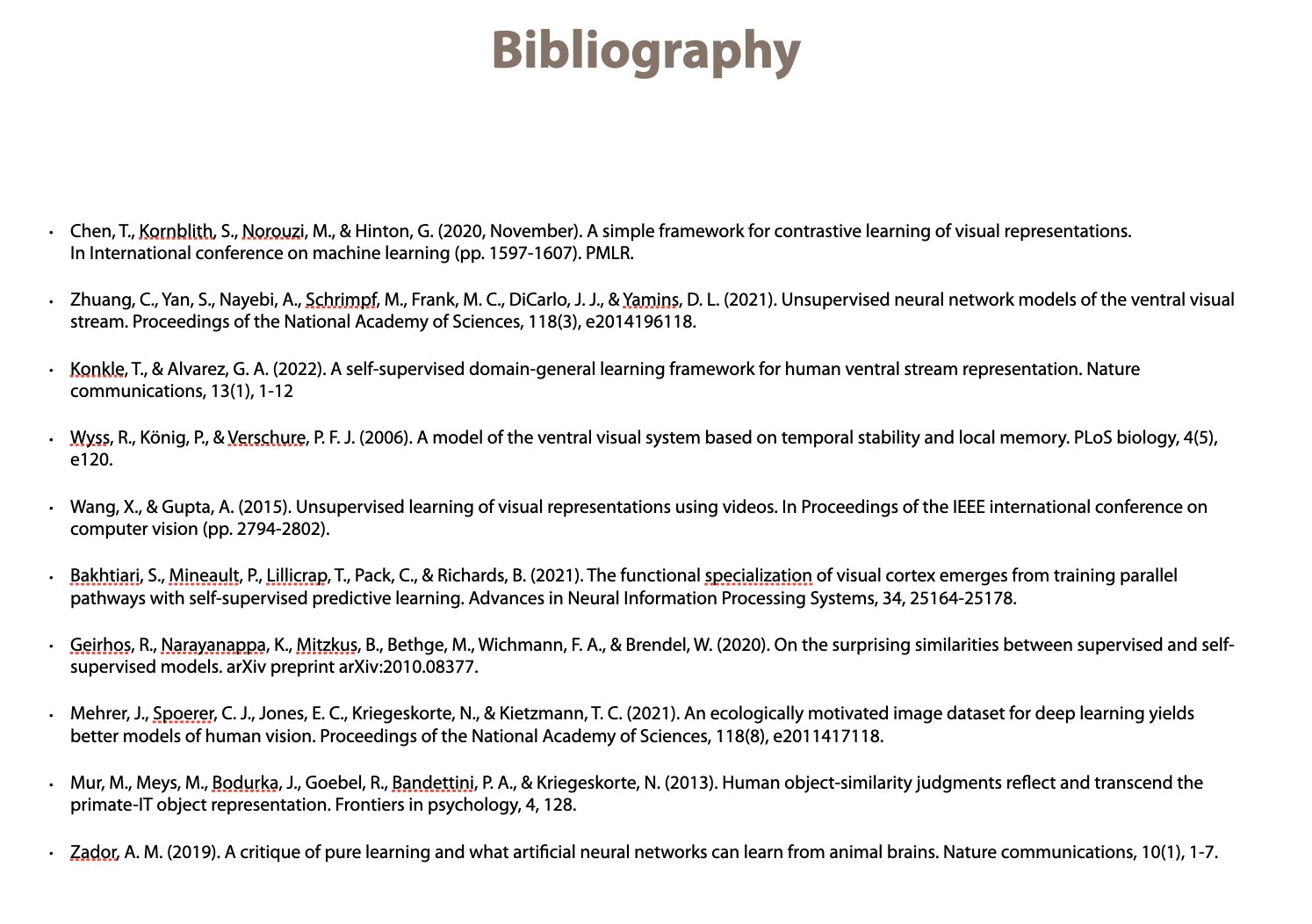

Lecture 7: self-supervised learning. SimCLR, local aggregation, CPC, and comparisons to brain data. Also input statistics (e.g. ecoset), and discussions on parametric complexity, innateness, direct-fit to nature and double descent. bg reading: @TonyZador & @Uri_Hasson /8

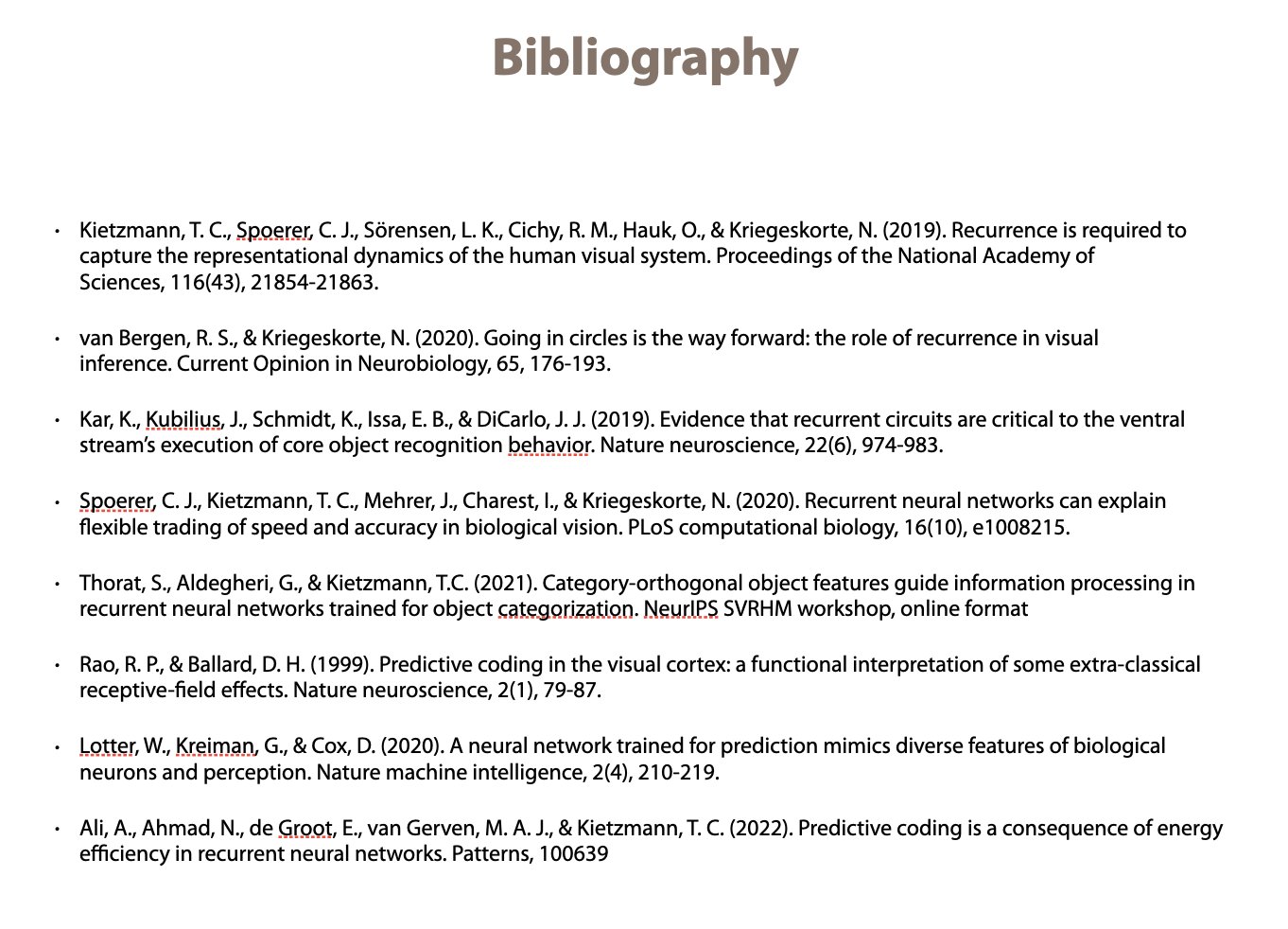

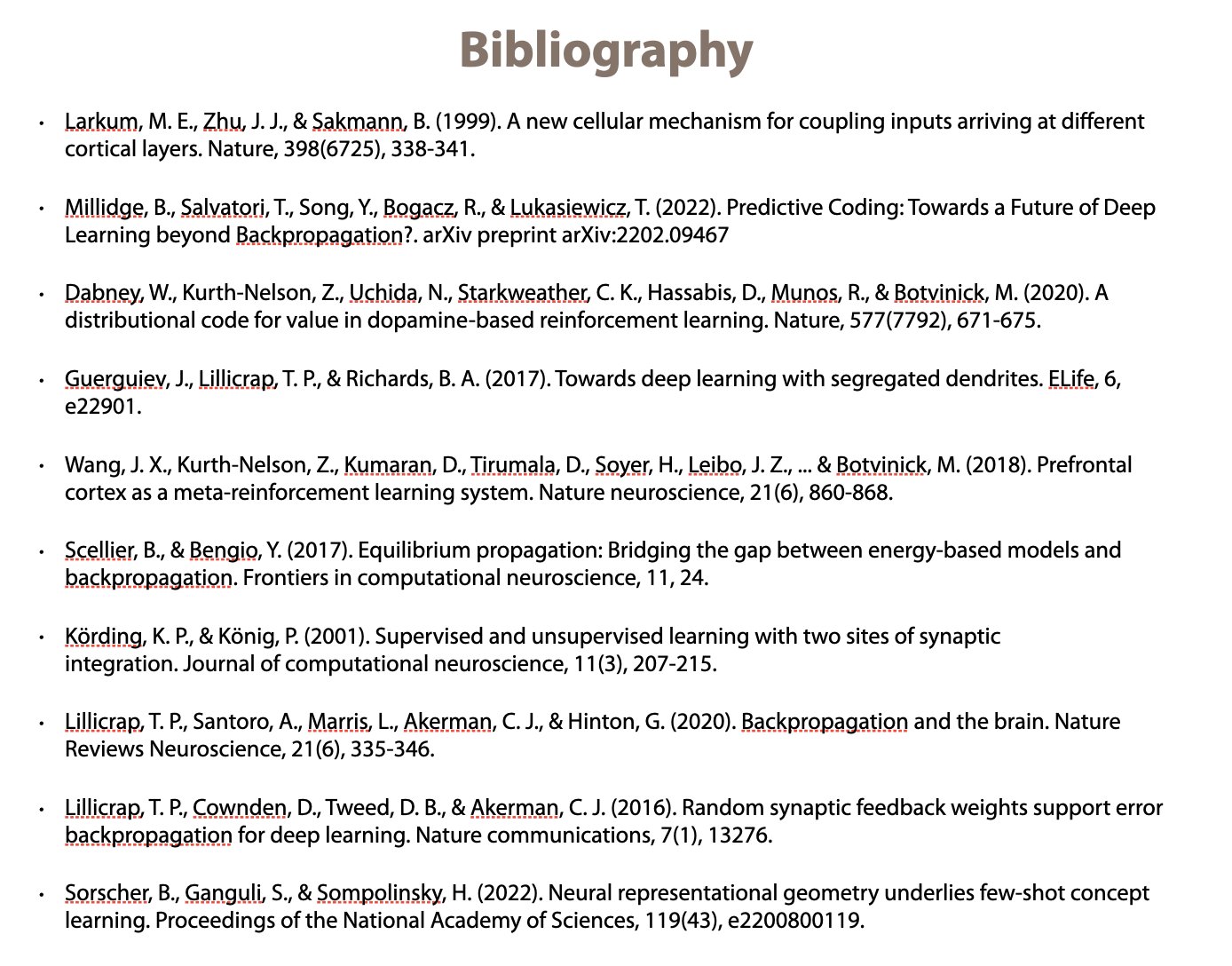

Lecture 8, recurrence. We talked about ventral stream dynamics, temporal unrolling and BPTT, and predictive coding. I included some of our own work (Spoerer et al., @martisamuser, @hellothere_ali), and great work by @KohitijKar. bg reading by @rubenvanbergen /9

Lecture 9: language modelling. Started w Mitchell et al. (2008), moved to word2vec, and on to attention and transformers. We saw how they compare against human fMRI, ECoG, and MEG data, and finished by looking at models of auditory cortex. bg reading: @martin_schrimpf /10

Lecture 10: embodiment. We talked about non-instructed movements, as well as reinforcement learning agents (obstacle tower challenge, virtual rodents) and the (sparse) representations that emerge. Finished by looking at the emergence of grid-cells. bg reading: @AndreaBanino /11

Lecture 11: elephant in the room: is backpropagation biologically plausible? We discussed the problems and ways in which biology could solve them. We then looked at ways to learn from fewer data: few-shot learning, and meta-learning. bg reading: @janexwang and @countzerozzz /12

Lecture 12: general summary of all lecture topics on a meta-level to connect them. Also: winners of our meme-contest (but more on that later).

I am very excited about this emerging field and hope that some of my excitement will have inspired the students along the way! /fin

Important addendum: these stellar TAs were absolutely essential to the course: Sabine Scholle, Victoria Bosch (@__init_self), Philip Sulewski (@PhilipSulewski), Mathis Pink (@MathisPink), and Natalia Scharfenberg. I could not be happier with your performance.