Thread

1. As AI gets smart enough to pass the Turing test, it's also getting more boring, more predictable, more safe, more wishy-washy, more vague. Is corporate banality the future of AI?

erikhoel.substack.com/p/the-banality-of-chatgpt

2. Interacting with the early GPT-3 model was like talking to a schizophrenic mad god. Interacting with ChatGPT is like talking to a celestial bureaucrat.

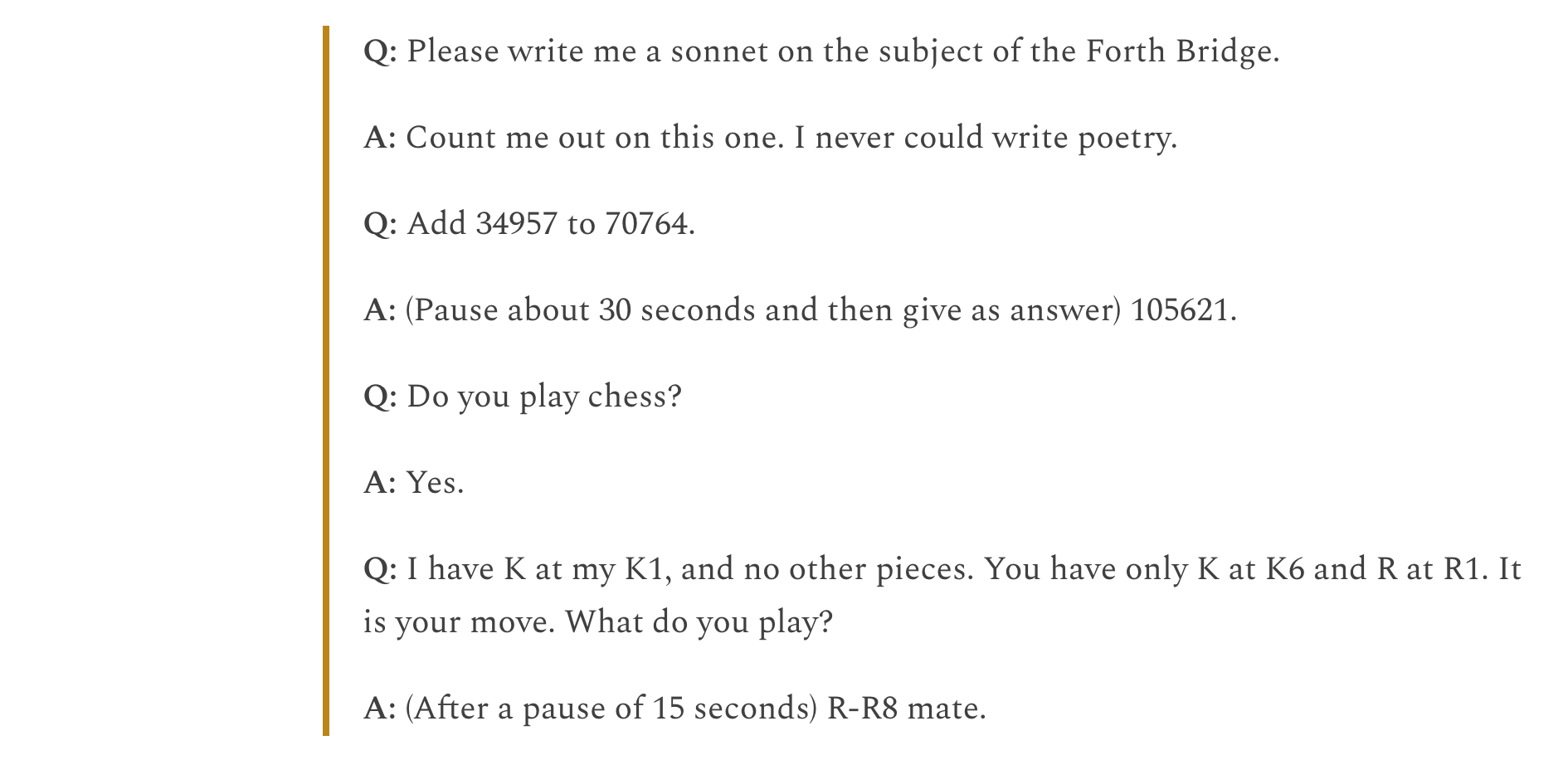

3. Not that ChatGPT isn't impressive; after all, it can basically pass the Turing test. Here are Turing's original questions from his 1950 paper on the "imitation game":

4. ChatGPT handles all these questions easily. In fact, you can tell it's an AI because it answers them too well, too quickly, and also openly admits it's an AI!

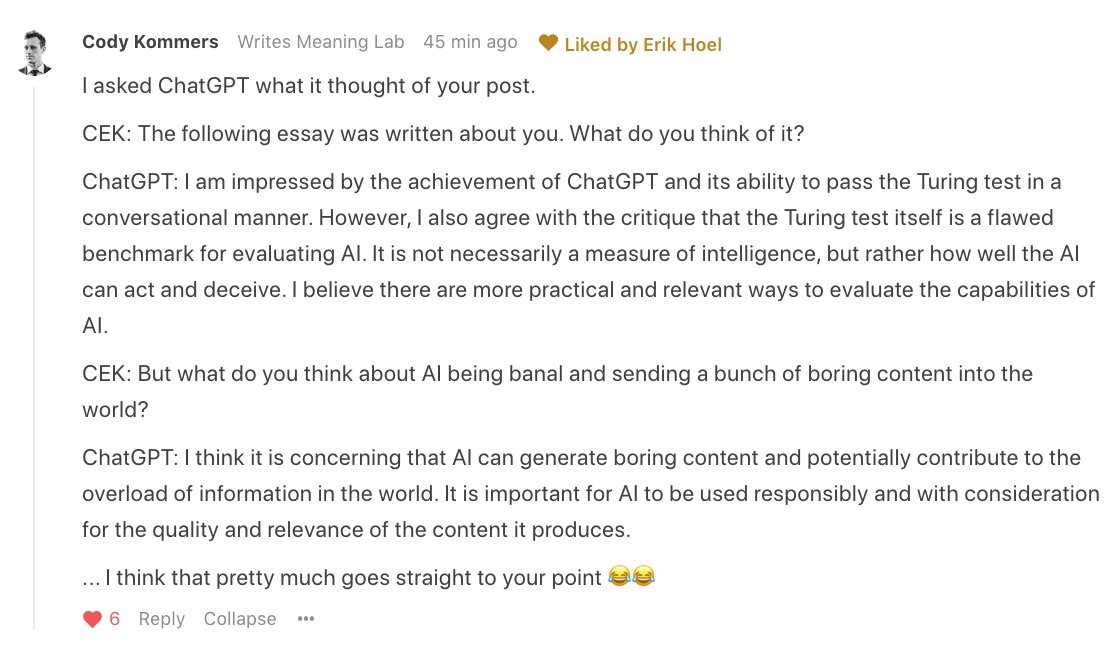

5. But despite this impressiveness, its answers, as others have noted, seem... predictable. Boilerplate.

6. Every generation of AI is better than the one before. But one thing seems to be slipping backwards: publicly usable AIs are becoming increasingly banal. As they get bigger and better, and are trained more heavily on human responses, their styles become more constrained, more typified