Thread

A lot of time and effort goes into improving the accuracy of Machine Learning models.

Despite scoring their best in evaluation, models still fail in production.

More often than you'd expect, it points to a problem called Data Leakage.

A thread...

A loooong time ago...

1/13

~1200 B.C., besieged City of Troy~

Trojans of Troy halted the enemy advance for 10 years. Trojan defense, impregnable!

The invading Greek army failed to breach the city walls. Victory evaded the anxious Greeks.

To break the deadlock, the Greeks hatched a deadly plan!

2/13

Sticking to the plan, the Greek army constructed a giant wooden horse and hid a few men inside it.

They left the horse ashore and pretended to sail away.

"Non-obvious", hiding in plain sight - a perfect decoy!

3/13

"Good Lord, the Greeks are gone!"

"The splendid horse is a gift of victory from the Gods. We must honor it!"

The unsuspecting Trojans of Troy rejoiced!

The "Gift" was painstakingly brought inside the gates.

Unaware that their defense now had a "LEAK".

4/13

That night, the hidden men crept out of the horse.

They opened the gates for the rest of the Greek army.

The fatal 'gift' took the 'hosts' by surprise.

Thus, the Trojan war came to an end.

A "spectacular fall" for the Trojans.

A victory for the Greeks.

.

.

.

Back to 2022!

5/13

A non-obvious leak led to the spectacular fall of the Trojans on the battlefield.

In the ML world,

A model that performed well in training can fail spectacularly on its own battlefield: production.

Why? A leak into training data could be the culprit.

What is a Data Leak in ML?

6/13

Say a model is trained to classify a spooky cat.

· info (cat tie) got "leaked" into train data.

· model deemed it as one of the predictors.

· this info (tie) isn't available in prod.

Result: Error in prod.

Unlike here, most data leaks in the real world are "non-obvious"!

7/13
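To make the cat example concrete, here is a minimal sketch in plain Python. Everything in it is hypothetical: the `whiskers` and `tie` features, the 70% signal strength, and the one-feature "model" (`fit_stump`) are stand-ins chosen for illustration, not a real classifier.

```python
import random

def make_rows(n, tie_leaks, seed):
    """Hypothetical data: 'whiskers' is a weak real signal, 'tie' is the leaked one."""
    rng = random.Random(seed)
    rows = []
    for _ in range(n):
        label = rng.randint(0, 1)
        # Real but noisy signal: agrees with the label about 70% of the time.
        whiskers = label if rng.random() < 0.7 else 1 - label
        # Leaked signal: copies the label in training, absent (always 0) in prod.
        tie = label if tie_leaks else 0
        rows.append(({"whiskers": whiskers, "tie": tie}, label))
    return rows

def fit_stump(rows):
    """Pick the single feature that matches the label most often on these rows."""
    features = rows[0][0].keys()
    return max(features, key=lambda f: sum(x[f] == y for x, y in rows))

def accuracy(feature, rows):
    """Share of rows where the chosen feature equals the label."""
    return sum(x[feature] == y for x, y in rows) / len(rows)

train = make_rows(1000, tie_leaks=True, seed=0)
prod = make_rows(1000, tie_leaks=False, seed=1)

chosen = fit_stump(train)            # the model latches onto the leaked feature
train_acc = accuracy(chosen, train)  # looks perfect during evaluation
prod_acc = accuracy(chosen, prod)    # close to a coin flip once the tie is gone
print(chosen, train_acc, prod_acc)
```

The stump ignores the weaker but genuine `whiskers` signal because the leaked `tie` looks better in training, which is exactly why the drop only shows up in prod.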

· A real world example

If CT scans (with "Lab name" printed on them) are used to train a model to diagnose a disease:

· 'lab name' is deemed to be a predictor.

· what if new scans carry a different name, or none?

Result: Incorrect diagnosis on new scans in prod.

8/13
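One rough way to spot this kind of leak before training is to check whether a piece of metadata nearly determines the label on its own. The sketch below assumes made-up `(lab_name, diagnosis)` records and an arbitrary 0.9 threshold; `label_purity` is a hypothetical helper, not a library function.

```python
from collections import Counter, defaultdict

# Hypothetical scan metadata: (lab_name, diagnosis). Not real data.
records = [
    ("Lab A", "disease"), ("Lab A", "disease"), ("Lab A", "disease"),
    ("Lab A", "healthy"),
    ("Lab B", "healthy"), ("Lab B", "healthy"), ("Lab B", "healthy"),
    ("Lab B", "healthy"),
]

def label_purity(records):
    """For each metadata value, the share taken by its most common label."""
    by_value = defaultdict(Counter)
    for value, label in records:
        by_value[value][label] += 1
    return {
        value: counts.most_common(1)[0][1] / sum(counts.values())
        for value, counts in by_value.items()
    }

purity = label_purity(records)
# Flag values whose label is nearly fixed; 0.9 is an arbitrary cut-off.
suspicious = [value for value, share in purity.items() if share >= 0.9]
print(purity, suspicious)
```

A flagged value is only a hint: it may reflect a real pattern, but it is worth asking whether that field will exist, and mean the same thing, in prod.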

· Checkpoint

Leaking data into training -

· allows model to cheat and adjust its predictions based on leaked data.

When such data isn't available in prod -

· model performance takes a hit.

Leaked data can also be:

· future info

· stats based on future or test info

9/13

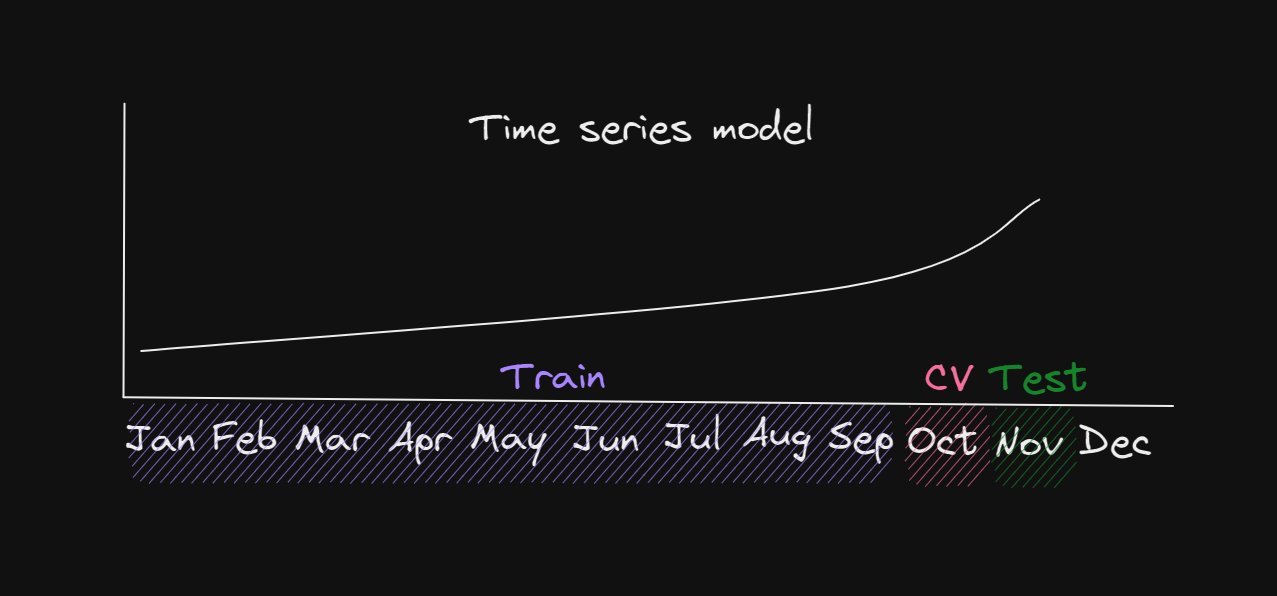

· Time-series

Time-correlated data like stock prediction.

Info from future is leaked into training process:

· when data is split randomly, future info ends up in train set.

· model adjusts its predictions based on this info.

Always split train/cv/test sets by time!

10/13
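A split by time can be sketched in a few lines of plain Python. The daily `(day, price)` rows and the `split_by_time` helper are assumptions made for illustration; the point is only that rows are sorted by timestamp and cut in order, never sampled at random.

```python
import random
from datetime import date, timedelta

# Hypothetical daily observations: (day, price). Values are made up.
start = date(2022, 1, 1)
rows = [(start + timedelta(days=i), 100 + i) for i in range(10)]
random.Random(0).shuffle(rows)  # pretend the data arrived out of order

def split_by_time(rows, n_cv, n_test):
    """Sort by timestamp, then cut: oldest -> train, middle -> cv, newest -> test."""
    ordered = sorted(rows, key=lambda r: r[0])
    n_train = len(ordered) - n_cv - n_test
    train = ordered[:n_train]
    cv = ordered[n_train:n_train + n_cv]
    test = ordered[n_train + n_cv:]
    return train, cv, test

train, cv, test = split_by_time(rows, n_cv=2, n_test=2)

# Every training day is strictly earlier than every cv day, and cv precedes test.
print(train[-1][0], cv[0][0], test[0][0])
```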

· Scaling Data splits

Test info is leaked into training process:

· if scaling stats (mean/variance) are calculated over the entire dataset, test split included.

· model adjusts its predictions based on test info.

Split data before scaling.

Use stats from train split to scale all splits.

11/13
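Here is a small sketch of "split first, then scale" using only the standard library. The numbers are invented, and the `scale` helper is a hypothetical stand-in for whatever scaler you actually use; the only thing it shows is that the mean and standard deviation come from the train split alone.

```python
from statistics import mean, pstdev

# Hypothetical feature values; the last two form the test split.
values = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 100.0]
train, test = values[:6], values[6:]  # split BEFORE computing any stats

# Scaling stats come from the train split only.
mu = mean(train)
sigma = pstdev(train)

def scale(xs):
    """Standardize with the train-split mean and standard deviation."""
    return [(x - mu) / sigma for x in xs]

train_scaled = scale(train)
test_scaled = scale(test)  # test reuses train stats; it never contributes to them

# The leaky alternative: stats over all data let the test outlier shift the mean.
leaky_mu = mean(values)
print(mu, leaky_mu)
```

The gap between `mu` and `leaky_mu` is the test information that would otherwise have seeped into training.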

· Conclusion

You saw:

· What Data Leaks mean in ML.

· Why they degrade model performance in Production.

· A few ways to deal with common data leaks.

I hope that helps you gain intuition on data leaks!

And you're better equipped to pre-empt leaks than the Trojans ;)

12/13

Thanks for reading!

If you like this thread, kindly leave a like on the first tweet and follow me @farazmunshi for more on ML and AI.

See you!

13/13

Afiz ⚡️ @itsafiz · Dec 13, 2022

Great Thread and very informative.