Thread by Boris Dayma

- Tweet

- Apr 19, 2022

- #ArtificialIntelligence #Art

Thread

Finally took the time to read DALLE 2 paper.

Process:

- text to CLIP image (no need to go through CLIP text)

- CLIP image to pixels

Here are my notes and how I could apply it to dalle-mini 👇

Process:

- text to CLIP image (no need to go through CLIP text)

- CLIP image to pixels

Here are my notes and how I could apply it to dalle-mini 👇

I'm not entirely sure that you need to go through CLIP image embeddings but the authors report a greater diversity of images by doing it.

Maybe the prior does not use a conditioning scale while the decoded does.

Maybe the prior does not use a conditioning scale while the decoded does.

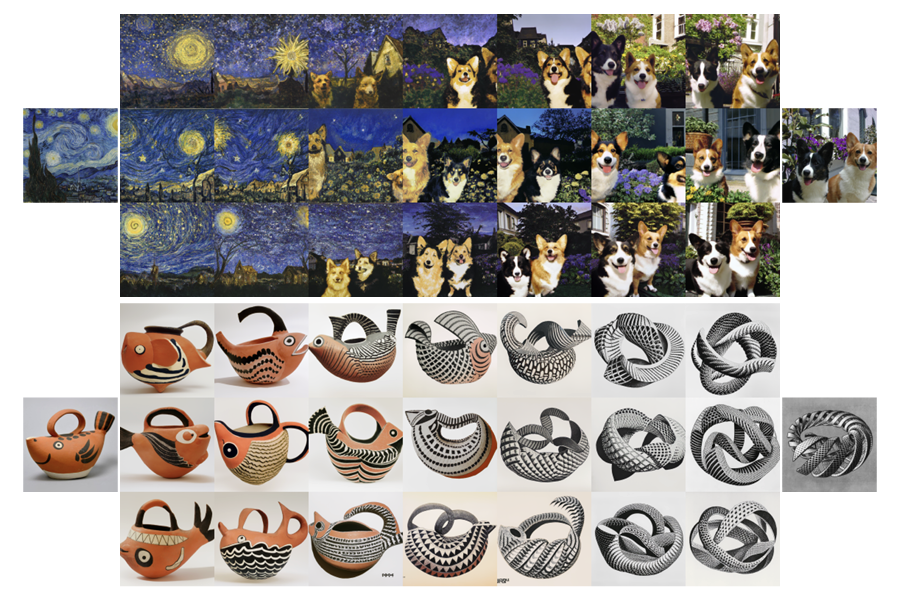

The advantage of using CLIP image embedding is for doing interpolation with another image.

I could do it the opposite (image to text embedding of my model) but not sure interpolation of text embedding is as good as image embedding.

I could do it the opposite (image to text embedding of my model) but not sure interpolation of text embedding is as good as image embedding.

I think a lot of the quality comes from the diffusion models which also modify the underlying image quite a lot but preserves main details.

That's why why you see high similarity between images from a same prompt. The prior either does inference once or gives similar outputs.

That's why why you see high similarity between images from a same prompt. The prior either does inference once or gives similar outputs.

What is nice is the upscaler uses mainly convolutions, no attention, so they can apply it to any resolution.

My concern has often been training time which they address by training on smaller crops and inference time. The prior also reportedly trains faster as diffusion than AR.

My concern has often been training time which they address by training on smaller crops and inference time. The prior also reportedly trains faster as diffusion than AR.

I understand diffusion requires less parameters but always thought it was magnitudes slower at inference. Is that right?

The issue with autoregressive models is that you can only decode 1 token at a time during inference.

The issue with autoregressive models is that you can only decode 1 token at a time during inference.

My other issue is that CLIP is not trained specifically for this problem (which is also cool though!)

Maybe training a specific diffusion auto-encoder for images + a prior to go from text to latent of that model could improve results.

Maybe training a specific diffusion auto-encoder for images + a prior to go from text to latent of that model could improve results.

There's a lot more in the paper with plenty of interesting ideas and still many parts I don't understand.

It will probably be like DALLE 1 where I discover something new each time I read the paper.

It will probably be like DALLE 1 where I discover something new each time I read the paper.

As far as how can I use it for dalle-mini, there are many things to explore:

- I could correct VQGAN errors with a diffusion upscaler model

- I could change my architecture to follow more closely this model

For now, let's try to finish replicating DALLE 1!

- I could correct VQGAN errors with a diffusion upscaler model

- I could change my architecture to follow more closely this model

For now, let's try to finish replicating DALLE 1!