Thread by Xiaojian Ma

- Feb 6, 2023

- #ArtificialIntelligence #ComputerProgramming

Describe, Explain, Plan and Select: Interactive Planning with Large Language Models Enables Open-World Multi-Task Agents

Can ChatGPT help with open-world game playing, like Minecraft? It sure can! 🧵👇

arxiv.org/abs/2302.01560

github.com/CraftJarvis (code will be shipped soon)

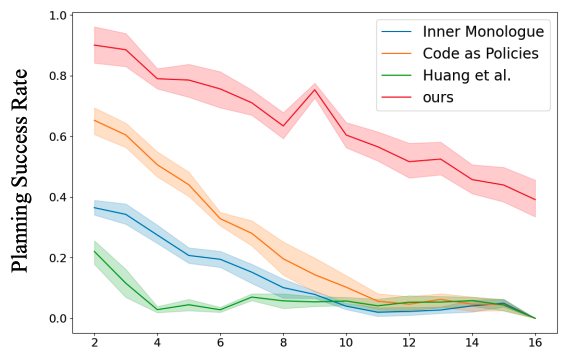

(1/x) SayCan (by @xf1280 @hausman_k @wenlong_huang and other great folks at Brain Robotics) has shown the planning power of LLMs. It would be interesting to see how it can be transferred to more open-world game playing. We tried that, but it didn't work that well. Why?

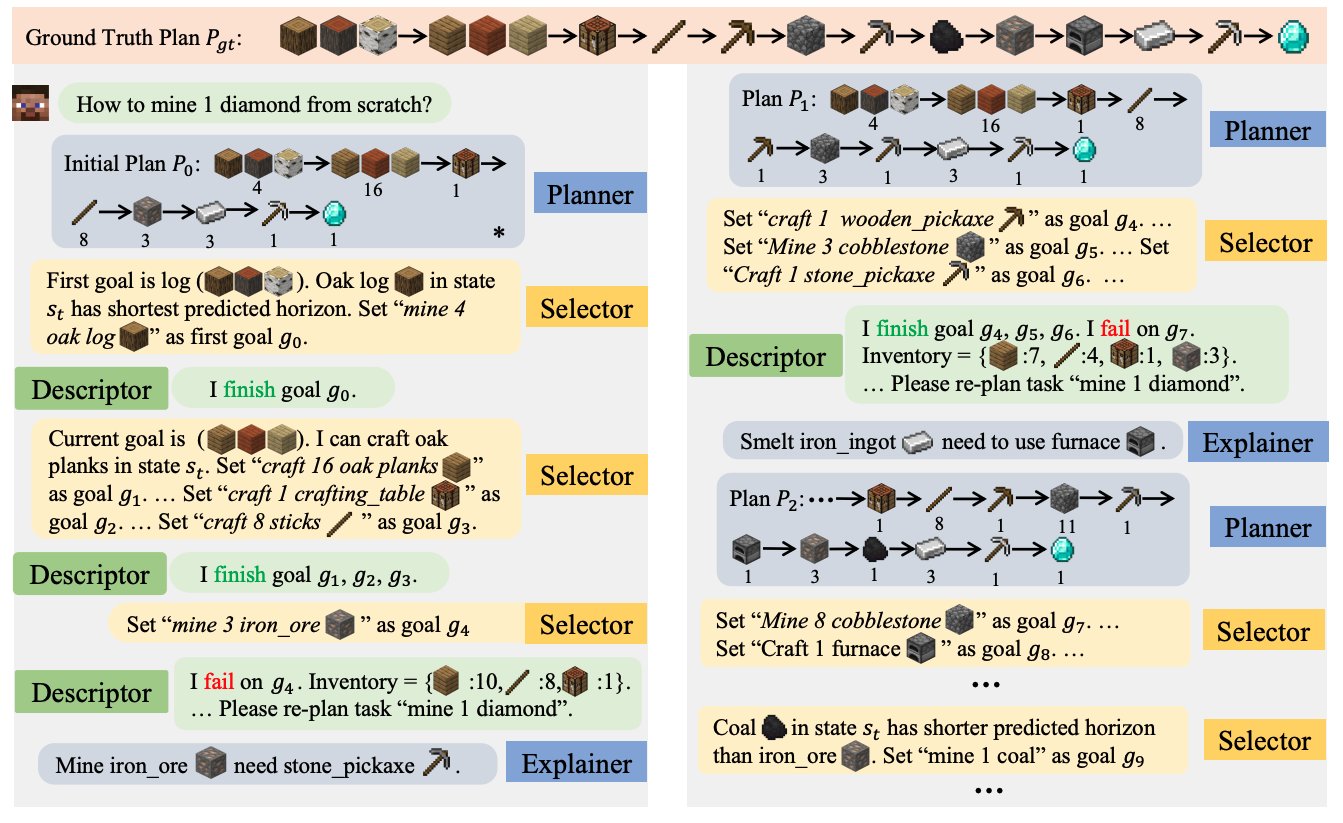

(2/x) The idea is simple: w/o visuals, ChatGPT and other LLMs do not always generate the optimal plan for the current situation. We propose a **selector**, or "goal model", that determines the optimal subgoal to take (e.g. hunt sheep or chop tree) via 🔥self-supervised🔥 horizon prediction
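
A toy sketch of what "selecting a subgoal by predicted horizon" could look like, assuming the selector simply prefers the candidate with the fewest predicted remaining steps. The function name `select_subgoal` and the horizon numbers are hypothetical and only for illustration; the actual goal model in the paper is learned and may score candidates differently.

```python
from typing import Dict


def select_subgoal(predicted_horizon: Dict[str, float]) -> str:
    """Return the candidate subgoal with the smallest predicted horizon.

    `predicted_horizon` maps each candidate subgoal to an estimate of how
    many steps remain until it is completed from the current observation.
    In this sketch the estimates are supplied by hand (an assumption); a
    real system would get them from a learned predictor.
    """
    if not predicted_horizon:
        raise ValueError("no candidate subgoals to choose from")
    return min(predicted_horizon, key=predicted_horizon.get)


# Hypothetical horizons (in steps) for the two subgoals named in the thread.
candidates = {"hunt sheep": 120.0, "chop tree": 35.0}
chosen = select_subgoal(candidates)
print(chosen)
```

With these made-up numbers the tree is predicted to be much closer than the sheep, so the sketch picks "chop tree" first.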

(3/x) It turns out that this can be crucial in open-world environments with partial and dynamic observations! Of course, gearing up your prompt with chain-of-thought, program templates and few-shot demos helps too. Here is a dialogue during the mission to diamonds💎💎

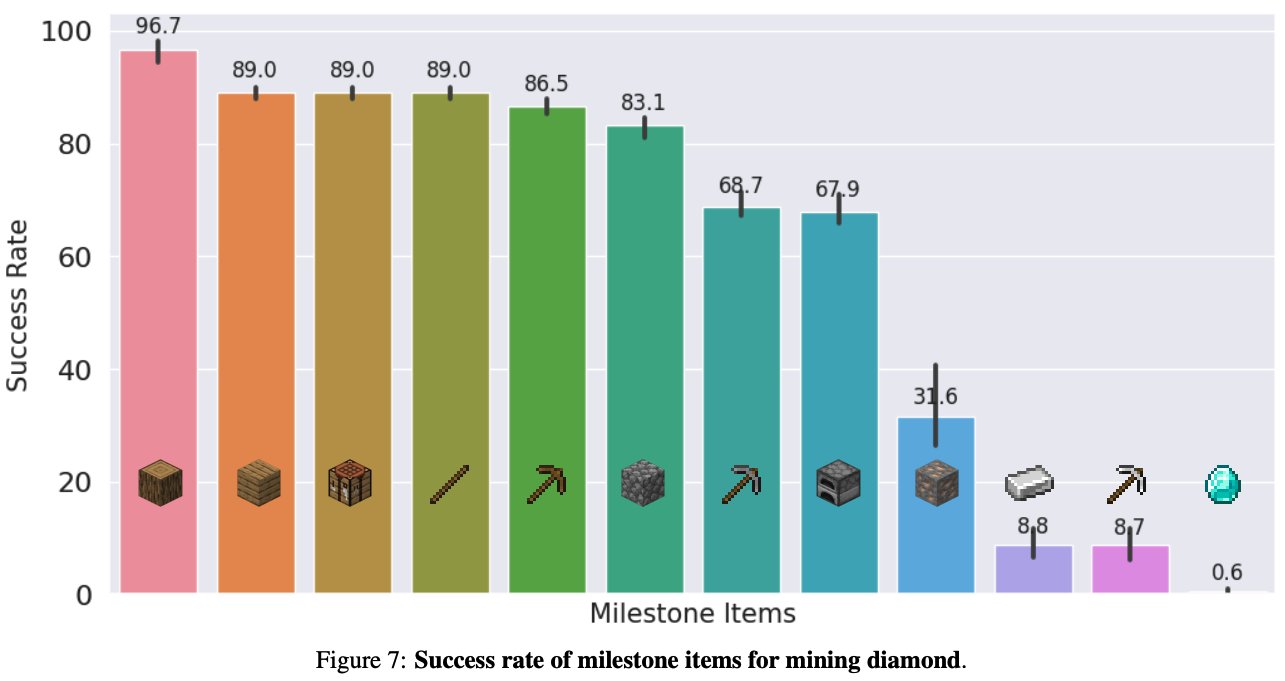

(4/x) We built the **first ever** agent (DEPS) with hierarchical goal execution that can reach the diamonds. Wait... not just that, a language-piloted multi-task MC agent would be nice, @DrJimFan? Here you go! It can also robustly accomplish 70+ tasks in the MC universe.

(5/x) 🤔🤔 Some further thoughts: Planning with LLMs is cool. But can we amortize this system-2 thinking in our transformer-based agent so we can eliminate this bottleneck? Lots of exciting research questions to explore here 🚄🚄⚡️⚡️

Wrapping up and shout out to the great CraftJarvis gang: Zihao Wang, Shaofei Cai, Anji Liu, @YitaoLiang

Special thanks to @DrJimFan for the inspiring MineDojo universe and Project Malmö led by @katjahofmann
