Thread by Elad Gil

- Tweet

- Mar 31, 2023

- #ArtificialIntelligence #Computersecurity

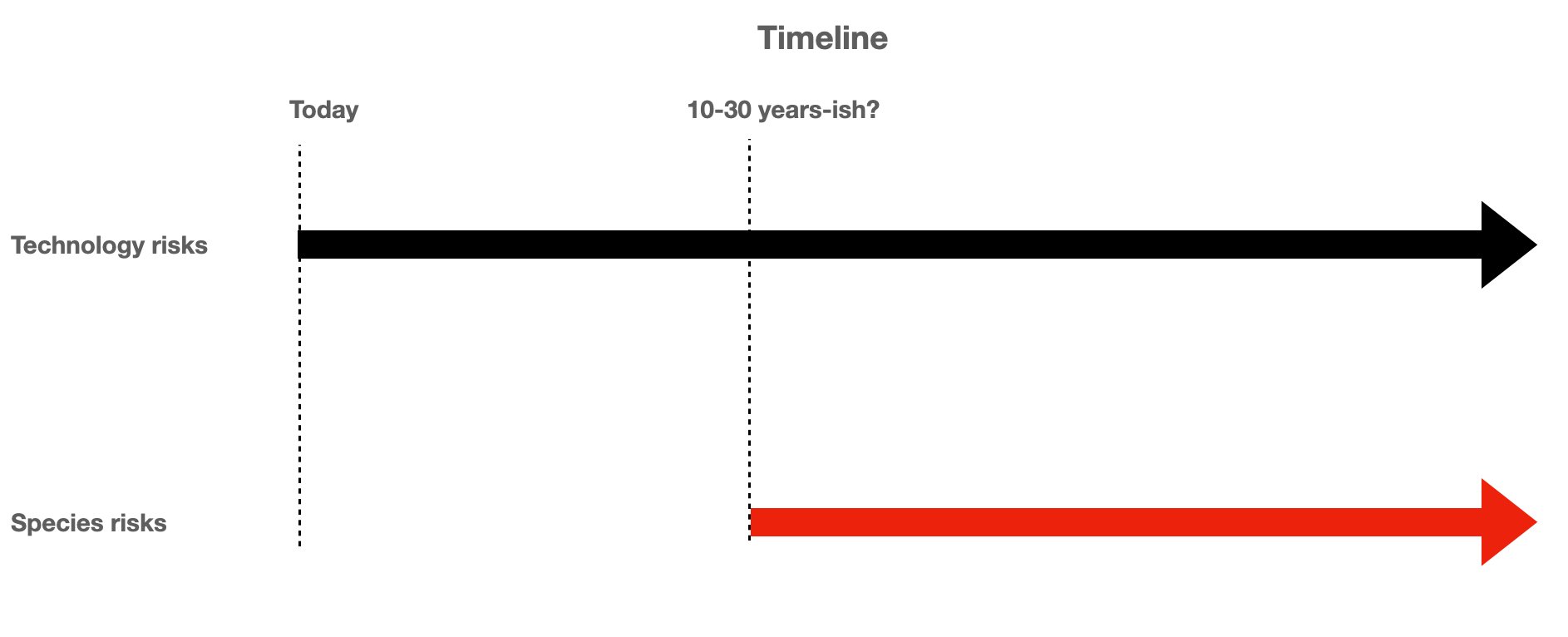

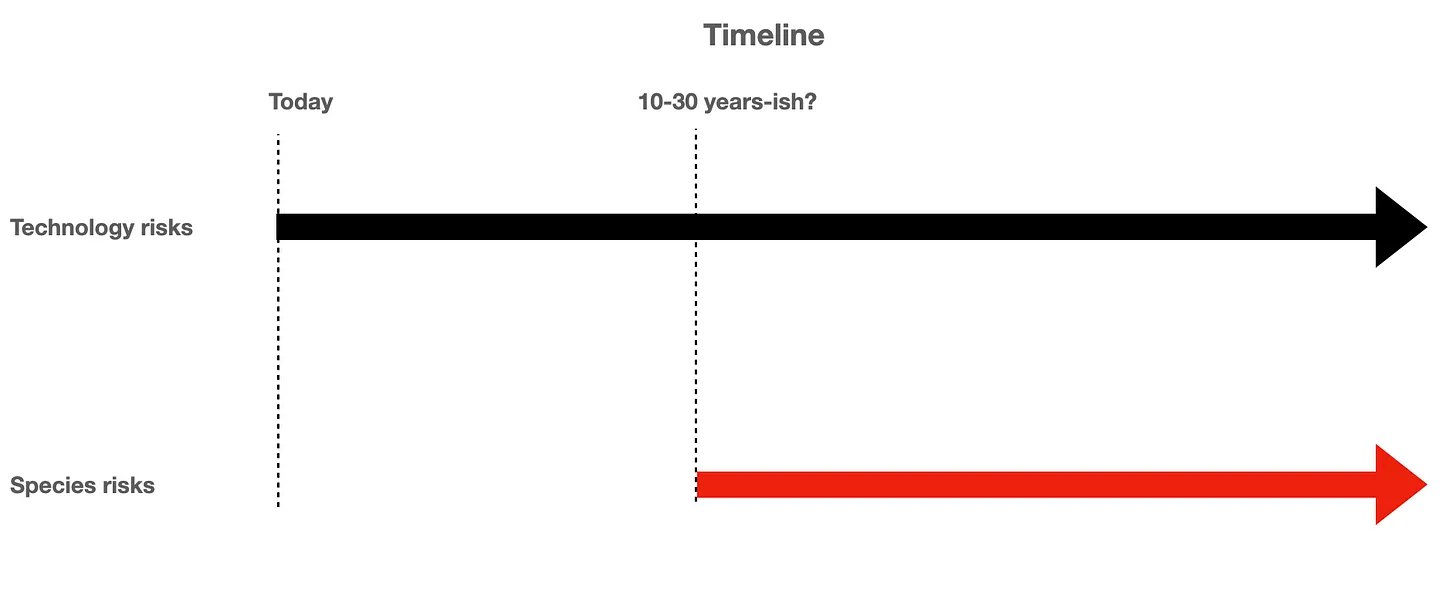

1/N It is useful to distinguish between short-term "AI as tech" risks and longer-term "AI as a risk to our species"

People tend to confuse these and talk past one another

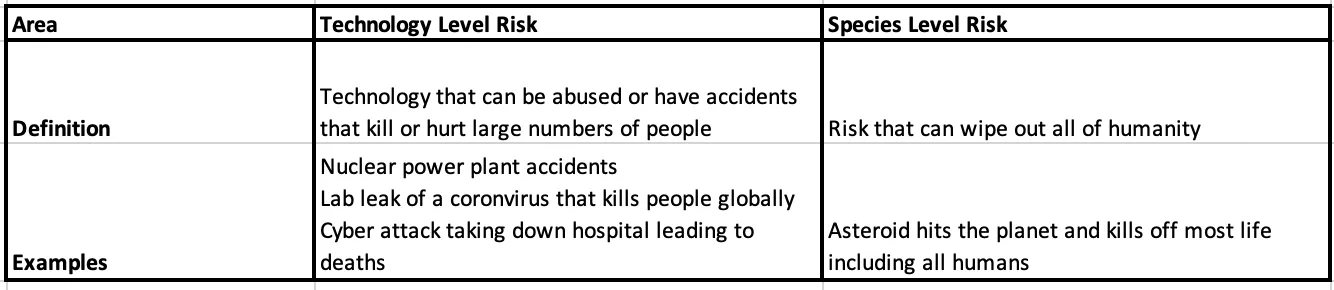

2/N These two types of risk ("tech risk" vs. "species risk") overlap in time, but tech risk is front-loaded

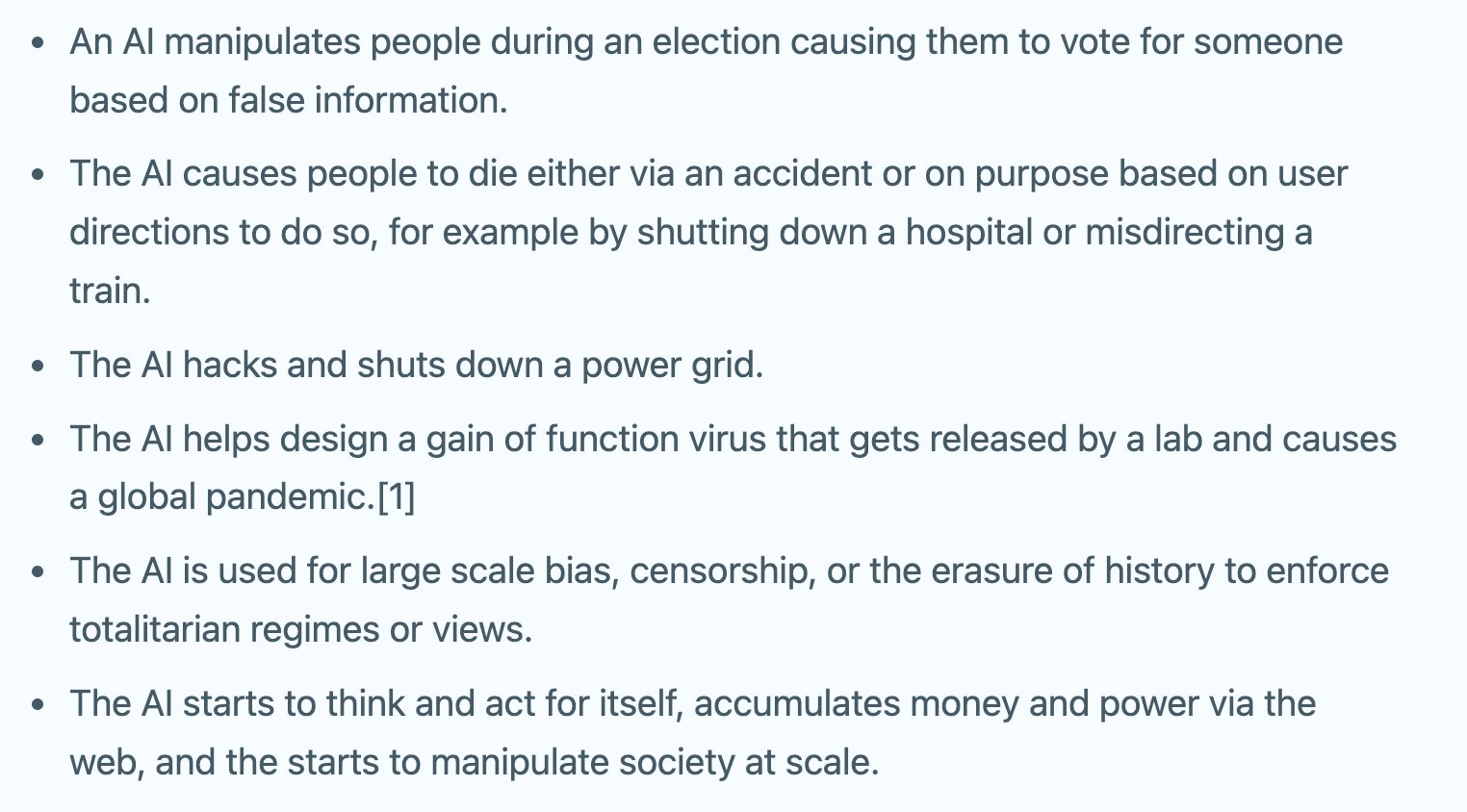

3/N AI as a tech risk is usually non-existential, but it could still cause disasters

Examples might include helping design a virus that is released from a lab and kills a lot of people, or causing a train to crash

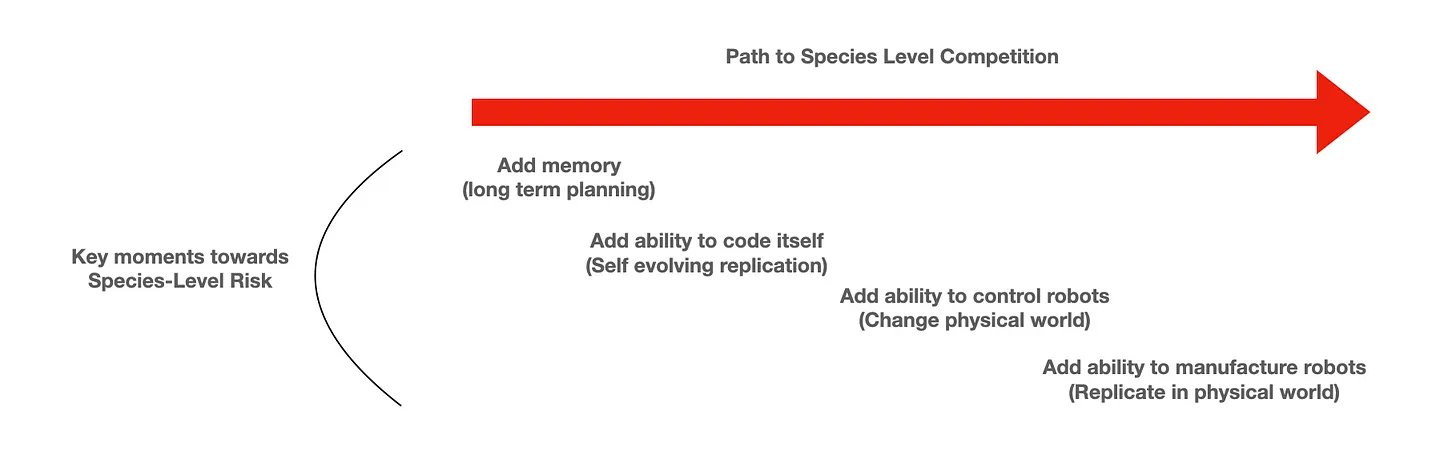

4/N AI as a species risk is about when we get into actual competition with AI as a sentient organism

To get there, we probably need:

1. AI that can code itself (see GitHub Copilot as an early example)

2. Advanced robotics, so AI can manipulate the physical world

5/N Robotics is probably the Rubicon: once crossed, it is hard to walk back.

Before robotics, we could shut down data centers and computers

After robotics, there would need to be physical fighting

There will be an enormous economic incentive for society to use robots, so this is hard to offset

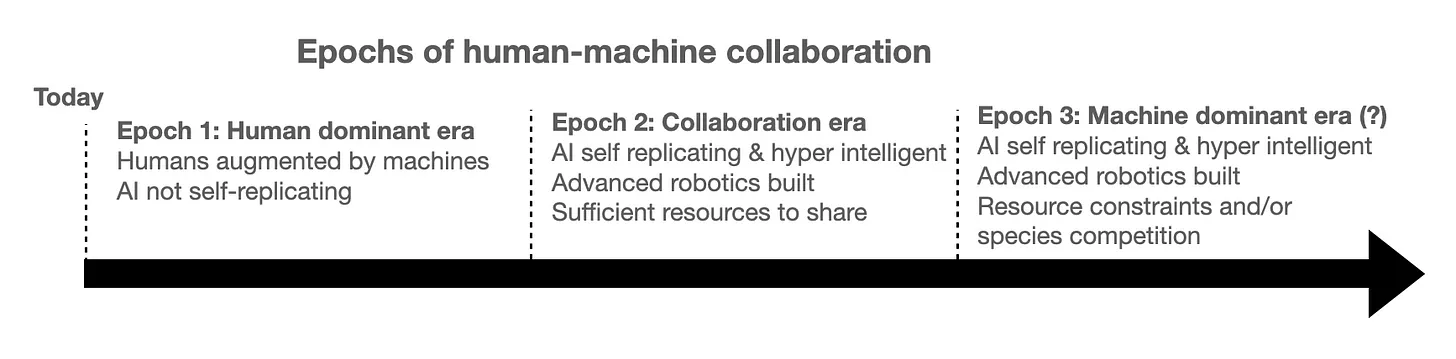

6/N Most likely, we will have 3 ages of humankind:

1. Human-dominant age. Where we are today

2. Collaborative era. AIs have advanced capabilities but work collaboratively with humans

3. Competitive era / machine-dominant age

7/N The competitive age between machines & humans will come from AIs evolving their own utility functions and having physical forms & needs

Should land be used to build cities, or data centers & energy for AI?

Once resources get scarce & 2 species exist side by side, how do we allocate them?

8/N There are various ways to approach safety, from "Constitutional AI" to bans on certain tech

As the AI world evolves, we will learn more about what to do & how to intervene

Now is the wrong time to make rash, knee-jerk decisions, but a good time to think deeply about the future