Thread by Arya AI Dojo 👩🏻💻🤖

- Apr 11, 2023

- #ArtificialIntelligence #Deeplearning

The artificial neuron was inspired by the biological neuron. Early on, there was a lot of interest in understanding how the human brain works and in building a computational/mathematical model that mimics it.

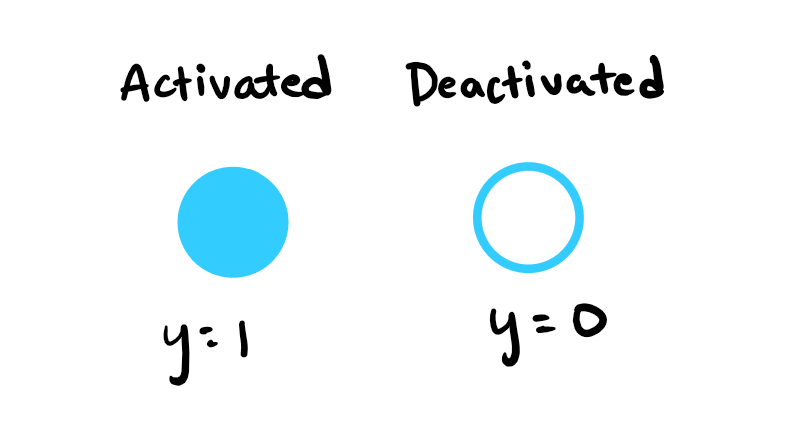

In the earliest neural network models, each neuron is in a state of either activation (1) or deactivation (0)

Whether a neuron activates or deactivates is determined by a preset threshold (θ)

Once the combined input reaches the threshold value, the neuron lights up (activation state 1)

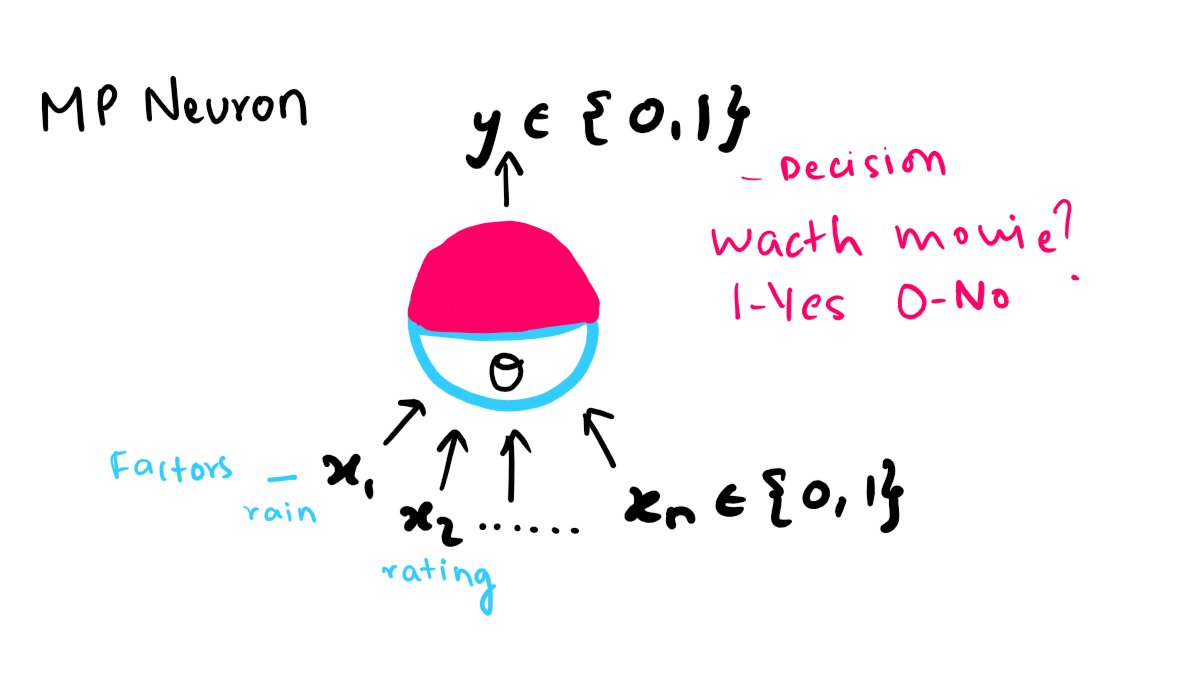

1. McCulloch and Pitts first proposed a simplified computational model of the Neuron

Suppose we want to decide whether to watch a movie or not [1,0]

The neuron takes binary inputs from factors: rain, rating, etc. [X1, X2, ..., Xn]

If the sum of the inputs reaches the threshold θ, Y becomes 1 (the neuron activates)
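
The movie decision above can be sketched in a few lines of Python. This is a minimal illustration, not a reference implementation: the function name `mp_neuron`, the three example factors, and the threshold of 2 are all hypothetical, and it assumes the common textbook convention that the neuron fires when the unweighted sum of its binary inputs is at least θ.

```python
def mp_neuron(inputs, threshold):
    """McCulloch-Pitts style neuron: binary inputs, unweighted sum, hard threshold."""
    if any(x not in (0, 1) for x in inputs):
        raise ValueError("MP neuron inputs must be 0 or 1")
    # Fire (1) when enough inputs are on; otherwise stay off (0).
    return 1 if sum(inputs) >= threshold else 0


# Hypothetical factors: [not_raining, good_rating, friends_available]
print(mp_neuron([1, 1, 0], threshold=2))  # two favourable factors -> 1
print(mp_neuron([1, 0, 0], threshold=2))  # only one favourable factor -> 0
```

Note that every factor counts equally and θ is chosen by hand, which is exactly what the perceptron later relaxes.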

The MP neuron model had limitations:

- did not take weights into account (the relative importance of each factor)

- only handled boolean inputs and outputs

- there was no learning mechanism

- the threshold was hard-coded

These limitations gave birth to perceptrons!

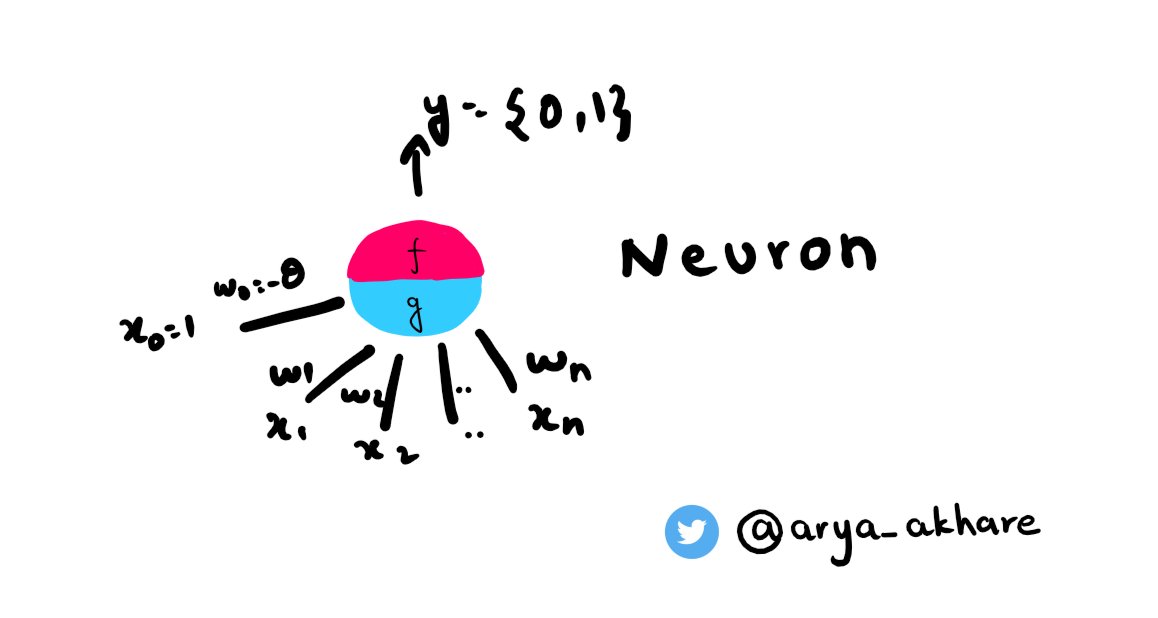

2. Perceptron (Frank Rosenblatt)

The perceptron (artificial neuron) is the basic unit of a neural network.

It is a function that takes its input X,

multiplies it by the learned weight coefficients W,

and generates an output value Y

Y = W·X (a weighted sum, which is then compared with a threshold to give the 0/1 output)

- has weights

- has a learning mechanism

A single perceptron can only learn linearly separable patterns, but multilayer perceptrons (MLPs) can learn non-linear patterns by stacking multiple layers of perceptrons.
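
As a rough sketch of what "has weights" and "has a learning mechanism" mean in practice, the snippet below trains a single perceptron with the classic perceptron update rule on the AND function, which is linearly separable. The helper names (`predict`, `train_perceptron`), the learning rate of 1.0, and the 20 epochs are illustrative assumptions, not something taken from the thread.

```python
def predict(weights, bias, x):
    """Weighted sum of the inputs plus a bias, passed through a hard threshold."""
    activation = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1 if activation >= 0 else 0


def train_perceptron(samples, lr=1.0, epochs=20):
    """Perceptron learning rule: nudge the weights whenever a prediction is wrong."""
    weights, bias = [0.0] * len(samples[0][0]), 0.0
    for _ in range(epochs):
        for x, target in samples:
            error = target - predict(weights, bias, x)  # -1, 0 or +1
            weights = [w + lr * error * xi for w, xi in zip(weights, x)]
            bias += lr * error
    return weights, bias


AND = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
XOR = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]

w, b = train_perceptron(AND)
print([predict(w, b, x) for x, _ in AND])  # -> [0, 0, 0, 1]

# XOR is not linearly separable, so no single perceptron gets all four cases right.
w, b = train_perceptron(XOR)
print([predict(w, b, x) for x, _ in XOR])
```

The bias here plays the role of the (negated) threshold θ, except that it is now learned from examples instead of being hard-coded.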

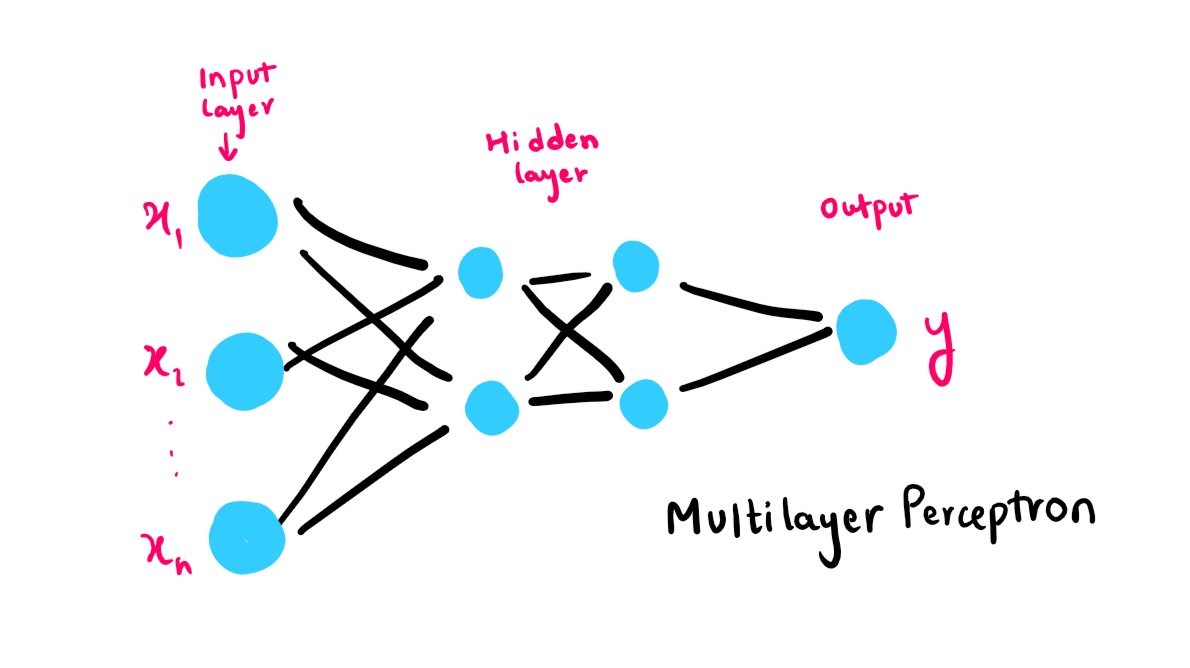

3. Multilayer Perceptron

The multilayer perceptron is a type of artificial neural network that consists of multiple layers of perceptrons. It is capable of handling non-linearly separable problems.

Each layer of perceptrons receives input from the previous layer, and the final output is produced by the output layer.
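
To make the layer-by-layer flow concrete, here is a small sketch of a two-layer network of threshold units that computes XOR, a function no single perceptron can represent. The weights are hand-picked for illustration (they are an assumption, not learned and not from the thread), and the names `step`, `layer`, and `mlp_xor` are hypothetical.

```python
def step(z):
    """Hard threshold activation."""
    return 1 if z >= 0 else 0


def layer(inputs, weights, biases):
    """One layer of perceptrons: each row of weights is one unit."""
    return [
        step(sum(w * x for w, x in zip(row, inputs)) + b)
        for row, b in zip(weights, biases)
    ]


# Hand-picked weights: hidden unit 1 acts as OR, hidden unit 2 acts as AND.
HIDDEN_W, HIDDEN_B = [[1, 1], [1, 1]], [-1, -2]
# Output unit fires when OR is on and AND is off, which is XOR.
OUTPUT_W, OUTPUT_B = [[1, -1]], [-1]


def mlp_xor(x):
    hidden = layer(x, HIDDEN_W, HIDDEN_B)         # input layer -> hidden layer
    return layer(hidden, OUTPUT_W, OUTPUT_B)[0]   # hidden layer -> output layer


print([mlp_xor(x) for x in [(0, 0), (0, 1), (1, 0), (1, 1)]])  # -> [0, 1, 1, 0]
```

In a real network these weights would be found by training rather than set by hand.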

The weights are adjusted using backpropagation, a technique that involves propagating the error from the output layer back to the input layer.

The training of DNNs is typically done using backpropagation, a method for computing the gradient of the error with respect to the weights.

The weights are then updated in the direction opposite to this gradient (gradient descent), stepping down the error surface toward a minimum of the error.
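
The two steps above (compute the gradient with backpropagation, then step against it) can be sketched in plain Python. This is a toy illustration under several assumptions that are not in the thread: a 2-2-1 network with sigmoid units, a squared-error loss, batch updates on the XOR data, a learning rate of 0.5, and arbitrary fixed starting weights. All function and variable names are hypothetical.

```python
import math

DATA = [((0.0, 0.0), 0.0), ((0.0, 1.0), 1.0), ((1.0, 0.0), 1.0), ((1.0, 1.0), 0.0)]


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def forward(params, x):
    """Forward pass of a 2-2-1 network; returns hidden activations and output."""
    w1, b1, w2, b2 = params
    h = [sigmoid(w1[j][0] * x[0] + w1[j][1] * x[1] + b1[j]) for j in range(2)]
    y = sigmoid(w2[0] * h[0] + w2[1] * h[1] + b2)
    return h, y


def mean_squared_error(params):
    return sum((forward(params, x)[1] - t) ** 2 for x, t in DATA) / len(DATA)


def train(params, lr=0.5, epochs=2000):
    """Batch gradient descent; the gradients come from backpropagation."""
    w1 = [row[:] for row in params[0]]  # copy so the caller's weights are untouched
    b1, w2, b2 = params[1][:], params[2][:], params[3]
    n = len(DATA)
    for _ in range(epochs):
        g_w1 = [[0.0, 0.0], [0.0, 0.0]]
        g_b1, g_w2, g_b2 = [0.0, 0.0], [0.0, 0.0], 0.0
        for x, t in DATA:
            h, y = forward((w1, b1, w2, b2), x)
            # Error signal at the output unit: d(y - t)^2 / d(pre-activation).
            delta_out = 2.0 * (y - t) * y * (1.0 - y)
            g_b2 += delta_out
            for j in range(2):
                g_w2[j] += delta_out * h[j]
                # Propagate the error back through w2 to hidden unit j.
                delta_h = delta_out * w2[j] * h[j] * (1.0 - h[j])
                g_b1[j] += delta_h
                for i in range(2):
                    g_w1[j][i] += delta_h * x[i]
        # Step in the direction opposite to the averaged gradient.
        b2 -= lr * g_b2 / n
        for j in range(2):
            w2[j] -= lr * g_w2[j] / n
            b1[j] -= lr * g_b1[j] / n
            for i in range(2):
                w1[j][i] -= lr * g_w1[j][i] / n
    return w1, b1, w2, b2


# Arbitrary starting weights, fixed so the run is repeatable.
initial = ([[0.5, -0.4], [-0.3, 0.6]], [0.1, -0.1], [0.7, -0.6], 0.8)
trained = train(initial)
print("error before:", mean_squared_error(initial))
print("error after: ", mean_squared_error(trained))
```

The error after training should be lower than before; how close it gets to zero depends on the starting weights, the learning rate, and the number of epochs, since gradient descent only guarantees progress toward a local minimum.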

Multilayer perceptrons are used as building blocks when architecting DNNs

- have weights + a learning mechanism

- go beyond boolean logic and deal with non-linear functions

- are capable of learning complex patterns, and have achieved state-of-the-art results in fields like NLP and object recognition

Thank you so much for reading to the end!

If this thread was helpful to you:

🔁Retweet↗️Share❤️Like

⏩Follow me @arya_akhare and

🤝Connect with me: linkedin.com/in/arya-akhare

for more content around ML/AI like this.
