Thread by Jeremy Howard

- Tweet

- Apr 3, 2023

- #ComputerProgramming #Naturallanguageprocessing

Thread

There's a lot of folks under the misunderstanding that it's now possible to run a 30B param LLM in <6GB, based on this GitHub discussion.

This is not the case. Understanding why gives us a chance to learn a lot of interesting stuff! 🧵

github.com/ggerganov/llama.cpp/discussions/638

This is not the case. Understanding why gives us a chance to learn a lot of interesting stuff! 🧵

github.com/ggerganov/llama.cpp/discussions/638

The background is that the amazing @JustineTunney wrote this really cool commit for @ggerganov's llama.cpp, which modifies how llama models are loaded into memory to use mmap

github.com/ggerganov/llama.cpp/commit/5b8023d935401072b73b63ea995aaae040d57b87

github.com/ggerganov/llama.cpp/commit/5b8023d935401072b73b63ea995aaae040d57b87

Prior to this, llama.cpp (and indeed most deep learning frameworks) load the weights of a neural network by reading the file containing the weights and copying the contents into RAM. This is wasteful since a lot of bytes are moving around before you can even use the model

There's a *lot* of bytes to move and store, since a 30B model has (by definition!) around 30 billion parameters. If they've been squished down to just 4-bit ints, that's still 30*4/8=15 billion bytes just for the model. Then we need memory for the data and activations too!

mmap is a really nifty feature of modern operating systems which lets you not immediately read a file into memory, but instead just tell the OS you'd like to access some bits in that file sometime, and you can just grab the bits you need if/when you need them

The OS is pretty amazing at handling these requests. If you ask for the same bits twice, it won't re-read the disk, but instead will re-use the bits that are already in RAM, is possible. This is the "cache".

Early responses to the issue were under the mistaken impression that following the mmap commit, llama.cpp was now only "paging in" (i.e. reading from disk) a subset of the model, by grabbing only the parameters needed for a particular batch of data

This is based on a misunderstanding of how transformers models work. Transformers are very simple models. They are functions which apply the following two operations a bunch of times:

- An MLP with 1 hidden layer

- Attention

- An MLP with 1 hidden layer

- Attention

An MLP (multi-layer) perceptron with one hidden layer is a matrix multiplication followed by an activation function (an elementwise operation such as ReLU: `max(0,x)`), followed by another matrix multiplication.

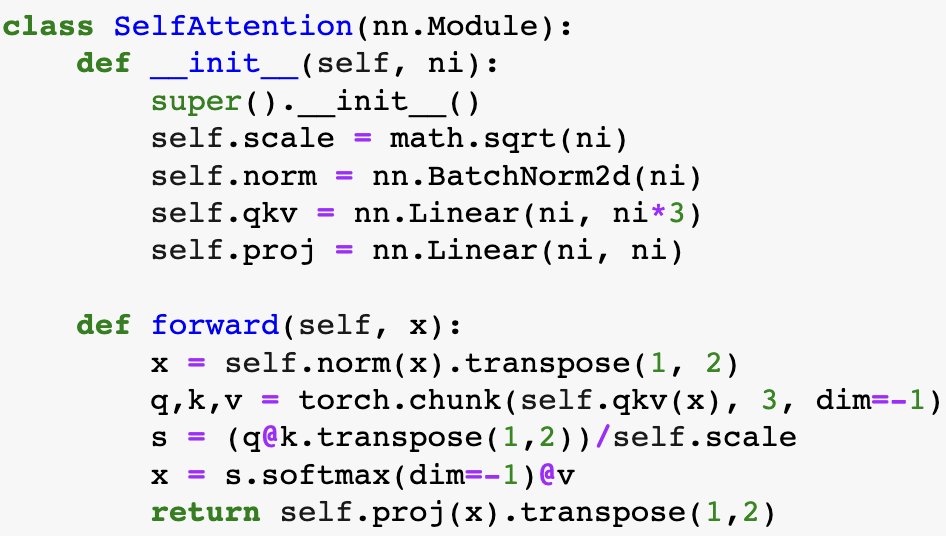

Attention is the operation shown in this code snippet. This one does "self attention" (i.e q, k, and v are all applied to the same input); there's also "cross attention" that uses different inputs.

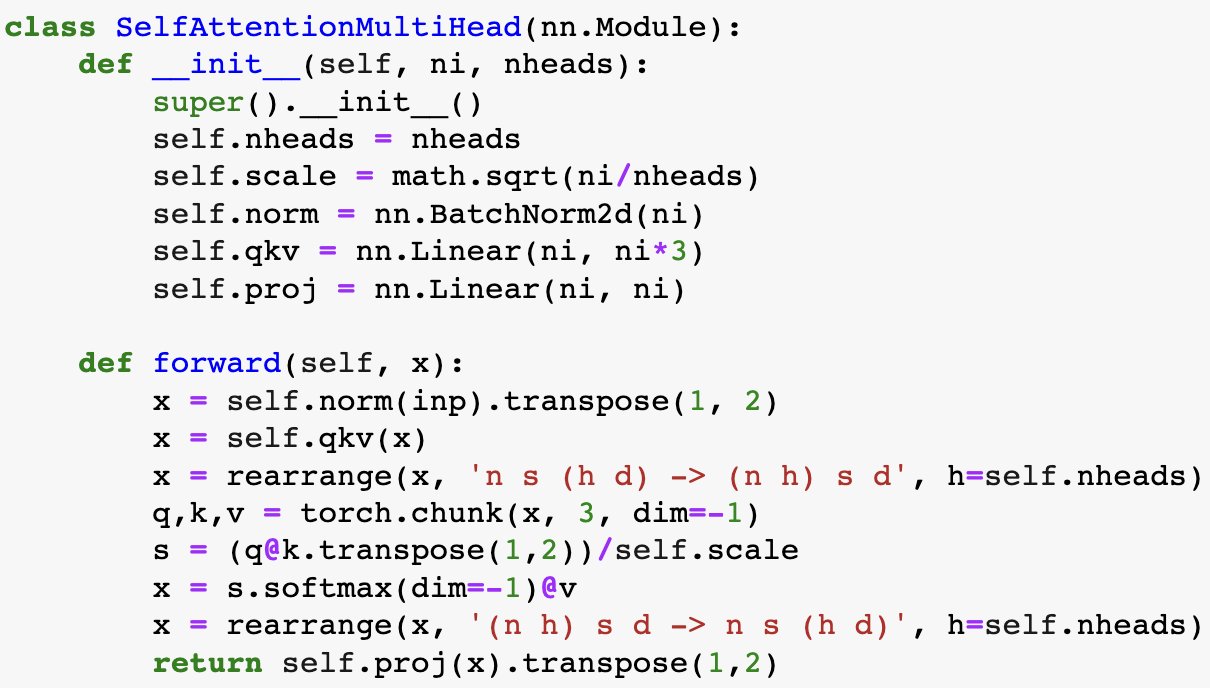

OK that was a slight simplification. Transformers actually use "multi-head" attention which groups channels first. But as you see from the implementation below, it's still just using some matrix multiplies and activation functions.

So every parameter is used, because they're just matrix multiplications and element-wise operations that they're used in. There's no true sparsity occurring here -- there's just some "soft attention" caused by the use of softmax.

Therefore, we don't really get to benefit fully from the magic of mmap, since we need all those parameters for every batch of data. It's still going to save some unnecessary memory copying so it's probably a useful thing to use here, but we still need RAM for all the parameters

The way mmap handles memory makes it a bit tricky for the OS to report on a per-process level how that memory is being used. So it generally will just show it as "cache" use. That's why (AFAICT) there was some initial misunderstanding regarding the memory savings

One interesting point though (mentioned in the GH issue) is that you can now have multiple processes all using the same model, and they'll share the memory -- pretty cool!

Note however that none of this helps us use the GPU better -- it's just for CPU use (or systems with integrated memory like Apple silicon).

By far the best speed is with an NVIDIA GPU, and that still requires copying all the params over to the card before using the model.

By far the best speed is with an NVIDIA GPU, and that still requires copying all the params over to the card before using the model.

Hopefully you found that a useful overview of mmap and transformers. BTW these topics are covered in depth in our upcoming course (the above code is copied from it) which will be released in the next few days, so keep an eye out for that!

h/t @iScienceLuvr for encouraging me to write this thread.

Minor correction: the normalization layer I used here, Batchnorm2D, isn't what's used in a normal language model. I took the code from an image model we built for the course. In most language models we'd use Layernorm for normalization.

D'oh I meant to mention this and forgot!

This is a great point. The first layer of a language model is an "embedding" layer. Mathematically, it's matrix multiply by a bunch of one-hot encoded vectors. But it's implemented as an array index lookup.

This is a great point. The first layer of a language model is an "embedding" layer. Mathematically, it's matrix multiply by a bunch of one-hot encoded vectors. But it's implemented as an array index lookup.

Depending on how it's implemented, it's possible that this could avoid reading some of the embedding weights into memory. However, the OS has a minimum amount of stuff it reads at once around a location, so it might still end up reading most or all of the embeddings.

Having said that, the embedding weights are a fairly small proportion of the model parameters, so this won't generally make a big difference either way.

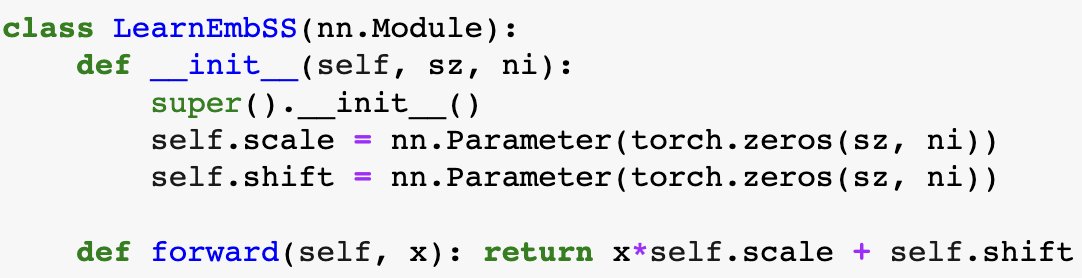

Oops another correction! I forgot to mention positional encoding. This is the layer after the embeddings. It can be implemented using sin/cos across a few freqs ("sinusoidal embeddings") or the code snippet below ("learnable embeddings").

Positional encoding is used to assign a unique vector to each position in the input token vector, so that the model can learn that the location of a words matters -- not just its presence/absence.

(They have few parameters do don't impact memory significantly.)

(They have few parameters do don't impact memory significantly.)

Mentions

See All

Amjad Masad ⠕ @amasad

·

Apr 3, 2023

Great thread by Jeremy on the subject!