Thread by jonstokes(\.com|\.eth)

- Mar 30, 2023

- #ArtificialIntelligence

In which I survey the AI safety wars through the lens of the recent AI letter.

www.jonstokes.com/p/ai-safety-a-technical-and-ethnographic

I take a look at what @sama said on a recent @lexfridman podcast, along with what he's said consistently, and conclude that the whole issue as it's framed in the letter is a hot mess. You either pause all AI development, or gain-of-function proceeds. You can't isolate gain-of-function (GoF) work from the rest.

I think the letter's main ask, that we pause this one specific type of research (gain of function) but keep doing other AI stuff, is fundamentally nonsensical. It doesn't even scan.

Seriously, no part of this makes any sense. I don't think I'm being uncharitable, either. It's just a shambles.

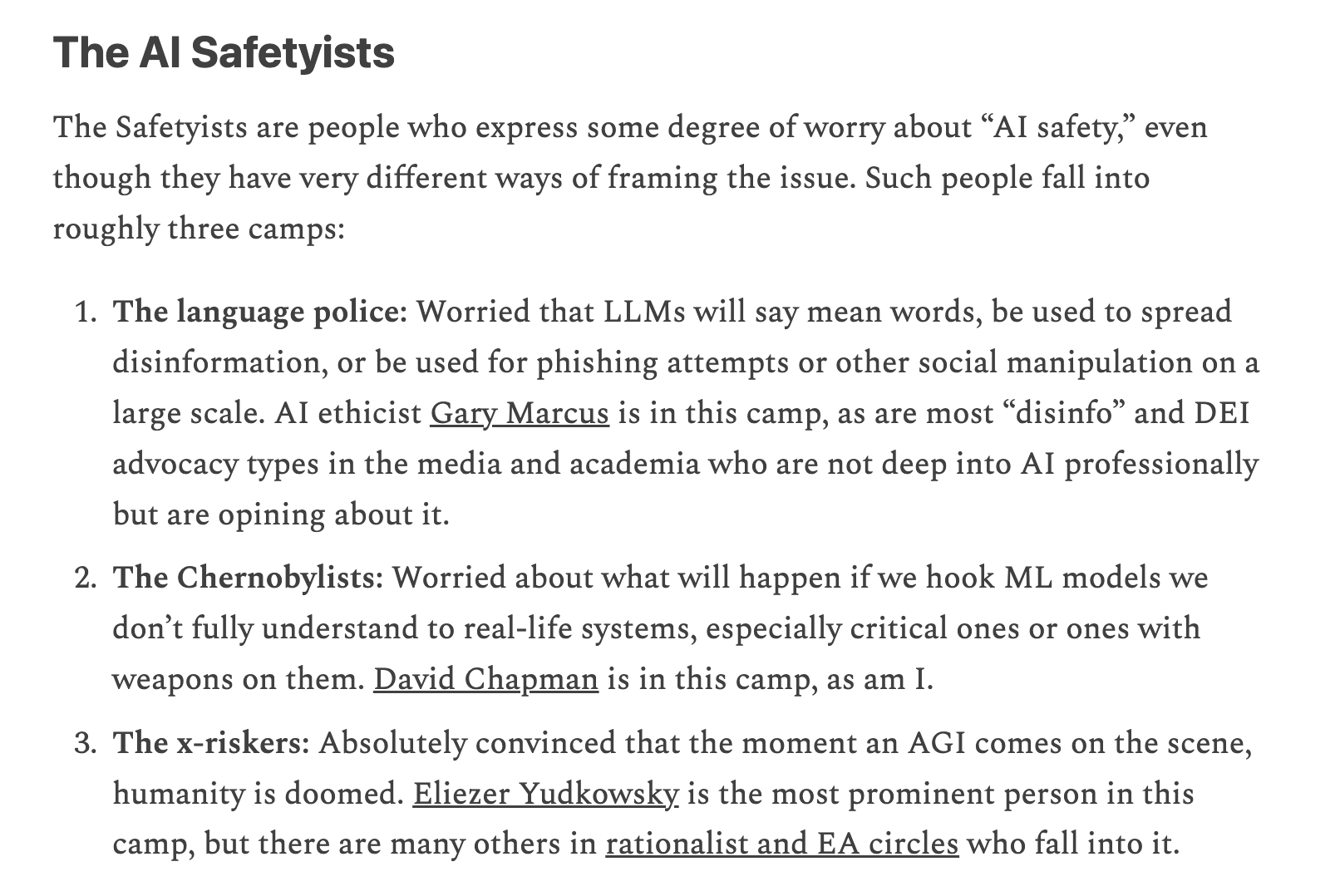

In the ethnography part, I start out with the AI safetyists, and I even locate myself here in the middle category:

Then there are the Boomers, which you can read about in the piece, and finally, my favorite, the Intelligence Deniers.