Ways to think about machine learning

Everyone has heard of machine learning now, and every big company is working on projects around ‘AI’. We know this is a Next Big Thing. But we don’t yet have a settled sense of quite what machine learning means - what it will mean for tech companies or for companies in the broader economy, how to think structurally about what new things it could enable, and what important problems it might actually be able to solve.

We're now four or five years into the current explosion of machine learning, and pretty much everyone has heard of it. It's not just that startups are forming every day or that the big tech platform companies are rebuilding themselves around it - everyone outside tech has read the Economist or BusinessWeek cover story, and many big companies have some projects underway. We know this is a Next Big Thing.

Going a step further, we mostly understand what neural networks might be, in theory, and we get that this might be about patterns and data. Machine learning lets us find patterns or structures in data that are implicit and probabilistic (hence ‘inferred’) rather than explicit, that previously only people and not computers could find. It addresses a class of questions that were previously ‘hard for computers and easy for people’, or, perhaps more usefully, ‘hard for people to describe to computers’. And we’ve seen some cool (or worrying, depending on your perspective) speech and vision demos.

I don't think, though, that we yet have a settled sense of quite what machine learning means - what it will mean for tech companies or for companies in the broader economy, how to think structurally about what new things it could enable, what it means for all the rest of us, and what important problems it might actually be able to solve.

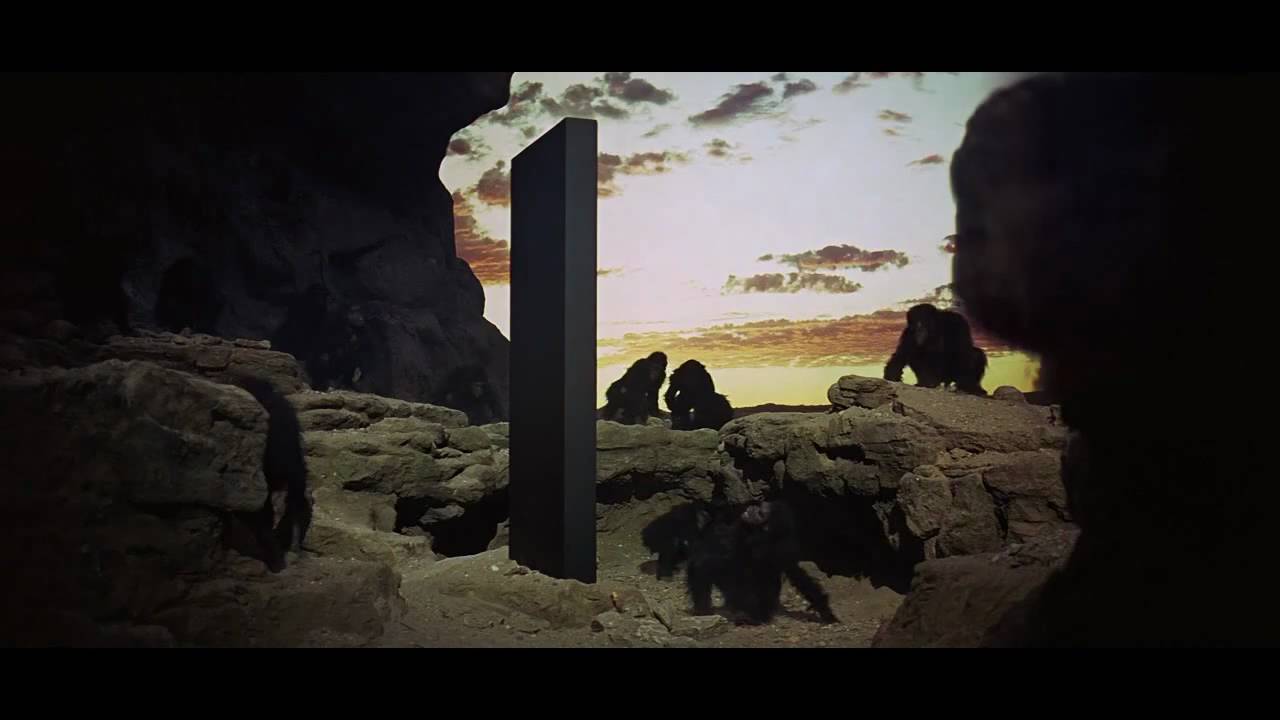

This isn't helped by the term 'artificial intelligence', which tends to end any conversation as soon as it's begun. As soon as we say 'AI', it's as though the black monolith from the beginning of 2001 has appeared, and we all become apes screaming at it and shaking our fists. You can’t analyze ‘AI’.

Indeed, I think one could propose a whole list of unhelpful ways of talking about current developments in machine learning. For example:

- Data is the new oil
- Google and China (or Facebook, or Amazon, or BAT) have all the data
- AI will take all the jobs
- And, of course, saying AI itself.

More useful things to talk about, perhaps, might be:

- Automation
- Enabling technology layers
- Relational databases.

Why relational databases? They were a new fundamental enabling layer that changed what computing could do. Before relational databases appeared in the late 1970s, if you wanted your database to show you, say, 'all customers who bought this product and live in this city', that would generally need a custom engineering project. Databases were not built with structure such that any arbitrary cross-referenced query was an easy, routine thing to do. If you wanted to ask a question, someone would have to build it. Databases were record-keeping systems; relational databases turned them into business intelligence systems.
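To make that concrete, here is a minimal sketch of the 'all customers who bought this product and live in this city' question, using Python's built-in `sqlite3` module. The table names, columns and rows are invented for illustration, and `customers_who_bought` is a hypothetical helper, not anything from a real system. The point is only that once the data is relational, the question becomes a routine join rather than an engineering project.

```python
import sqlite3

# Hypothetical schema and rows, invented purely for illustration.
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT, city TEXT);
    CREATE TABLE purchases (customer_id INTEGER REFERENCES customers(id), product TEXT);
    INSERT INTO customers VALUES (1, 'Ana', 'Lisbon'), (2, 'Ben', 'Leeds'), (3, 'Caro', 'Lisbon');
    INSERT INTO purchases VALUES (1, 'kettle'), (2, 'kettle'), (3, 'toaster');
""")

def customers_who_bought(product, city):
    """Return the names of customers in `city` who bought `product`."""
    rows = conn.execute(
        """
        SELECT DISTINCT c.name
        FROM customers AS c
        JOIN purchases AS p ON p.customer_id = c.id
        WHERE p.product = ? AND c.city = ?
        ORDER BY c.name
        """,
        (product, city),
    )
    return [name for (name,) in rows]

print(customers_who_bought("kettle", "Lisbon"))
```

Changing the question (a different product, a different city, or a different pair of tables altogether) means changing the query, not rebuilding the database.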

This changed what databases could be used for in important ways, and so created new use cases and new billion dollar companies. Relational databases gave us Oracle, but they also gave us SAP, and SAP and its peers gave us global just-in-time supply chains - they gave us Apple and Starbucks. By the 1990s, pretty much all enterprise software was a relational database - PeopleSoft and CRM and SuccessFactors and dozens more all ran on relational databases. No-one looked at SuccessFactors or Salesforce and said "that will never work because Oracle has all the database" - rather, this technology became an enabling layer that was part of everything.

So, this is a good grounding way to think about ML today - it’s a step change in what we can do with computers, and that will be part of many different products for many different companies. Eventually, pretty much everything will have ML somewhere inside and no-one will care.

An important parallel here is that though relational databases had economy of scale effects, there were limited network or ‘winner takes all’ effects. The database being used by company A doesn't get better if company B buys the same database software from the same vendor: Safeway's database doesn't get better if Caterpillar buys the same one. Much the same actually applies to machine learning: machine learning is all about data, but data is highly specific to particular applications. More handwriting data will make a handwriting recognizer better, and more gas turbine data will make a system that predicts failures in gas turbines better, but the one doesn't help with the other. Data isn’t fungible.

This gets to the heart of the most common misconception that comes up in talking about machine learning - that it is in some way a single, general purpose thing, on a path to HAL 9000, and that Google or Microsoft have each built *one*, or that Google 'has all the data', or that IBM has an actual thing called ‘Watson’. Really, this is always the mistake in looking at automation: with each wave of automation, we imagine we're creating something anthropomorphic or something with general intelligence. In the 1920s and 30s we imagined steel men walking around factories holding hammers, and in the 1950s we imagined humanoid robots walking around the kitchen doing the housework. We didn't get robot servants - we got washing machines.

Washing machines are robots, but they're not ‘intelligent’. They don't know what water or clothes are. Moreover, they're not general purpose even in the narrow domain of washing - you can't put dishes in a washing machine, nor clothes in a dishwasher (or rather, you can, but you won’t get the result you want). They're just another kind of automation, no different conceptually to a conveyor belt or a pick-and-place machine. Equally, machine learning lets us solve classes of problem that computers could not usefully address before, but each of those problems will require a different implementation, and different data, a different route to market, and often a different company. Each of them is a piece of automation. Each of them is a washing machine.

Hence, one of the challenges in talking about machine learning is to find the middle ground between a mechanistic explanation of the mathematics on one hand and fantasies about general AI on the other. Machine learning is not going to create HAL 9000 (at least, very few people in the field think that it will do so any time soon), but it’s also not useful to call it ‘just statistics’. Returning to the parallels with relational databases, this might be rather like talking about SQL in 1980 - how do you get from explaining table joins to thinking about Salesforce.com? It's all very well to say 'this lets you ask these new kinds of questions', but it isn't always very obvious what questions. You can do impressive demos of voice recognition and image recognition, but again, what would a normal company do with that? As a team at a major US media company said to me a while ago: 'well, we know we can use ML to index ten years of video of our talent interviewing athletes - but what do we look for?’

What, then, are the washing machines of machine learning, for real companies? I think there are two sets of tools for thinking about this. The first is to think in terms of a procession of types of data and types of question:

- Machine learning may well deliver better results for questions you're already asking about data you already have, simply as an analytic or optimization technique. For example, our portfolio company Instacart built a system to optimize the routing of its personal shoppers through grocery stores that delivered a 50% improvement (this was built by just three engineers, using Google's open-source tools Keras and TensorFlow).
- Machine learning lets you ask new questions of the data you already have. For example, a lawyer doing discovery might search for 'angry’ emails, or 'anxious’ or anomalous threads or clusters of documents, as well as doing keyword searches.
- Machine learning opens up new data types to analysis - computers could not really read audio, images or video before and now, increasingly, that will be possible.

Within this, I find imaging much the most exciting. Computers have been able to process text and numbers for as long as we’ve had computers, but images (and video) have been mostly opaque. Now they’ll be able to ‘see’ in the same sense as they can ‘read’. This means that image sensors (and microphones) become a whole new input mechanism - less a ‘camera’ than a new, powerful and flexible sensor that generates a stream of (potentially) machine-readable data. All sorts of things will turn out to be computer vision problems that don’t look like computer vision problems today.

This isn’t about recognizing cat pictures. I met a company recently that supplies seats to the car industry, which has put a neural network on a cheap DSP chip with a cheap smartphone image sensor, to detect whether there’s a wrinkle in the fabric (we should expect all sorts of similar uses for machine learning in very small, cheap widgets, each doing just one thing). It’s not useful to describe this as ‘artificial intelligence’: it’s automation of a task that could not previously be automated. A person had to look.

This sense of automation is the second tool for thinking about machine learning. Spotting whether there’s a wrinkle in fabric doesn't need 20 years of experience - it really just needs a mammal brain. Indeed, one of my colleagues suggested that machine learning will be able to do anything you could train a dog to do, which is also a useful way to think about AI bias (What exactly has the dog learnt? What was in the training data? Are you sure? How do you ask?), but also limited because dogs do have general intelligence and common sense, unlike any neural network we know how to build. Andrew Ng has suggested that ML will be able to do anything you could do in less than one second. Talking about ML does tend to be a hunt for metaphors, but I prefer the metaphor that this gives you infinite interns, or, perhaps, infinite ten year olds.

Five years ago, if you gave a computer a pile of photos, it couldn’t do much more than sort them by size. A ten year old could sort them into men and women, a fifteen year old into cool and uncool and an intern could say ‘this one’s really interesting’. Today, with ML, the computer will match the ten year old and perhaps the fifteen year old. It might never get to the intern. But what would you do if you had a million fifteen year olds to look at your data? What calls would you listen to, what images would you look at, and what file transfers or credit card payments would you inspect?

That is, machine learning doesn't have to match experts or decades of experience or judgement. We’re not automating experts. Rather, we’re asking ‘listen to all the phone calls and find the angry ones’. ‘Read all the emails and find the anxious ones’. ‘Look at a hundred thousand photos and find the cool (or at least weird) people’.

In a sense, this is what automation always does; Excel didn't give us artificial accountants, Photoshop and InDesign didn’t give us artificial graphic designers and indeed steam engines didn’t give us artificial horses. (In an earlier wave of ‘AI’, chess computers didn’t give us a grumpy middle-aged Russian in a box.) Rather, we automated one discrete task, at massive scale.

Where this metaphor breaks down (as all metaphors do) is in the sense that in some fields, machine learning can not just find things we can already recognize, but find things that humans can’t recognize, or find levels of pattern, inference or implication that no ten year old (or 50 year old) would recognize. This is best seen in DeepMind’s AlphaGo. AlphaGo doesn’t play Go the way the chess computers played chess - by analysing every possible tree of moves in sequence. Rather, it was given the rules and a board and left to try to work out strategies by itself, playing more games against itself than a human could do in many lifetimes. That is, this is not so much a thousand interns as one intern that’s very very fast, and you give your intern 10 million images and they come back and say ‘it’s a funny thing, but when I looked at the third million images, this pattern really started coming out’. So, what fields are narrow enough that we can tell an ML system the rules (or give it a score), but deep enough that looking at all of the data, as no human could ever do, might bring out new results?
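The idea of 'give it the rules and a score, then let it play itself' can be sketched on a toy scale. This is emphatically not how AlphaGo works (that system combines deep neural networks with tree search); it is a small tabular Monte Carlo learner, assumed here purely for illustration, playing a simple stone-taking game in which each move removes one to three stones and whoever takes the last stone wins. The game, the function names and the parameters are all hypothetical choices for the example.

```python
import random

MAX_TAKE = 3   # a move removes 1..3 stones; taking the last stone wins
MAX_PILE = 10  # largest starting pile used in training

def legal_moves(pile):
    return range(1, min(MAX_TAKE, pile) + 1)

def train(episodes=50_000, epsilon=0.2, seed=0):
    """Learn move values purely from self-play; no strategy is supplied."""
    rng = random.Random(seed)
    # value[pile][move]: running average result (+1 win, -1 loss) for the mover
    value = {p: {m: 0.0 for m in legal_moves(p)} for p in range(1, MAX_PILE + 1)}
    count = {p: {m: 0 for m in legal_moves(p)} for p in range(1, MAX_PILE + 1)}

    for _ in range(episodes):
        pile = rng.randint(1, MAX_PILE)
        history = []  # (player, pile_before_move, move)
        player = 0
        while pile > 0:
            moves = list(legal_moves(pile))
            if rng.random() < epsilon:
                move = rng.choice(moves)  # explore
            else:
                move = max(moves, key=lambda m: value[pile][m])  # exploit
            history.append((player, pile, move))
            pile -= move
            player = 1 - player
        winner = history[-1][0]  # whoever took the last stone
        for who, p, m in history:
            result = 1.0 if who == winner else -1.0
            count[p][m] += 1
            value[p][m] += (result - value[p][m]) / count[p][m]
    return value

def best_move(value, pile):
    return max(value[pile], key=value[pile].get)

value = train()
print({pile: best_move(value, pile) for pile in (5, 6, 7)})
```

Nobody tells the program that the trick is to leave the opponent a multiple of four stones; given only the rules and a win/loss score, that pattern is what the learned values should come to favour after enough games.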

I spend quite a lot of time meeting big companies and talking about their technology needs, and they generally have some pretty clear low hanging fruit for machine learning. There are lots of obvious analysis and optimisation problems, and plenty of things that are clearly image recognition problems or audio analysis questions. Equally, the only reason we’re talking about autonomous cars and mixed reality is because machine learning (probably) enables them - ML offers a path for cars to work out what’s around them and what human drivers might be going to do, and offers mixed reality a way to work out what I should be seeing, if I’m looking through a pair of glasses that could show anything. But after we’ve talked about wrinkles in fabric or sentiment analysis in the call center, these companies tend to sit back and ask, ‘well, what else?’ What are the other things that this will enable, and what are the unknown unknowns that it will find? We’ve probably got ten to fifteen years before that starts getting boring.