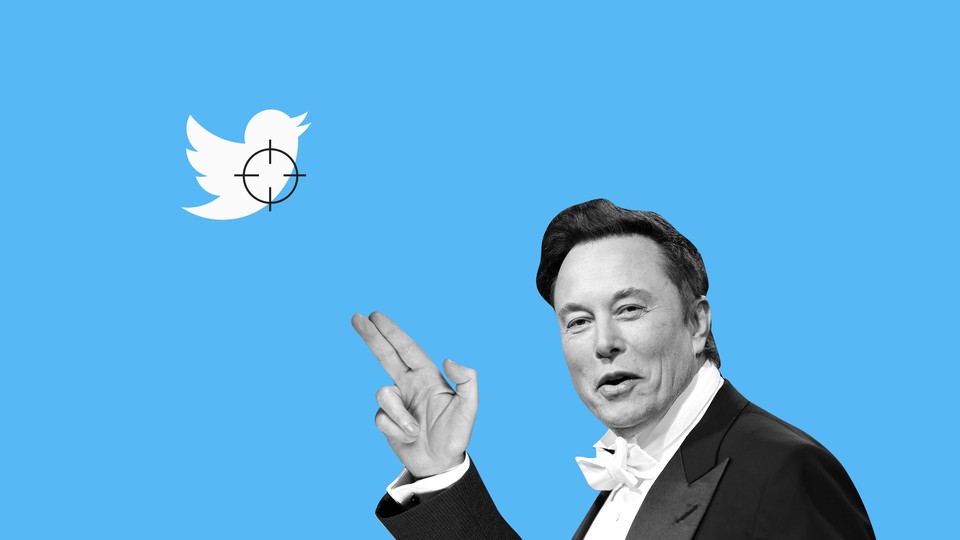

How Elon Musk Could Actually Kill Twitter

There’s more than one way to sink a social network.

Updated at 9:35 p.m. ET on October 27, 2022

Journalists have been declaring Twitter dead for nearly a decade. Observers see flagging user numbers or feel an amorphous, grim vibe shift and pounce, often prematurely. But this week, everyone is fretting and monitoring. Tonight, Elon Musk reportedly took control of Twitter, firing CEO Parag Agrawal and other executives, including Vijaya Gadde, the head of legal, policy, and trust. There is, both inside and outside the company, an apocalyptic feel to the ordeal.

Earlier, Musk wandered around Twitter’s headquarters in San Francisco, carrying a porcelain sink (for content purposes) while simultaneously trying to convince employees that he will not, as previously reported, cut 75 percent of staff. One current Twitter staffer told me that “the bootlicking [was] next level” as anxious employees greeted Musk in the hallway, unsure of his plans for his new company and their place in it.

Outside the company, power users are mulling plans to bail, and sharing a report that Twitter is already on life support. My timelines are full of earnest eulogies for the platform or fears that it will turn into a 4chan clone now that Musk is taking the reins. People are waxing nostalgic, sharing greatest-hits threads of good tweets. Dara Lind, a reporter, summed it up succinctly, noting that the whole thing has “big, big last-night-of-camp energy.”

It seems foolish to try to predict what a mercurial person like Musk—who loves to troll and to float ridiculous ideas in public—will actually do to the platform. But it is impossible to ignore that his tenure is an inflection point for the company and, perhaps, for the 2.0 generation of social-media companies, which have been battered by misinformation, a techlash, and changing online behaviors. Platforms and networks rise and fall and even die out naturally—just look at MySpace—but there’s not much precedent for what’s happening with Twitter: A culturally resonant and politically influential platform could, quite suddenly, flame out as the result of new ownership.

Naturally, this has led me to wonder, and to ask those with experience at large platforms, what could Elon Musk actually do to kill Twitter?

Those I spoke with agreed that Musk likely couldn’t flip a proverbial switch to destroy the platform immediately. Any harebrained, Muskian idea for a new feature couldn’t get implemented overnight. One former senior employee I spoke with also argued that high-profile, controversial decisions (like the reinstatement of Donald Trump or Alex Jones) would certainly drive some people off the service but would be unlikely, on their own, to cause a mass exodus. They cited past mass-quit movements like #DeleteFacebook and #DeleteUber as historical analogues, suggesting that it’s pretty hard to get huge numbers of people to log off as part of a moral stand. That said, Twitter already appears to be hemorrhaging power users, and it’s unclear how much more the platform can take.

But Musk could certainly kneecap Twitter via inept management. If he really does cut a significant chunk of Twitter staff, that would cause an organizational nightmare. Even if one assumes there’s bloat in the company, former employees argued that Twitter could still lose all kinds of institutional knowledge in the shuffle. That institutional knowledge would be useful in a crisis—the kind that social-media companies have all the time, such as when high-profile users go renegade, or the site goes down, or traffic unexpectedly surges. Those I spoke with were especially worried about losing site-reliability engineers and members of the internal trust-and-safety team, which handles content moderation.

Even if Musk’s cuts don’t affect these departments, his ownership could possibly trigger a wave of resignations from employees in key infrastructural roles.

“These sites—no matter how talented the engineering organization—are often held together by a series of fragile, legacy systems, the precise functioning of which is only truly known to a few people,” Jason Goldman, a member of Twitter’s early team, a former board member, and the company’s former vice president of product, told me. “Without even factoring in nefarious intent, it is easy to imagine scenarios where big mistakes happen because of the kind of disruption Twitter is about to endure. The exact nature of the mistake is impossible to predict, but the increased likelihood of a mistake happening is a reasonable assumption. And it’s more likely to be from some small error that compounds than it is from the large decisions that often end up in the spotlight.”

Sources described a few nightmare scenarios that could legitimately hobble Twitter, which is still used by more than 200 million people every day:

1. Outside hackers and/or hostile foreign governments focus their hacking efforts on Twitter. Because of the massive layoffs and org-chart chaos, Twitter is unable to adequately address the attacks, causing catastrophic breaches, loss of personal information, or extended outages.

2. A stripped-down trust-and-safety team is unable to deal with government subpoenas or complex law-enforcement requests. A bare-bones team might, for example, accidentally assist outside efforts to identify anonymous dissidents and activists in foreign countries.

3. The trust-and-safety team is unable to stop coordinated efforts from fraudsters orchestrating low-level scams. Similarly, a strapped trust-and-safety department is unable to combat or monitor child-sexual-abuse material, sex-trafficking efforts, nonconsensual pornography, and copyright violations.

4. An inexperienced engineer pushes some buggy code and part of the site’s functionality goes down, but the people with expertise in that area of site reliability are not there to help restore it.

5. Musk does indeed roll back Twitter’s content-moderation rules and reduces tools for monitoring and reporting abuse on the platform. As Kate Klonick, an associate professor at St. John’s University Law School who studies content moderation, argued recently, a lack of speech governance, or a dismantled trust-and-safety apparatus, will result in a bad product, less engagement, lower ad revenue for the company, and, ultimately, more radicalized communities.

These scenarios are hypothetical, but they illustrate a truism about platforms: They do not run themselves. They are made up of humans, many of whom have complex jobs overseeing niche parts of the social network, much of which is unseen to the average user.

One former trust-and-safety engineer for a large social network told me that many elements of the job that seem boring or straightforward are actually incredibly fraught, like how to define and take action on different kinds of spam. Trust-and-safety officers in charge of such efforts aren’t just dealing with Viagra ads or crypto-scam bots; they’re figuring out how to handle bulk messages from legitimate political organizers exploiting the platforms for mass messaging. As one person put it, there are good actors and bad actors and also “spammy but not necessarily malicious businesses trying to get you to buy things in between, and all those things can look very similar to machine-learning models.”

Those with trust-and-safety experience at the platform told me that a big percentage of the job is dealing with the messy edge cases that are difficult for a computer to decipher. Programs might be able to address specific product quirks if a user files a clear help ticket reporting an obvious problem. “But if I wrote in, ‘My account has been hacked because it “accidentally” liked a porn tweet on 9/11 and I’m U.S. Senator Ted Cruz,’ that’s going to be a lot for a computer to unpack,” Brian Truebe, a former Twitter trust-and-safety professional, told me over email.

“A lot of things humans say and do are only easily interpretable/decoded by other humans,” he continued. “And when all speech is happening in a few places, those few places need more humans to review, not fewer.”

Reactionary tech figures such as Musk like to imply that content-moderation teams act as a kind of thought police. But these teams largely work on protecting users’ privacy, complying with laws, or keeping the site from becoming overrun by the kind of spam that no human wants to encounter. “To really have a robust security-and-abuse team, you need a massive amount of actual humans to respond and filter things that need to be filtered out,” Southey Blanton, a systems technician who worked in trust and safety at MySpace, told me. Blanton said that cuts to his team led to a skeleton crew of moderators, who had to rely on imprecise AI tools to get rid of bots and spam—which led to many legitimate human accounts getting banned as well. “Overall, a social-media site is under attack, as well as being overwhelmed, basically 24/7, 365,” he said. “I am fully convinced that if Musk does what he is saying he will do, it will be an absolute shitshow.”

Klonick echoed the sentiment. “Language and the meaning of language always evolves, but on the internet, that happens a billion times faster,” she told me. “And if what online speech governance does is manage the harms of how people communicate, it has to be constantly working and changing. It’s not like an oil change.”

Even under leadership that values moderation, Twitter isn’t exactly known for peace and harmony. There are numerous reasons for this. The tech journalist Ryan Broderick suggested in his newsletter that “Twitter has never been able to deal with the fact its users both hate using it and also hate each other,” and that the platform’s architecture causes such frequent context collapse and infighting that its least aggressive and obnoxious users tend to leave or just lurk. If Twitter is struggling with this now, imagine the impact if Musk does decide to turn the platform into a maximalist speech Thunderdome. The truth that the anti-“woke” warriors refuse to acknowledge is that the economic success of platforms depends on thoughtful, swift content moderation that strikes a balance between open dialogue and chaos. This morning, in a letter to advertisers in which he used the bloodless, platitudinal language of a veteran social-media executive, Musk wrote that Twitter cannot become a “free-for-all hellscape.”

Most of us understandably think of technology platforms in abstract terms. When tech titans like Musk or his text-message friends wonder what all those employees at Twitter are doing, they are, quite foolishly, looking at a social network as if it were a basic piece of machinery. “There’s often a supposition that sites like Twitter must work like a car; maybe they need some routine maintenance every year, but under the hood they mostly just work,” Goldman, the former Twitter VP, told me. But Twitter isn’t a car; it’s a living, breathing, dynamic entity.

Living, breathing things do one thing quite reliably: They eventually die, for all kinds of reasons. They die of natural causes, or because of direct harm. They die because of unforeseeable events. Musk very well could kill Twitter out of malice or hubris, or through calculated, boneheaded decisions. But one possibility seems more likely than others. If Twitter dies at the hands of this billionaire, the cause is likely to be tragically banal—neglect.