Excerpted from Ways of Being: Animals, Plants, Machines: The Search for a Planetary Intelligence by James Bridle. Published by Farrar, Straus and Giroux. Copyright © 2022 by James Bridle. All rights reserved.

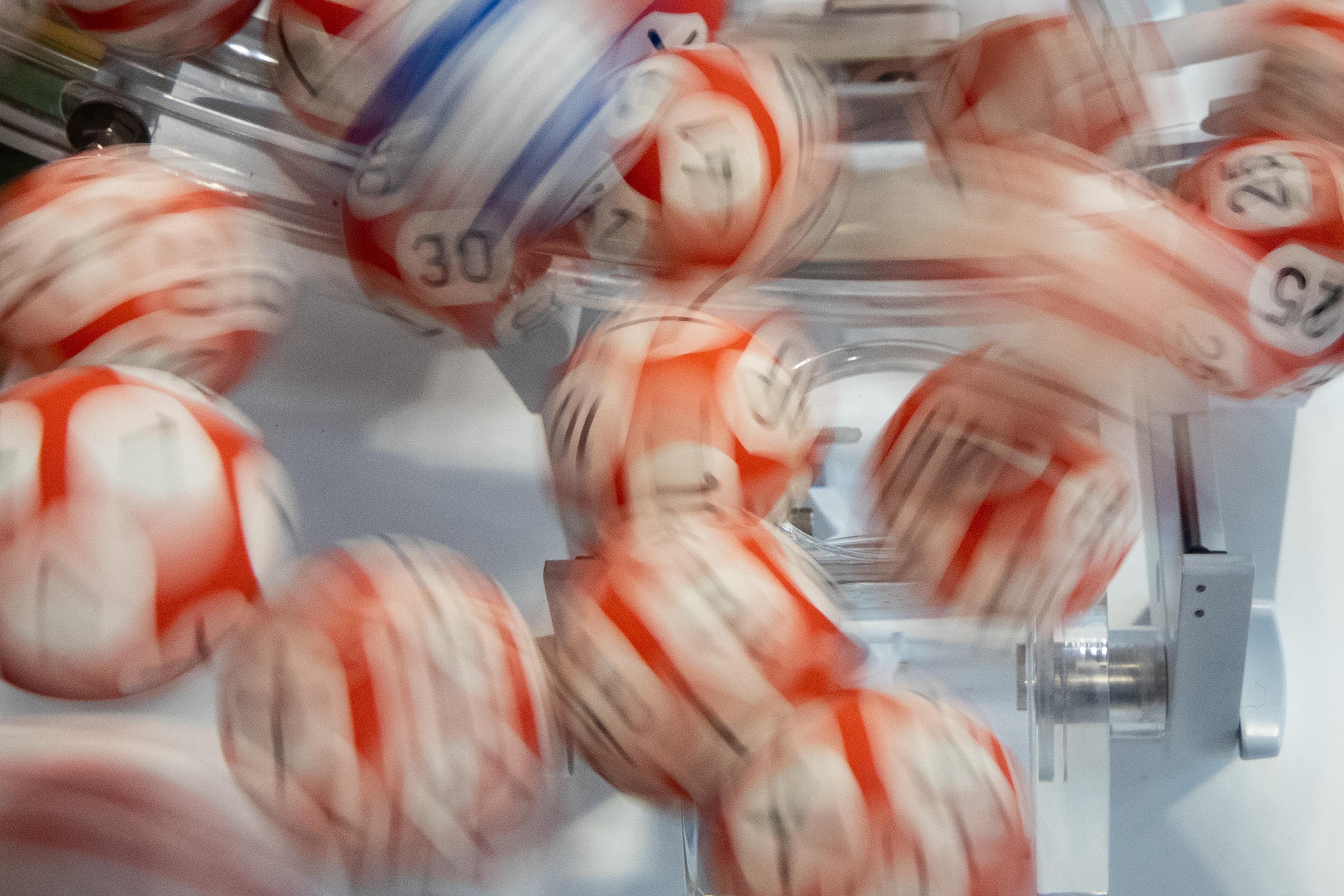

In Athens in the fourth century BCE, in one of the earliest experiments with what we now call democracy, elections did not involve votes in a way we would recognize. Instead, most positions of government, from the council to the criminal juries, were assigned by a method called sortition, or selection by lot. A machine called the kleroterion used a sequence of colored balls to determine who would occupy which post. While we think of ancient Greece as the birthplace of our modern electoral system, the Greeks themselves considered this machine-enabled random selection to be the cornerstone of their equality. Aristotle declared, “It is accepted as democratic when public offices are allocated by lot; and as oligarchic when they are filled by election.”

Millennia later, this randomness is absent not only from our elections but from our technology as well. By the ancient Greeks’ reckoning, this makes our machines incapable of being true agents of equality.

True randomness is a slippery thing: It is a property not of things in themselves, like individual numbers, but of their relationship to one another. One number is not random; it only becomes random in relation to a sequence of other numbers, and the degree of its randomness is a property of the whole group. You can’t be random, in modern parlance, without having some shared baseline of normality or appropriateness to measure yourself against. Randomness is relational.
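The relational point can be made concrete with one standard statistical check, the frequency (monobit) test described in NIST Special Publication 800-22. It says nothing about a single bit; it only scores a whole sequence, by asking whether ones and zeros occur in roughly equal numbers. The function name `monobit_p_value` is our own, and this is a minimal sketch: real assessments run a battery of such tests, since a sequence can pass one and fail another.

```python
import math


def monobit_p_value(bits: list[int]) -> float:
    """Frequency (monobit) test, as described in NIST SP 800-22.

    Maps each bit to +1 or -1, sums them, and turns the normalized imbalance
    into a p-value. A small p-value (commonly < 0.01) means the sequence has
    too many ones or too many zeros to look random.
    """
    n = len(bits)
    if n == 0:
        raise ValueError("need at least one bit")
    total = sum(1 if b else -1 for b in bits)
    s_obs = abs(total) / math.sqrt(n)
    return math.erfc(s_obs / math.sqrt(2))


# A lopsided sequence fails; a perfectly alternating one "passes" this test,
# which is why no single test settles whether a sequence is random.
print(monobit_p_value([1] * 100))    # a vanishingly small p-value: rejected
print(monobit_p_value([0, 1] * 50))  # 1.0: balanced, though plainly patterned
```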

The problem modern computers have with randomness is that it doesn’t make mathematical sense. You can’t program a computer to produce true randomness, in which no element has any consistent, rule-based relationship to any other element, because a program is itself a set of rules. There would always be some underlying structure to its output, some mathematics of its generation, which would allow you to reverse-engineer and re-create it. Ergo: not random.
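A linear congruential generator, one of the oldest pseudo-random recipes, shows why rule-based output can be re-created. Each value is computed from the previous one as x(n+1) = (a * x(n) + c) mod m, so anyone who knows the constants and sees one output can predict the next. The constants below are the widely published ones from Numerical Recipes, chosen purely for illustration; nothing in the text says any particular system used them.

```python
from typing import Iterator

# Widely published LCG constants (from "Numerical Recipes"); illustrative only.
A = 1664525
C = 1013904223
M = 2**32


def lcg(seed: int) -> Iterator[int]:
    """Yield an endless stream of pseudo-random 32-bit integers."""
    state = seed % M
    while True:
        state = (A * state + C) % M
        yield state


gen = lcg(seed=2022)
observed = next(gen)                # an observer sees this value...
predicted = (A * observed + C) % M  # ...and applies the same rule
actual = next(gen)
print(predicted == actual)          # True: the "random" stream is fully determined
```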

This is a major problem for all sorts of industries that rely on random numbers, from credit card companies to lotteries, because if someone can predict the way in which your randomness function operates, they can hack it, akin to a gambler sneaking marked cards into the game. This is in fact the way many such thefts have been performed. In 2010, an Iowa State Lottery official manipulated the lottery’s random number generator in such a way as to be able to predict the draw on certain days: He picked up at least $14 million before he was caught. In Arkansas, the Lottery Commission’s own deputy director of security stole more than 22,000 lottery tickets between 2009 and 2012 and won almost $500,000 in cash, again by manipulating the underlying code that picked the numbers.
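The danger described above can be illustrated with a deliberately weak, hypothetical design: a draw produced by Python’s `random` module seeded with the current time in whole seconds. With only 3,600 possible seeds in an hour, an observer who sees one draw can try them all, recover the seed, and replay the generator. This is a generic textbook weakness, not a reconstruction of how the Iowa or Arkansas frauds were carried out; `draw_numbers`, `recover_seed`, and the timestamps are invented for the example.

```python
import random
from typing import List, Optional


def draw_numbers(seed: int, k: int = 6, pool: int = 49) -> List[int]:
    """Hypothetical lottery draw: k distinct numbers from 1..pool, seeded by a timestamp."""
    rng = random.Random(seed)
    return sorted(rng.sample(range(1, pool + 1), k))


def recover_seed(observed: List[int], start: int, end: int) -> Optional[int]:
    """Try every candidate timestamp in [start, end] until one reproduces the draw."""
    for candidate in range(start, end + 1):
        if draw_numbers(candidate) == observed:
            return candidate
    return None


# Hypothetical scenario: the draw was seeded with a Unix timestamp some time
# in the hour after midnight UTC on 1 January 2010.
window_start = 1_262_304_000
secret_seed = window_start + 1_234    # unknown to the attacker
observed = draw_numbers(secret_seed)  # the published draw, known to everyone

found = recover_seed(observed, window_start, window_start + 3_599)
print(found, draw_numbers(found) == observed)
# If found equals the true seed (overwhelmingly likely here),
# random.Random(found) replays every later output of the generator as well.
```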

To repeat: computers are incapable, by design, of generating truly random numbers, because no number produced by a deterministic mathematical operation is truly random. That’s precisely why many lotteries still use systems like rotating tubs of numbered balls: These are still harder to interfere with, and thus to predict, than software running on even the most powerful supercomputer. Nevertheless, computers need random numbers for so many applications that engineers have developed incredibly sophisticated ways of obtaining what are called “pseudo-random” numbers: numbers generated by machines in such a way as to be effectively impossible to predict. Some of these are largely mathematical, such as taking the time of day, adding another variable like a stock market price, and performing a complex transformation on the result to produce a third number. This final number is so hard to predict that it is random enough for most applications, but if you keep using the same method, careful analysis will always reveal some underlying pattern. In order to generate true, uncrackable randomness, computers need to do something very strange indeed. They need to ask the world for help.
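In Python, this split appears as two standard-library modules. `random` is a deterministic pseudo-random generator (the Mersenne Twister), and its documentation warns against using it for security; `secrets` instead reads from the operating system’s cryptographic random source, which operating systems typically keep seeded with unpredictable hardware events, a small everyday case of a computer asking the world for help. The sketch below only shows the `secrets` side; the variable names and the 6-from-49 draw are our own illustration.

```python
import secrets

# SystemRandom reads from the operating system's random source (os.urandom).
# Its seed() method is a no-op, so there is no seed for an attacker to recover.
os_rng = secrets.SystemRandom()
draw = sorted(os_rng.sample(range(1, 50), 6))

# For keys and tokens, the secrets helpers are the documented choice.
token = secrets.token_hex(16)            # 16 random bytes shown as 32 hex characters
winner_index = secrets.randbelow(1000)   # uniform integer in [0, 1000)

print(draw, token, winner_index)
```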

A case study in true machine randomness is ERNIE, the computer used to pick the winning Premium Bonds, a lottery run by the U.K. government since 1956. The first ERNIE (an acronym for Electronic Random Number Indicator Equipment) was developed by the engineers Tommy Flowers and Harry Fensom at the Post Office Research Station and was based on a previous collaboration of theirs: Colossus, the wartime machine that helped break the German Lorenz cipher at Bletchley Park. ERNIE was one of the first machines able to produce true random numbers, but in order to do so it had to reach outside itself. Rather than simply doing math, it was connected to a series of neon tubes: gas-filled glass tubes, similar to those used for neon lighting. The electrical current through the gas in the tubes was subject to all kinds of interference outside the machine’s control: passing radio waves, atmospheric conditions, fluctuations in the electrical power grid, and even particles from outer space. By measuring the noise in the tubes, the changes in electrical flux within the neon gas caused by this interference, ERNIE could produce numbers that were truly random: statistically verifiable, but completely unpredictable.

Subsequent ERNIEs used ever more sophisticated versions of the same approach and closely followed the technological trends of their times. ERNIE 2, which debuted in 1972, was half the size of its predecessor and designed specifically to resemble one of the computers in the James Bond film Goldfinger. ERNIE 3, which followed in 1988, was the size of a desktop computer. It took just five and a half hours to complete the draw, five times faster than its predecessor. ERNIE 4 reduced that time to two and a half hours and dispensed with the neon tubes, using the thermal noise of its internal transistors, together with a sophisticated algorithm. ERNIE’s most recent incarnation, ERNIE 5, has since March 2019 been picking the Premium Bonds by examining the quantum properties of light itself.
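Raw physical noise, whether from a neon tube or a transistor, is unpredictable but rarely unbiased: a noisy circuit may read as 1 more often than 0, so hardware random number generators post-process their raw bits. The text does not say which algorithm ERNIE 4 used, so this sketch swaps in a classic and much simpler technique, John von Neumann’s debiasing trick: read bits in pairs, keep the first bit when the two differ, and discard equal pairs. The biased source is simulated with `random` purely as a stand-in for a physical sensor; `von_neumann_extract` is our own name.

```python
import random


def von_neumann_extract(raw_bits: list[int]) -> list[int]:
    """Remove bias from independent but biased bits.

    Bits are read in non-overlapping pairs: (0, 1) yields 0, (1, 0) yields 1,
    and (0, 0) or (1, 1) are discarded. The two kept outcomes are equally
    likely, so the output is unbiased if the input bits are independent.
    """
    out = []
    for first, second in zip(raw_bits[0::2], raw_bits[1::2]):
        if first != second:
            out.append(first)
    return out


# Simulated stand-in for a physical noise source that reads 1 about 70% of the time.
sensor = random.Random(0)
raw = [1 if sensor.random() < 0.7 else 0 for _ in range(100_000)]
clean = von_neumann_extract(raw)

print(f"raw ones:   {sum(raw) / len(raw):.3f}")      # roughly 0.70
print(f"clean ones: {sum(clean) / len(clean):.3f}")  # roughly 0.50, from far fewer bits
```

The price of the correction is throughput: with a 70/30 source, only about 21 percent of input pairs survive.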

ERNIE maps the evolution of computers over more than six decades, from room-sized tangles of wires and circuit boards, through bulky mainframes and desktop boxes, all the way down to highly specialized, microscopic silicon chips able to detect individual photons. But each incarnation has done something few machines do: It has looked outside its own circuitry to commune with the more-than-human world that surrounds it, in the service of true randomness.

In the intervening years, other creative ways of generating randomness with machines have been developed. Lavarand, initially proposed as a joke by workers at the supercomputer firm Silicon Graphics, uses a digital camera pointed at a lava lamp to draw truly random numbers from the lamp’s endless, chaotic fluctuations. The online security firm Cloudflare, which protects millions of websites from hacking and other disruption, actually put Lavarand to work: At Cloudflare’s headquarters in San Francisco, shelves lined with 80 lava lamps provide a backup source of randomness for its servers. HotBits, another hobbyist project, uses a radiation detector pointed at a sample of radioactive cesium-137, which emits beta particles at random intervals as it decays. Random.org, a popular online source of true random numbers, started out using a $10 receiver from RadioShack to measure atmospheric radio noise; it now draws on a network of aerials and processing stations around the world.
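The lava-lamp approach works because a camera image of a chaotic scene contains more unpredictable detail than anyone could guess, and a cryptographic hash can condense that detail into a fixed-size seed. The sketch below is a generic illustration of that idea, not Silicon Graphics’ or Cloudflare’s implementation: the hypothetical helper `seed_from_frame` hashes a frame’s raw bytes with SHA-256 and mixes in bytes from the operating system’s random source, and the “frame” is placeholder bytes rather than real camera data.

```python
import hashlib
import os


def seed_from_frame(frame: bytes, extra_entropy: bytes) -> bytes:
    """Condense a camera frame plus extra entropy into a 32-byte seed (hypothetical helper).

    Each input is length-prefixed before hashing so that different splits of
    the same bytes between the two arguments cannot produce the same digest.
    """
    h = hashlib.sha256()
    for part in (frame, extra_entropy):
        h.update(len(part).to_bytes(8, "big"))
        h.update(part)
    return h.digest()


# Placeholder "frame": a real system would pass pixel data from the camera.
frame = bytes(range(256)) * 100
seed = seed_from_frame(frame, os.urandom(32))
print(seed.hex())  # 64 hex characters, different on every run because of os.urandom
```

Mixing in a second source means a frozen or failed camera weakens the seed rather than making it fully predictable.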


Each of these machines is admitting to the same flaw: Given the way we have constructed them, computers are not capable, operating alone, of true randomness. To exercise this crucial faculty, they must be connected to such diverse sources of uncertainty as fluctuations in the atmosphere, decaying minerals, shifting globules of heated wax, and the quantum dance of the universe itself. On the other hand, they are confirming something beautiful. In order to be full and useful participants in the world, computers need to have relations with it. They need to touch and be in touch with the world. This stands in stark opposition to the way we build most of them today: as systems of inscrutable, inhuman logic, comprehensible, and then only partly, to a narrow cadre of highly trained and highly privileged engineers. These systems rest on processes of extraction, manufacture, and use that damage the planet in multiple ways, from large-scale mineral mining, through the heat and greenhouse gases produced by server farms, to vast fields of electronic waste.

But the use of randomness, both the processes it invokes and the radical equality it makes possible, suggests it doesn’t have to be this way. We can reimagine our technologies, and our political systems, in ways that are less extractive, more generative, and ultimately more just. We might just have to get random to do it.

Future Tense is a partnership of Slate, New America, and Arizona State University that examines emerging technologies, public policy, and society.